Author: Yuval Mehta

Originally published on Towards AI.

Machine learning can feel glamorous when you are tuning models for Kaggle datasets or demoing a GPT wrapper. But in production? It is a grind.

You are not just building a model. You are building a system that ingests messy data from real users, transforms it across distributed nodes, trains a model that does not break halfway through, and serves predictions daily or even hourly. And that is where PySpark MLlib shines, not as a modeling tool, but as infrastructure.

MLlib in 2025: not just surviving, still scaling

PySpark MLlib is not flashy. It will not give you the latest architecture or a trendy trick. But it still supports what 90% of enterprise machine learning actually looks like: huge datasets, repetitive training jobs, consistent pipelines, and scale without excuses.

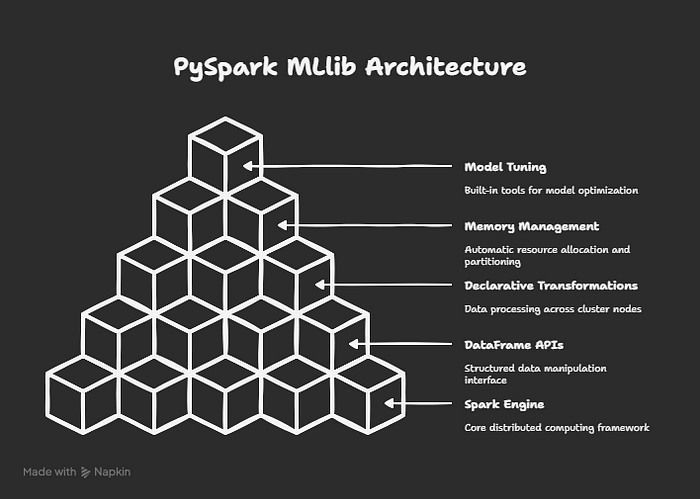

Built directly on the Spark engine, MLlib pipelines leverage:

- The DataFrame API (no more RDDs),

- Declarative transformations across nodes,

- Automatic memory management and partitioning, and

- Tools for model tuning and evaluation.

Everything runs natively on distributed clusters. No wrappers. No hacks. Just infrastructure that has been battle-tested for a decade.

The pipeline is the product

Forget about the model for a second.

What you really want in production is a pipeline. Something that reliably:

- Ingests 100M rows of raw data,

- Featurizes, encodes, scales, and joins them,

- Trains models in a distributed way, and

- Serves predictions without breaking under drift, scale, or time.

That is the product. Not the model weights. Not the validation AUC. The end-to-end pipeline.

MLlib pipelines let you declare that entire flow. Every step, from `StringIndexer` down to `LogisticRegression`, becomes a stage. These stages fit, transform, and evaluate at scale, without forcing you to stitch each part together by hand. More importantly, they are reusable, serializable, and deployable. No copying from notebooks into job schedulers.

Why scale requires a different way of thinking

Here is what most teams get wrong when moving from scikit-learn to MLlib:

They treat scale as a hardware problem, not a systems problem.

But when you go from 100,000 records to 100 million, everything changes:

- In-memory joins break.

- `collect()` calls crash the driver.

- You can't track lineage in hand-rolled functions.

- Drift, skew, and pipeline inconsistencies destroy reproducibility.

MLlib forces you to think differently. It rewards declarative pipelines, consistent schema definitions, and clean feature lineage. Not because it is opinionated, but because it knows what happens when systems get large.

2025 improvements that actually matter

Spark has been evolving quietly and wisely. If you have not touched MLlib in a few years, here is what is new and worth noting:

Unified DataFrame-only API

The old RDD-based MLlib is long outdated. Everything is now built on top of Spark SQL's Catalyst optimizer, which means transformations are faster, safer, and memory-aware.

Adaptive Query Execution (AQE)

Spark 3.5+ optimizes your pipeline at runtime. It can switch join strategies, rebalance partitions, and adapt to skew based on the actual characteristics of the data, not just what you assumed.

Pandas UDF support

Yes, you can now write Pandas UDFs in your pipeline. This means you can plug in custom logic without giving up distributed execution. A rare and welcome middle ground between ease and scale.

GPU acceleration

With `DeepspeedTorchDistributor` and unified executor integration, MLlib now supports distributed GPU training. This brings PyTorch into Spark workflows without rewriting pipelines.

A use case that hits hard: churn prediction for 50 million customers

Let's get specific. No code, just context.

You work at a telco. You have:

- Daily usage logs (1 TB+),

- Customer service chat summaries (semi-structured),

- Subscription and billing information (relational),

- And a model to build that predicts customer churn before it happens.

What MLlib gives you:

- A Pipeline structure to encode all categorical variables across millions of users,

- VectorAssembler to keep track of features,

- CrossValidator to test many regParam and elasticNetParam values, distributed,

- And a final .fit() that not only finishes, but does so without blowing up.

Your pipeline runs every day. It recovers automatically. It logs at every step. And because it is saved as a single MLlib object, it can be loaded tomorrow, cross-validated next week, and served via MLflow or custom batch jobs.

This is not science fiction. This is what companies do every day, with MLlib under the hood.

What MLlib is not (and that is fine)

To be clear, MLlib is not perfect.

- It is not CatBoost or LightGBM.

- You will not find state-of-the-art neural networks.

- Custom training loops are harder to write.

- Its verbosity can be annoying for prototyping.

But that is the price you pay for scale. MLlib is not about shiny. It is about stability. If you need transformers, fine-tuned embeddings, or zero-shot generalization, use Hugging Face.

But if your ML system must handle billions of rows, run reliably, and integrate directly with your data lake, MLlib is still unmatched.

Tuning is not a nice-to-have: it is required

Most teams that complain about MLlib "underperforming" have skipped this part.

MLlib ships with native `CrossValidator` and `TrainValidationSplit`. Use them.

Configure parameter grids. Let Spark train and evaluate many models in parallel. Sure, it takes time, but it saves you from shipping a brittle model that works great in a notebook and falls apart at scale.

MLlib also supports model evaluation:

- `BinaryClassificationEvaluator`

- `MulticlassClassificationEvaluator`

- `RegressionEvaluator`

These evaluators compute metrics in a distributed fashion, without the need to pull predictions onto a single node.

At scale, that is survival.

When to use MLlib (and when not)

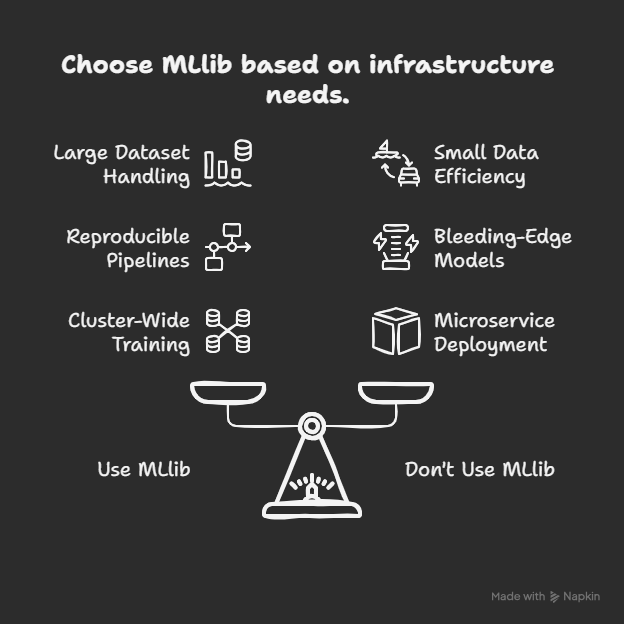

📈 Use MLlib when:

- Your dataset does not fit in memory

- You want full pipeline reproducibility

- You already work with Spark (ETL + modeling)

- You need cluster-wide training and validation

- You need fault tolerance and job orchestration

🧪 Do not use MLlib when:

- You need bleeding-edge architectures

- Your data is small and fast-moving

- You are deploying microservices or fine-tuned models with custom application logic

MLlib is not always the best fit. But when it fits, it fits like infrastructure.

MLlib is not the future of ML – it is the foundation

Tools come and go. Model APIs evolve. But pipelines? Pipelines remain.

And PySpark MLlib gives you a pipeline structure built to survive versioning, team handoffs, data drift, and production failures.

It is not flashy. It is not hype. But when you are on call at 2 am, trying to figure out why your churn model broke after a schema change, a declarative, testable MLlib flow will feel like the smartest decision you made all year.

📚 Resources for the modern MLlib engineer

Published via Towards AI