Author(s): Aditya Gupta

Originally published on Towards AI.

Introduction

What would be easier: teaching someone to play the guitar who has already learned the piano, or teaching someone who has never touched a musical instrument? Most of us would agree that the person with piano experience would pick up guitar much faster. They already understand concepts like reading music, rhythm, and finger coordination, which transfer easily to the new instrument.

Transfer learning in artificial intelligence works in a very similar way. Training a model from scratch can be expensive and time-consuming. It requires massive amounts of data and computing resources, and even then, achieving high performance is not guaranteed. Transfer learning provides a shortcut: it allows AI models to use knowledge gained from a previous task and apply it to a new, related task.

For example, a neural network trained to recognize everyday objects in images, like cats and dogs, can be adapted to identify medical images of skin conditions without starting the training process from zero. This approach saves time, reduces the need for large datasets, and often improves model accuracy.

What Is Transfer Learning?

Think of learning to cook a new cuisine. If you already know how to cook Italian food, you don’t start from zero when learning to make Mexican dishes. You already know how to handle a knife, measure ingredients, and balance flavors. You just need to learn the specifics of the new cuisine.

Transfer learning works the same way in AI. It is the technique of taking a pre-trained model — one that has already learned to perform a task — and adapting it to a new, but related, task. Instead of training a model from scratch, the model can “reuse” what it already knows and apply it to a different problem.

Key terms to know:

- Source Task: The original task the model was trained on (for example, classifying cats and dogs).

- Target Task: The new task you want the model to perform (for example, identifying different types of animals or skin conditions).

- Knowledge Transfer: The process of applying what the model learned in the source task to the target task.

This approach is especially helpful when the new task has limited data, because the model already has a foundation of learned features. It’s like standing on the shoulders of someone who has done the hard work before you.

Why Use Transfer Learning?

Imagine you are starting a new job, but you have years of experience in a similar field. You don’t need to learn everything from scratch; your prior experience helps you adapt faster, make better decisions, and avoid common mistakes. Transfer learning works in the same way for AI models.

Here are the main benefits:

- Saves Time and Computation

Training a deep learning model from scratch can take days or even weeks and requires powerful GPUs. With transfer learning, you start with a pre-trained model that already knows a lot about patterns and features, so training on a new task is much faster.

- Requires Less Data

Large datasets are often hard to collect. Transfer learning allows models to perform well even when the new task has limited data, because the model reuses knowledge learned from a larger, related dataset.

- Improves Performance

Models that use transfer learning often achieve higher accuracy than models trained from scratch, especially for specialized tasks like medical image analysis or sentiment detection.

- Reduces Risk of Overfitting

By using pre-trained features, the model relies on well-learned patterns rather than memorizing a small dataset, which helps it generalize better to new data.

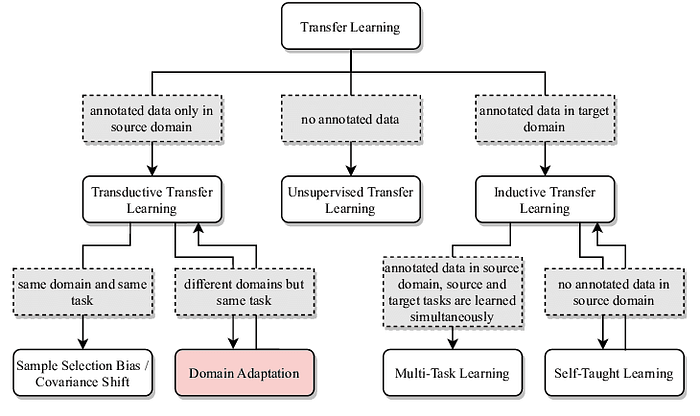

Types of Transfer Learning

Transfer learning is not a one-size-fits-all solution. Depending on the relationship between the source task and the target task, it can be classified into different types. Here are the main ones:

1. Inductive Transfer Learning

- What it is: The target task is different from the source task, but labeled data is available for the target task.

- Analogy: It’s like learning to drive a car after already knowing how to ride a bike. You need to learn new rules (like traffic laws), but your existing sense of balance and control helps.

- Example in AI: Using a model trained to recognize objects in images to classify different types of animals, with labeled images for the new task.

2. Transductive Transfer Learning

- What it is: The source and target tasks are the same, but the domains differ (different data distributions). Usually, no labeled data is available for the target domain.

- Analogy: Imagine speaking English in the UK vs. in Australia. The language is the same, but accents, slang, and expressions differ. You adapt what you know to a slightly different context.

- Example in AI: Adapting a sentiment analysis model trained on movie reviews to work on product reviews without labeled product review data.

3. Unsupervised Transfer Learning

- What it is: Both source and target tasks are unsupervised, meaning no labeled data is available for either. Knowledge is transferred to improve clustering, anomaly detection, or representation learning.

- Analogy: Learning to organize your books by genre, then using the same method to organize your music collection without predefined categories.

- Example in AI: Using embeddings learned from one text corpus to cluster another corpus of unlabeled text.

4. Domain Adaptation

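- What it is: The model adapts knowledge from a source domain so it can perform the same task well in a target domain whose input data follows a different distribution. It is often treated as a special case of transductive transfer learning.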

- Analogy: Driving a car in a city you know well versus a new city with slightly different traffic patterns. You adjust your skills to the new environment.

- Example in AI: A model trained to detect objects in daytime images being adapted to work on nighttime images.
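To make domain adaptation a little more concrete, here is a minimal NumPy sketch of one simple feature-alignment idea, in the spirit of CORAL (CORrelation ALignment): features from the source domain (say, daytime images) are whitened and then re-colored so that their covariance, and in this sketch also their mean, match the target domain (nighttime images). This is a swapped-in illustrative technique, not the only way to do domain adaptation; the helper names, the `eps` regularizer, and the synthetic feature data are assumptions for illustration.

```python
import numpy as np

def _sym_matrix_power(mat, power):
    """Raise a symmetric positive-definite matrix to a real power via eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    return (eigvecs * eigvals ** power) @ eigvecs.T

def coral_align(source, target, eps=1e-6):
    """Hypothetical helper: map source features so their covariance and mean match the target.

    Whiten the centered source features with C_s^(-1/2), re-color them with
    C_t^(1/2), then shift them to the target mean (an extra, simple step).
    """
    dim = source.shape[1]
    src_centered = source - source.mean(axis=0)
    cov_s = np.cov(src_centered, rowvar=False) + eps * np.eye(dim)  # eps keeps it invertible
    cov_t = np.cov(target, rowvar=False) + eps * np.eye(dim)
    whitened = src_centered @ _sym_matrix_power(cov_s, -0.5)
    return whitened @ _sym_matrix_power(cov_t, 0.5) + target.mean(axis=0)

# Synthetic stand-ins for features extracted from two domains (illustration only)
rng = np.random.default_rng(42)
day_features = rng.normal(size=(500, 3)) @ np.array([[1.0, 0.3, 0.0],
                                                      [0.0, 1.0, 0.5],
                                                      [0.0, 0.0, 1.0]])
night_features = rng.normal(loc=2.0, scale=[0.5, 2.0, 1.0], size=(500, 3))

aligned = coral_align(day_features, night_features)
```

In this style of adaptation, a classifier would then be trained on the aligned source features (using their source labels) and applied to the unlabeled target features.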

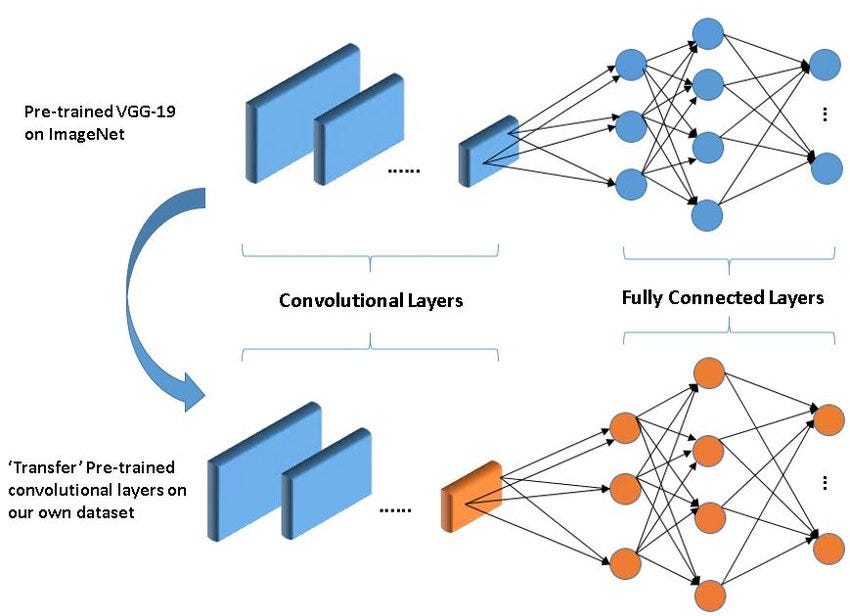

How Does Transfer Learning Work?

Transfer learning involves reusing knowledge from a model trained on one task to improve performance on a new, related task. The main steps are:

1. Pre-training on the Source Task

- A model is trained on a large dataset for an initial task to learn general patterns and features.

- Example: Training a convolutional neural network (CNN) on ImageNet to classify general objects.

2. Feature Extraction

- Early layers of the pre-trained model capture general features such as edges, textures, or shapes.

- These layers are often frozen during training on the target task, and their outputs are used as features for the new task.

- Example: Using convolutional layers of a pre-trained CNN to extract features from medical images.

3. Fine-Tuning

- Later layers of the model are retrained on the target task to adapt the model’s knowledge to the new dataset.

- This allows the model to learn task-specific patterns while retaining the general features learned from the source task.

4. Target Task Prediction

- The adapted model predicts on the target dataset using both the extracted features and the fine-tuned layers.

- Example: Classifying skin lesions using a model originally trained on general object images.

5. Evaluation and Iteration

- Performance on the target task is measured using appropriate metrics (accuracy, F1-score, etc.).

- Additional fine-tuning or data augmentation can be applied if necessary to improve results.
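The feature-extraction idea can be illustrated without any deep learning framework. The NumPy sketch below is a toy, not a real pre-trained network: a fixed random projection followed by ReLU (`W_FROZEN`, a hypothetical stand-in) plays the role of the frozen early layers, and only a new softmax classification head is trained on a small synthetic target dataset. All names, sizes, and hyperparameters here are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for frozen pre-trained layers: a fixed projection + ReLU.
# In practice these weights would come from training on the source task.
W_FROZEN = rng.normal(size=(4, 16))

def extract_features(x):
    """Feature extraction: pass inputs through the frozen layers (never updated)."""
    return np.maximum(x @ W_FROZEN, 0.0)

def train_head(features, labels, n_classes, lr=0.1, epochs=300):
    """Train only a new softmax classification head on top of the frozen features."""
    n, d = features.shape
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        logits = features @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n                    # gradient of cross-entropy w.r.t. logits
        W -= lr * features.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

def predict(features, W, b):
    return np.argmax(features @ W + b, axis=1)

# Toy target task: two well-separated clusters (synthetic data, illustration only)
x_target = np.vstack([rng.normal(3.0, 0.5, size=(100, 4)),
                      rng.normal(-3.0, 0.5, size=(100, 4))])
y_target = np.array([0] * 100 + [1] * 100)

frozen_before = W_FROZEN.copy()
feats = extract_features(x_target)
feats = (feats - feats.mean(axis=0)) / (feats.std(axis=0) + 1e-8)  # standardize for stable training
W_head, b_head = train_head(feats, y_target, n_classes=2)
accuracy = (predict(feats, W_head, b_head) == y_target).mean()
```

In a real project the frozen part would be something like a CNN pre-trained on ImageNet, and the fine-tuning step would additionally update some of those frozen weights with a small learning rate.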

Popular Pre-Trained Models and Their Applications

Pre-trained models are widely used in both computer vision and natural language processing because they allow developers to leverage existing knowledge without training from scratch. Here are some of the most commonly used models:

1. Image Classification (Computer Vision)

- VGG-16 / VGG-19

VGG networks are deep convolutional neural networks with 16 or 19 weight layers. They use small 3×3 convolution filters stacked in sequence, which helps the model learn hierarchical features from images. They are widely used for image classification and as feature extractors for transfer learning in domains like medical imaging or fine-grained object recognition.

- ResNet (Residual Networks)

ResNet introduces residual (skip) connections that add a block's input to its output. This eases the vanishing-gradient problem and makes it possible to train very deep networks. ResNet is extremely effective for image recognition, object detection, and other vision tasks requiring deep feature extraction.

- Inception (GoogLeNet)

Inception networks use modules that capture features at multiple scales simultaneously. This multi-scale approach makes them efficient for large-scale image classification and for transfer learning on specialized datasets.

- EfficientNet

EfficientNet scales network width, depth, and input resolution together using a single compound coefficient, achieving high accuracy with fewer parameters. It is especially useful when computational efficiency is important, such as when deploying models on resource-limited devices.
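For reference, the compound scaling rule from the EfficientNet paper (Tan and Le, 2019) can be written as below, where φ is a user-chosen compound coefficient and α, β, γ are constants found by a small grid search on the baseline network (the paper reports α = 1.2, β = 1.1, γ = 1.15):

$$
\text{depth: } d = \alpha^{\phi}, \qquad \text{width: } w = \beta^{\phi}, \qquad \text{resolution: } r = \gamma^{\phi}
$$

$$
\text{subject to } \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2, \qquad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1
$$

Because the FLOPS of a convolutional network grow roughly in proportion to d · w² · r², each increment of φ roughly doubles the compute. With the reported constants, 1.2 × 1.1² × 1.15² ≈ 1.92.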

2. Natural Language Processing (NLP)

- BERT (Bidirectional Encoder Representations from Transformers)

BERT is a transformer-based model pre-trained on a large corpus using masked language modeling. Its bidirectional nature allows it to use context from both the left and the right of a word. It is widely used for tasks like sentiment analysis, question answering, text classification, and named entity recognition.

- GPT (Generative Pre-trained Transformer)

GPT is a transformer-based model trained for text generation. It predicts the next token (a word or word piece) in a sequence, which allows it to generate coherent, contextually relevant text. GPT is commonly used for text generation, summarization, conversational AI, and chatbots.

- RoBERTa

RoBERTa is an optimized version of BERT with better pre-training strategies, including longer training on larger datasets. It often achieves higher accuracy than BERT on similar NLP tasks, making it a strong choice for text classification, Q&A systems, and more.

- DistilBERT

DistilBERT is a smaller, faster, and lighter version of BERT created through knowledge distillation. Its authors report that it retains about 97% of BERT's language-understanding performance while being about 40% smaller and 60% faster, making it suitable for production environments where speed and efficiency matter.
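To make "masked language modeling" concrete, the sketch below shows the input-corruption step used in BERT-style pre-training: roughly 15% of token positions are chosen as prediction targets, and of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. It is a simplified pure-Python illustration (whitespace tokens, independent per-token selection, a hypothetical `mask_tokens` helper), not the tokenizer or data pipeline of any particular library.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Hypothetical helper: return (corrupted_inputs, labels) for masked language modeling.

    labels[i] holds the original token at positions the model must predict,
    and None everywhere else (those positions contribute no loss).
    """
    rng = random.Random(seed)
    inputs, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue                      # not selected as a prediction target
        labels[i] = tok                   # the model must recover the original token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK              # 80%: replace with [MASK]
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels

sentence = "transfer learning reuses knowledge from a model trained on another task".split()
masked_inputs, targets = mask_tokens(sentence, vocab=sentence, seed=1)
```

During pre-training, the model sees `masked_inputs` and is trained to predict the tokens in `targets` at the selected positions, using context from both sides.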

3. Other Notable Models

- YOLO (You Only Look Once): real-time object detection, widely used in surveillance, self-driving cars, and robotics.

- U-Net: designed for image segmentation, especially in medical imaging for tasks like tumor or organ segmentation.

- OpenAI CLIP: a multimodal model that connects images and text, useful for zero-shot image classification, image-text retrieval, and cross-modal understanding.

Implementing Transfer Learning with a Lightweight Pre-Trained Model

We can use MobileNetV3 Small, a compact convolutional neural network pre-trained on ImageNet. It’s fast, efficient, and perfect for demonstrating transfer learning on smaller datasets.

1. Import Libraries

```python
import tensorflow as tf
from tensorflow.keras.applications import MobileNetV3Small
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.preprocessing.image import ImageDataGenerator
```

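- TensorFlow/Keras is used for simplicity.

- `MobileNetV3Small` is the lightweight pre-trained model.

- `ImageDataGenerator` helps load and augment images. Recent TensorFlow documentation marks it as not recommended for new code, suggesting `tf.keras.utils.image_dataset_from_directory` combined with preprocessing layers instead.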

2. Load Pre-Trained Model

```python
# Load MobileNetV3 Small without its top (classification) layers
base_model = MobileNetV3Small(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Freeze the base model so pre-trained features are not updated
base_model.trainable = False
```

- `include_top=False` removes the original classification layers.

- Freezing the base model ensures we only train our new layers initially.

3. Add Custom Layers

```python
x = base_model.output
x = GlobalAveragePooling2D()(x)  # Average each feature map down to a single value
x = Dense(64, activation='relu')(x)
predictions = Dense(5, activation='softmax')(x)  # Assumes 5 classes

# Create the final model
model = Model(inputs=base_model.input, outputs=predictions)
```

- `GlobalAveragePooling2D` reduces each feature map to a single value.

- Dense layers are added to adapt the model to your specific classification task. The size of the final layer (5 here) must match the number of classes in your dataset.
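To see what the new head computes, here is a framework-free NumPy sketch of the same forward pass: global average pooling over the spatial dimensions, a 64-unit ReLU layer, and a 5-way softmax. The weights are random placeholders rather than trained values, and the 7×7×576 feature-map shape is an illustrative assumption, not read from the Keras model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative backbone output: a batch of 2 images, each a 7x7 grid of 576 feature maps
feature_maps = rng.random((2, 7, 7, 576))

# GlobalAveragePooling2D: average each feature map over height and width -> (batch, channels)
pooled = feature_maps.mean(axis=(1, 2))

# Dense(64, activation='relu') with placeholder (untrained) weights
w1, b1 = rng.normal(scale=0.05, size=(576, 64)), np.zeros(64)
hidden = np.maximum(pooled @ w1 + b1, 0.0)

# Dense(5, activation='softmax'): one probability per class, each row sums to 1
w2, b2 = rng.normal(scale=0.05, size=(64, 5)), np.zeros(5)
logits = hidden @ w2 + b2
exp = np.exp(logits - logits.max(axis=1, keepdims=True))  # subtract max for stability
probs = exp / exp.sum(axis=1, keepdims=True)
```

Only `w1`, `b1`, `w2`, and `b2` correspond to the layers trained during the feature-extraction phase; the backbone that produces `feature_maps` stays frozen.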

4. Compile the Model

```python
model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])
```

- Adam optimizer is efficient and works well for transfer learning tasks.

- Categorical crossentropy is used for multi-class classification with one-hot labels, which is what `class_mode='categorical'` produces in the data generators.
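As a small worked example of the loss: with one-hot labels, categorical cross-entropy for one image is the negative log of the probability the model assigns to the true class, averaged over the batch. The NumPy sketch below uses a hypothetical helper and made-up probabilities. If your labels were integers instead of one-hot vectors, Keras's `sparse_categorical_crossentropy` loss would be the matching choice.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over the batch of -sum(y_true * log(y_pred)) for one-hot y_true."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)  # avoid log(0)
    return float(np.mean(-np.sum(y_true * np.log(y_pred), axis=1)))

y_true = np.array([[0, 1, 0, 0, 0],
                   [0, 0, 0, 1, 0]], dtype=float)    # one-hot labels, 5 classes
y_pred = np.array([[0.05, 0.70, 0.10, 0.10, 0.05],
                   [0.10, 0.10, 0.20, 0.50, 0.10]])  # softmax outputs
loss = categorical_crossentropy(y_true, y_pred)
# loss = (-ln 0.70 - ln 0.50) / 2 ≈ 0.525
```

Only the probability of the true class matters for each image, so the loss shrinks as the model grows more confident in the correct answer.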

5. Prepare the Data

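```python
# No rescale=1./255 here: Keras's MobileNetV3 models include their own rescaling
# layer by default (include_preprocessing=True) and expect pixel values in [0, 255].
train_datagen = ImageDataGenerator(
    rotation_range=20,
    width_shift_range=0.2,
    height_shift_range=0.2,
    horizontal_flip=True
)

val_datagen = ImageDataGenerator()

# flow_from_directory expects one subfolder per class inside each directory
train_generator = train_datagen.flow_from_directory(
    'data/train',
    target_size=(224, 224),
    batch_size=32,
    class_mode='categorical'
)

validation_generator = val_datagen.flow_from_directory(
    'data/val',
    target_size=(224, 224),
    batch_size=32,
    class_mode='categorical'
)
```

- Pixel values are left in the [0, 255] range because MobileNetV3Small normalizes its inputs internally. Adding `rescale=1./255` would normalize the images twice. Other backbones may expect different preprocessing, so check each model's documentation.

- Data augmentation (rotation, shifts, flips) improves generalization, especially on small datasets.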
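For intuition about what two of these augmentations do to an image array, here is a simplified NumPy sketch of a horizontal flip and a horizontal shift whose vacated pixels are filled by repeating the edge column, similar in spirit to `ImageDataGenerator`'s default `fill_mode='nearest'`. The helper names are hypothetical. The real generator applies random affine transforms that also include rotation and vertical shifts.

```python
import numpy as np

def horizontal_flip(image):
    """Mirror a (height, width, channels) image left to right."""
    return image[:, ::-1, :]

def shift_width(image, dx):
    """Shift an image dx pixels to the right (negative dx shifts left),
    filling vacated columns with the nearest edge column."""
    width = image.shape[1]
    pad = abs(dx)
    padded = np.pad(image, ((0, 0), (pad, pad), (0, 0)), mode="edge")
    start = pad - dx
    return padded[:, start:start + width, :]

def random_augment(image, rng, max_shift_frac=0.2):
    """Flip with probability 0.5, then shift by up to max_shift_frac of the width."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    max_dx = int(image.shape[1] * max_shift_frac)
    dx = int(rng.integers(-max_dx, max_dx + 1))
    return shift_width(image, dx)

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(224, 224, 3)).astype(np.float32)  # stand-in image
augmented = random_augment(img, rng)
```

Because each epoch draws new random flips and shifts, the model rarely sees exactly the same pixels twice, which is what helps it generalize on small datasets.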

6. Train the Model (Feature Extraction)

```python
model.fit(
    train_generator,
    epochs=10,
    validation_data=validation_generator
)
```

- Initial training only updates the new layers we added.

- The base model remains frozen.

7. Fine-Tuning (Optional)

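```python
# Unfreeze the base model, then re-freeze everything except its last 20 layers
base_model.trainable = True
for layer in base_model.layers[:-20]:  # Freeze earlier layers, fine-tune the last 20
    layer.trainable = False

# Keep BatchNormalization layers in inference mode while fine-tuning,
# as the Keras transfer learning guide recommends
for layer in base_model.layers:
    if isinstance(layer, tf.keras.layers.BatchNormalization):
        layer.trainable = False

# Recompile with a lower learning rate (changes to `trainable` take effect on compile)
model.compile(optimizer=tf.keras.optimizers.Adam(1e-5),
              loss='categorical_crossentropy',
              metrics=['accuracy'])

# Continue training
model.fit(
    train_generator,
    epochs=5,
    validation_data=validation_generator
)
```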

- Fine-tuning adapts the model to the new dataset while retaining general features.

- A smaller learning rate prevents destroying previously learned features.

Advantages and Limitations of Transfer Learning

Advantages

Saves Time and Resources

- Pre-trained models reduce the need for long training cycles and large datasets, which is especially useful for small projects or limited computing power.

Improved Performance

- Leveraging learned features from large datasets often results in higher accuracy than training from scratch, particularly on specialized tasks.

Works Well with Limited Data

- Transfer learning allows models to generalize even when the target dataset is small, thanks to knowledge transferred from larger datasets.

Flexibility

- Pre-trained models can be adapted for multiple tasks, such as classification, detection, or segmentation, making them versatile for different domains.

Limitations

Domain Mismatch

- If the source and target tasks are too different, transfer learning may not help and can even hurt performance.

Limited Control Over Features

- When the early layers of a pre-trained model stay frozen, they cannot adapt and may not capture features specific to the new task.

Computational Resources

- While lighter than training from scratch, some pre-trained models (e.g., large transformers) can still require significant GPU memory.

Overfitting Risk During Fine-Tuning

- Fine-tuning on a very small dataset may lead the model to overfit the target task if not done carefully.

Tips for Using Transfer Learning Effectively

Choose the Right Pre-Trained Model

- Match the source task and dataset size to your target task. For image tasks with limited compute, use MobileNet or ResNet18; for NLP tasks, BERT works well, and DistilBERT is a good option when speed matters.

Start with Feature Extraction

- Freeze the base layers initially and train only the top layers. This reduces training time and prevents destroying useful pre-trained features.

Fine-Tune Carefully

- Unfreeze only the last few layers and use a small learning rate to adapt the model to your task without losing general knowledge.

Use Data Augmentation

- Augment your dataset to improve generalization, especially if the target dataset is small.

Monitor Performance Metrics

- Track validation loss and accuracy during fine-tuning to prevent overfitting.
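A common way to act on the validation curve is early stopping: stop training once validation loss has not improved for a set number of epochs (the "patience") and keep the best weights. Keras provides this as the `tf.keras.callbacks.EarlyStopping` callback (for example with `monitor='val_loss'`, `patience=3`, `restore_best_weights=True`, passed via `model.fit(..., callbacks=[...])`). The framework-free sketch below shows the underlying logic on a made-up loss history; the helper name and the numbers are illustrative.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 0-indexed, for a sequence of validation losses.

    Training stops after `patience` consecutive epochs without an improvement
    of more than `min_delta` over the best loss seen so far.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Illustrative history: validation loss improves, then rises as the model overfits
history = [0.90, 0.70, 0.58, 0.55, 0.57, 0.60, 0.63, 0.66]
stop_epoch, best_epoch = early_stopping_epoch(history, patience=3)
# stop_epoch == 6, best_epoch == 3
```

Restoring the weights from `best_epoch` rather than keeping the final ones is what `restore_best_weights=True` does in the Keras callback.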

Conclusion / Key Takeaways

- Transfer learning is a powerful technique that allows AI models to learn faster, require less data, and often achieve higher performance.

- Lightweight pre-trained models like MobileNetV3 Small (for vision) and DistilBERT (for NLP) make transfer learning practical even with modest computing resources.

- Understanding the steps, benefits, and limitations ensures effective implementation and helps avoid common pitfalls.

- With careful model selection, proper fine-tuning, and data augmentation, transfer learning can significantly accelerate AI development and deployment.


“The greatest obstacle to discovery is not ignorance — it is the illusion of knowledge” – Daniel J. Boorstin
