Author(s): Phrugsa Limbunlom (Gift)

Originally published on Towards AI.

Enhancing visual-language reasoning through reinforcement learning optimization using a rented GPU from Vast.ai.

Table of Contents

· Introduction

· Reinforcement Learning in Language models

· Group Relative Policy Optimization (GRPO)

· The intuition behind the GRPO objective function

· Practical Implementation

· Rent a GPU on Vast.AI

· Code Walkthrough

· Practical Applications

· Conclusion

Introduction

Vision-Language Models (VLMs) lie at the intersection of computer vision and natural language processing. They can understand and generate responses based on both visual and textual inputs.

Group Relative Policy Optimization (GRPO) is a reinforcement learning method for post-training language models, introduced in DeepSeek's DeepSeekMath paper and later used to train DeepSeek-R1. It is used to further train foundation models (post-training), and it strengthens a model's reasoning by having it produce reasoning traces that reveal its thought process (the text between the `<think>` and `</think>` tags).

Post-training a model with GRPO makes it easier to see how the model arrives at its answers, which is crucial for improving and demonstrating model transparency.

In this article, we will go through the intuition behind the algorithm and then implement the training with the TRL library from Hugging Face on a rented GPU.

Reinforcement Learning in Language models

Group Relative Policy Optimization (GRPO) can be understood as a type of reinforcement learning method applied to language models and, in this context, to vision-language models (VLMs). To understand how GRPO works, we first need to review the fundamental concepts of reinforcement learning (RL).

In a typical reinforcement learning (RL) framework, the key components include the agent, state, action, reward, policy, and trajectory. These elements can be mapped onto a language model as follows:

Agent: The agent corresponds to the model itself, which learns to make decisions.

State: The state represents the input or context provided to the model (for example, a text prompt or an image-text pair).

Action: The action is the token or output the model generates (such as a response, prediction, or reasoning step).

Reward: The reward is feedback that scores how appropriate or high-quality the model's output is. It is often based on predefined criteria or human judgment.

Policy: The policy defines how the model chooses actions based on the current state. It is what gets trained during optimization, and it is the model's strategy for producing responses.

Trajectory: The trajectory is the sequence of states, actions, and rewards over time. For a language model, one trajectory corresponds to one generated response, built token by token.

By aligning these RL components with the structure of a language or vision-language model, GRPO provides a way to guide model behavior through feedback that helps it generate more accurate, interpretable, and human-aligned outputs.

Group Relative Policy Optimization (GRPO)

Let’s look at how GRPO works in a nutshell. Essentially, the algorithm teaches the model to align with a specific goal, which is expressed through the rewards we define.

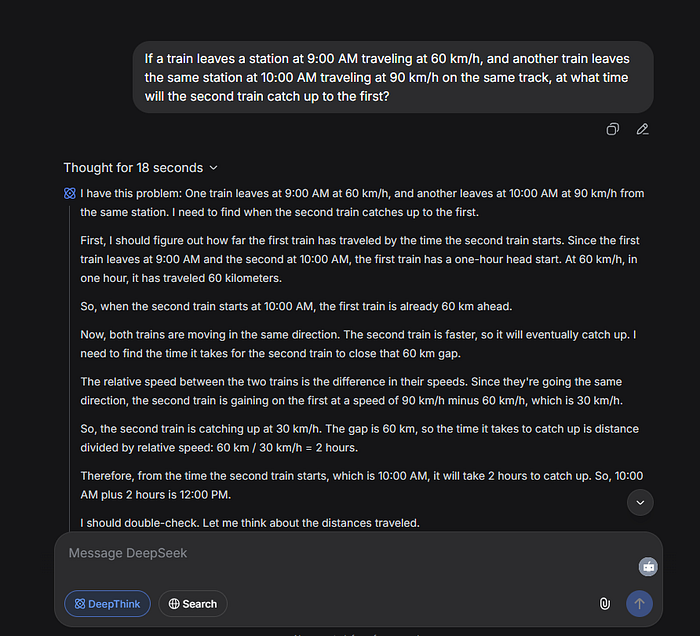

When a user asks a reasoning model a math question, the model produces both its thinking process and a final answer.

The thinking process comes from the model emitting its reasoning between the thinking tokens (`<think>` and `</think>`) before it gives the final answer.

To make the model respond in this format, it has to learn two things: how to produce the right format and how to arrive at the correct response. We therefore encode both criteria, format and accuracy, in the reward function, and the model learns to optimize its policy to obtain the highest reward.

The intuition behind the GRPO objective function

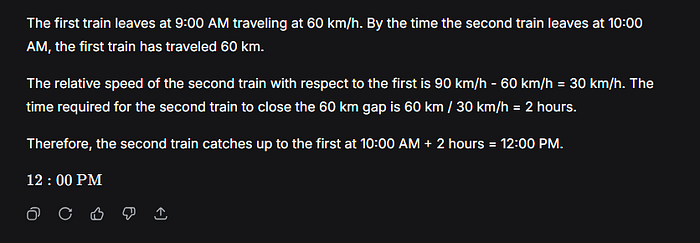

As its name suggests, Group Relative Policy Optimization optimizes the policy based on relative comparisons within a group. This is the core idea of GRPO: the policy model, which is the model being trained, produces a group of outputs for the same prompt. These outputs are used to calculate the advantage values, which are then used to optimize the model.

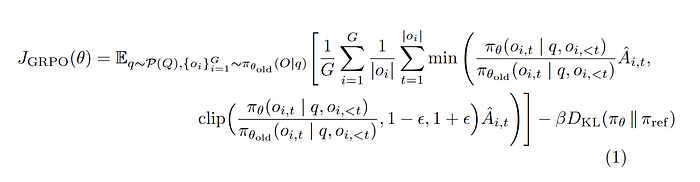

It can be broken down as follows:

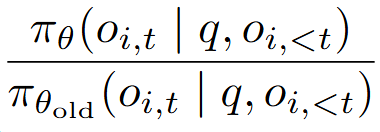

This formula calculates the ratio of the new policy (the model after the update) to the old policy (the model before the update), multiplied by the advantage value of each token in an output within a group.

To avoid confusion, keep in mind that the policy model is the model used to generate the outputs. Those outputs are then scored to give the model feedback on how well they meet the reward criteria.

In GRPO, the policy model generates multiple outputs as a group. For each token of each output (a token is roughly one word or word piece), a policy ratio and an advantage value are computed as described above. The final objective is the average of the product of these two values (ratio and advantage).
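For readers who cannot see the equation figures, the objective described here can be written out as follows. This is a transcription of the GRPO objective as it appears in the DeepSeekMath paper, not a copy of the article's images; the symbols β (the weight of the KL penalty) and R_i (the reward of output i) are notation introduced here, and the clipping and KL terms are the ones discussed later in this section.

```latex
\[
\mathcal{J}_{\mathrm{GRPO}}(\theta) =
\mathbb{E}_{q \sim P(Q),\; \{o_i\}_{i=1}^{G} \sim \pi_{\theta_{\mathrm{old}}}(O \mid q)}
\left[
\frac{1}{G} \sum_{i=1}^{G} \frac{1}{|o_i|} \sum_{t=1}^{|o_i|}
\Big(
\min\big( r_{i,t}(\theta)\, \hat{A}_{i,t},\;
\mathrm{clip}\big(r_{i,t}(\theta),\, 1-\epsilon,\, 1+\epsilon\big)\, \hat{A}_{i,t} \big)
- \beta\, \mathbb{D}_{\mathrm{KL}}\big[\pi_{\theta} \,\|\, \pi_{\mathrm{ref}}\big]
\Big)
\right]
\]

\[
r_{i,t}(\theta) =
\frac{\pi_{\theta}(o_{i,t} \mid q,\, o_{i,<t})}
     {\pi_{\theta_{\mathrm{old}}}(o_{i,t} \mid q,\, o_{i,<t})},
\qquad
\hat{A}_{i,t} =
\frac{R_i - \mathrm{mean}(\{R_1, \dots, R_G\})}
     {\mathrm{std}(\{R_1, \dots, R_G\})}
\]
```

Here r_{i,t} is the policy ratio for token t of output i, and every token of output i shares the same advantage because the reward is assigned to the output as a whole.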

Suppose the user inputs the question q = “Explain Newton’s first law”. We sample outputs from the old policy model. With G = 3 samples, suppose the outputs we receive are as follows.

o1 = An object remains at rest unless…

- o1,1 = Token 1 = An

- o1,2 = Token 2 = object

- o1,3 = Token 3 = remains

o2 = If no external force acts, motion stays…

- o2,1 = Token 1 = If

- o2,2 = Token 2 = no

- o2,3 = Token 3 = external

o3 = A body continues its state of rest or motion…

- o3,1 = Token 1 = A

- o3,2 = Token 2 = body

- o3,3 = Token 3 = continues

Next, let’s look at how the policy ratio and the advantage are calculated.

The policy ratio is the ratio between the probabilities assigned by the new policy model (after updating its parameters) and the old policy model (before the update) at a given time step t.

In this context, the time step t corresponds to the token generated at that position, since the model generates one token at a time.

The output of the policy model is the log probability of each token generated at each position. At every time step, the model predicts a probability distribution over all possible next tokens, and we read off the probability of the token that was actually generated at time t, conditioned on the query q and the previously generated tokens o_i,<t.

The policy ratio measures how much the new model changes its probability of producing that token compared to before.

- If the ratio = 1 → There is no change.

- If the ratio > 1 → The new policy increases the probability that the token will be generated.

- If the ratio < 1 → The new policy decreases the probability that the token will be generated.
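The three cases above can be checked with a few lines of Python. This is a minimal sketch, assuming the model gives us per-token log probabilities; the function name `policy_ratio` and the probability values are made up for illustration.

```python
import math


def policy_ratio(logp_new: float, logp_old: float) -> float:
    """Ratio pi_new(token) / pi_old(token), computed from log probabilities."""
    return math.exp(logp_new - logp_old)


# Hypothetical probability the old policy assigned to one sampled token.
old_prob = 0.20

ratio_same = policy_ratio(math.log(0.20), math.log(old_prob))  # no change
ratio_up = policy_ratio(math.log(0.25), math.log(old_prob))    # token became more likely
ratio_down = policy_ratio(math.log(0.10), math.log(old_prob))  # token became less likely

print(ratio_same, ratio_up, ratio_down)
```

Working in log space and exponentiating the difference is numerically safer than dividing two very small probabilities.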

The advantage quantifies how well a particular action (a token, in the context of a language model) performs compared to the alternatives. It is calculated as the difference between the reward of a given output and the average reward of all outputs in the group, scaled by the standard deviation of the rewards in that group of G outputs.

Put simply, in GRPO the advantage determines whether the probability of generating a particular token at a particular position should go up or down, based on the reward of its output relative to the rewards of the other outputs.

As such, multiplying the advantage with the policy ratio indicates how much each token contributes to the overall quality of an output, as measured by its reward relative to other outputs.

If an output receives a high reward, the model increases the probability of the tokens that produced it. Conversely, if the reward is low, the model decreases their probabilities.

Formally, it estimates how much better o_i,t performed than expected, given its context.
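The group-relative advantage can be sketched in plain Python. The reward values below are hypothetical, the small `eps` term is an assumption added to avoid division by zero, and the population standard deviation is used here for simplicity; a training library may use a different estimator.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-4) -> List[float]:
    """Normalize each reward by the mean and standard deviation of its group."""
    group_mean = mean(rewards)
    group_std = pstdev(rewards)  # population std (an assumption for this sketch)
    return [(r - group_mean) / (group_std + eps) for r in rewards]


# Hypothetical total rewards (format + accuracy) for G = 3 sampled outputs.
rewards = [2.0, 1.0, 0.0]
advantages = group_relative_advantages(rewards)
print(advantages)  # positive for the best output, zero for the average one, negative for the worst

# If every output gets the same reward, no output is preferred over another.
flat = group_relative_advantages([1.0, 1.0, 1.0])
print(flat)
```

Because the reward is assigned per output, every token of a given output receives that output's advantage.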

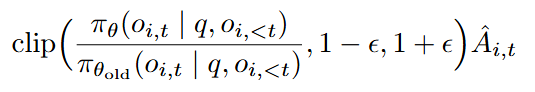

The policy ratio is not used directly after it is computed. According to the equation, the policy ratio is limited to the range [1−ϵ, 1+ϵ]. If it goes too high, it is clipped to 1+ϵ. Conversely, if it goes too low, it is clipped to 1−ϵ. This prevents the model from making large, unstable updates to token probabilities that could ruin the policy.

The objective then takes the minimum of two products: the unclipped ratio times the advantage, and the clipped ratio times the advantage.
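The clipping and the minimum can be illustrated for a single token as follows. This is a sketch only: the function name `clipped_term` is hypothetical, and ϵ = 0.2 is an assumed value chosen for the example.

```python
def clipped_term(ratio: float, advantage: float, epsilon: float = 0.2) -> float:
    """Per-token surrogate: min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A)."""
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)


# Positive advantage: the gain from raising the probability is capped at 1 + epsilon.
capped_gain = clipped_term(ratio=1.5, advantage=1.0)

# Positive advantage, ratio below the range: the unclipped (smaller) value is kept.
small_gain = clipped_term(ratio=0.5, advantage=1.0)

# Negative advantage: the minimum keeps the more pessimistic of the two products.
penalty_high = clipped_term(ratio=1.5, advantage=-1.0)
penalty_low = clipped_term(ratio=0.5, advantage=-1.0)

print(capped_gain, small_gain, penalty_high, penalty_low)
```

Taking the minimum means clipping can only make the objective more conservative, never more optimistic, which is what keeps a single update from moving the policy too far.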

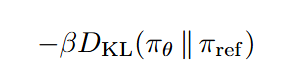

Apart from that, the Kullback–Leibler (KL) divergence is used to penalize the policy model πθ (the model being updated) for deviating too far from the reference model πref (the frozen base model). The policy model updates its parameters based on the rewards it receives in order to improve performance, while the KL term lowers the objective if the model drifts too far from its original behavior. This prevents the model from generating degenerate text just to collect a high reward.

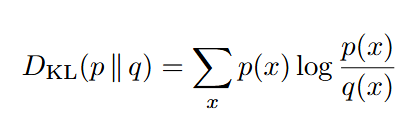

The KL divergence is calculated between two probability distributions p(x) and q(x). It measures how much information is “lost” when q is used to approximate p.

Applied to GRPO, it tells us how much the output (token) distribution of the current model differs from that of the reference model at each step.
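Both views of the KL term can be sketched numerically. The first function is the textbook KL divergence between two small discrete distributions; the second is the per-token estimator used in the DeepSeekMath formulation of GRPO, π_ref/π_θ − log(π_ref/π_θ) − 1. The toy three-token vocabulary and its probabilities are made up for illustration.

```python
import math
from typing import List


def exact_kl(p: List[float], q: List[float]) -> float:
    """KL(p || q) for two discrete distributions over the same tokens."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def per_token_kl_estimate(logp_policy: float, logp_ref: float) -> float:
    """Non-negative per-token estimate: ref/policy - log(ref/policy) - 1."""
    log_ratio = logp_ref - logp_policy
    return math.exp(log_ratio) - log_ratio - 1.0


# Hypothetical next-token distributions over a three-token vocabulary.
policy = [0.7, 0.2, 0.1]
reference = [0.5, 0.3, 0.2]

kl_value = exact_kl(policy, reference)
token_penalty = per_token_kl_estimate(math.log(policy[0]), math.log(reference[0]))
no_drift = per_token_kl_estimate(math.log(0.5), math.log(0.5))

print(kl_value, token_penalty, no_drift)
```

The estimator only needs the log probability of the sampled token under each model, so it avoids summing over the whole vocabulary at every position.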

Practical Implementation

Let’s explore how to implement GRPO for Vision-Language Models (VLMs) using the vlm-grpo repository. This setup integrates GRPO with LoRA fine-tuning to enable efficient training. We will use the Transformer Reinforcement Learning (TRL) library from Hugging Face and train the Qwen2.5-VL model on a rented GPU from Vast.AI. As a reference, we will follow an example from the Hugging Face documentation on GRPO training for VLMs.

Rent a GPU on Vast.AI

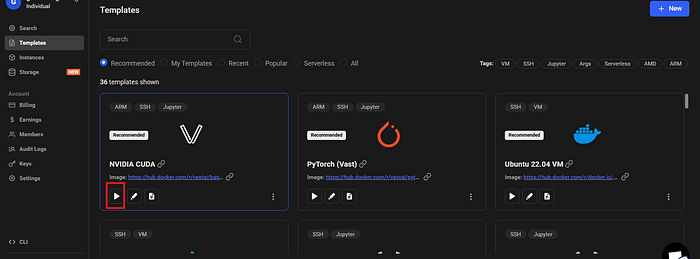

1. Initiate an instance

First, we have to start an instance on Vast.AI. To do so, create an account and choose a template from the available templates. In this example, I chose NVIDIA CUDA.

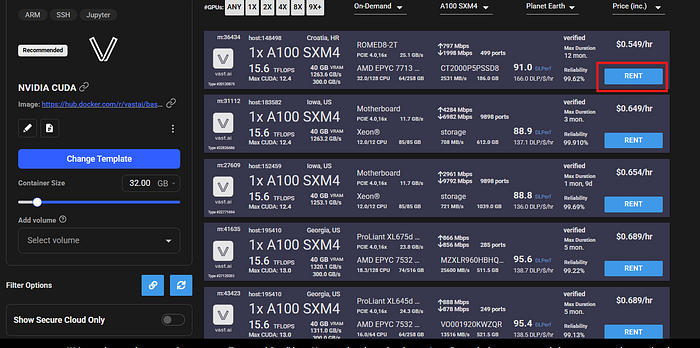

Then, select the instance that you want to rent. I chose the cheapest one with a single A100 GPU.

Note: Delete the instance when it is no longer in use to avoid unnecessary credit charges.

2. Connect to a remote server

After starting the instance, create an SSH key pair on your local machine with the following command.

```bash
ssh-keygen -t ed25519 -C "your_email@example.com"
```

Then, display the generated public key and copy it.

```bash
cat ~/.ssh/id_ed25519.pub
```

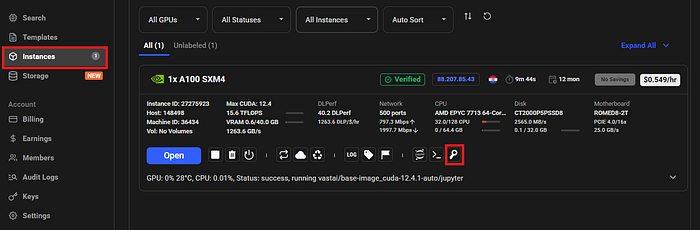

Paste the key into the Vast.ai interface for the instance you just created.

- Instances > Add SSH key > CLOSE

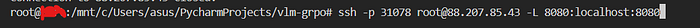

After that, connect to the remote server over SSH by running the command copied from the Vast.ai interface (run it from a Linux terminal).

When your Vast.ai container starts, you will see a welcome message in the terminal. It indicates that your session is running.

You will also notice that a Conda (or uv) virtual environment has been activated automatically. This means your environment is ready for running Python commands.


Code Walkthrough

1. Set up the project

After connecting to the remote server, clone the repository and go to the project directory.

```bash
git clone https://github.com/phrugsa-limbunlom/vlm-grpo.git
cd vlm-grpo
```

Then, install all required libraries and PyTorch with CUDA support.

```bash
pip install -r requirements.txt
pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu126
```

The key dependencies in requirements.txt include:

- torch and transformers for model handling

- peft for LoRA fine-tuning

- trl for the GRPO implementation

- math-verify and latex2sympy2-extended for mathematical verification

Lastly, log in to your Hugging Face account with your access token so that the model can be downloaded from Hugging Face.

```bash
huggingface-cli login
```

2. Project Structure

```text
vlm-grpo/
├── src/
│   ├── main.py             # Main training and evaluation script
│   ├── vlm_grpo.py         # VLM-GRPO trainer implementation
│   ├── custom_datasets.py  # Dataset loading and transformation utilities
│   ├── reward.py           # Reward function implementations
│   └── constants.py        # Configuration constants
├── requirements.txt        # Python dependencies
└── README.md
```

The project consists of the main script for training and evaluating the model, plus modules for the GRPO trainer, dataset loading, reward functions, and configuration constants. We will go through each of them.

1. Variables Configuration

All of the required constants are defined in constants.py. These include the system prompt, the training configuration, and the model configuration.

```python
class SystemPrompts:
    """System prompts for different VLM model configurations."""

    SYSTEM_PROMPT = (
        "A conversation between User and Assistant. The user asks a question, and the Assistant solves it. The assistant "
        "first thinks about the reasoning process in the mind and then provides the user with the answer. The reasoning "
        "process and answer are enclosed within <think> </think> and <answer> </answer> tags, respectively, i.e., "
        "<think> reasoning process here </think><answer> answer here </answer>"
    )
```

The system prompt instructs the model to generate its thinking process and its answer in the `<think> ... </think><answer> ... </answer>` format.

```python
class TrainingConfig:
    """Default training configuration parameters."""

    LEARNING_RATE = 1e-5
    EPOCHS = 1
    BATCH_SIZE = 2
    MAX_LENGTH = 1024
    NUM_GENERATIONS = 2
    MAX_PROMPT_LENGTH = 2048
    LOG_STEPS = 10
    SAVE_STEPS = 10
```

The training configuration contains all required parameters needed to train the model. The important parameters include:

- MAX_LENGTH = Maximum length of generated completions (Number of tokens per one output)

- NUM_GENERATIONS = Number of generations per prompt for reward calculation (Number of outputs needed within a group)

- MAX_PROMPT_LENGTH = Maximum length of input prompts

```python
class ModelConfig:
    """Model and dataset configuration constants."""

    MODEL_ID = "Qwen/Qwen2.5-VL-3B-Instruct"
    DATASET_ID = 'lmms-lab/multimodal-open-r1-8k-verified'
    OUTPUT_DIR = "Qwen2.5-VL-3B-Instruct-Thinking"
    USER_NAME = "YOUR_USER_NAME"
    TRAINED_MODEL_ID = USER_NAME + "/" + OUTPUT_DIR
```

Other configurations include parameters such as the model name, dataset name, and output directory (model ID).

In this example, we use Qwen2.5-VL-3B-Instruct as the Vision-Language Model (VLM) and the lmms-lab/multimodal-open-r1-8k-verified dataset, which contains both images and text for multimodal reasoning tasks.

2. Reward Function Implementation

The system implements two primary reward mechanisms in reward.py, as follows.

Format Reward

Ensures outputs follow the expected `<think> ... </think><answer> ... </answer>` format.

```python
import re
from typing import Any, List, Optional


class Reward:
    @staticmethod
    def format_reward(completions: List[str], **kwargs: Any) -> List[float]:
        """
        Calculate format-based rewards for completions.

        Evaluates whether completions follow the expected format with
        <think> and <answer> tags.

        Args:
            completions: List of completion strings to evaluate
            **kwargs: Additional keyword arguments (unused)

        Returns:
            List[float]: List of reward values where 1.0 indicates correct format
            and 0.0 indicates incorrect format

        Example:
            >>> Reward.format_reward(["<think>\\nreasoning\\n</think>\\n<answer>\\nsolution\\n</answer>"])
            [1.0]
        """
        # Each tag must sit on its own line; re.DOTALL lets ".*?" span multiple lines.
        pattern: str = r"^<think>\n.*?\n</think>\n<answer>\n.*?\n</answer>$"
        matches: List[Optional[re.Match]] = [
            re.match(pattern, content, re.DOTALL | re.MULTILINE) for content in completions
        ]
        rewards: List[float] = [1.0 if match else 0.0 for match in matches]
        return rewards
```
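To see what the format reward accepts and rejects, here is a small standalone sketch. It assumes the tags are `<think>` and `<answer>` with each tag on its own line, as the regular expression requires; the sample completions are invented for illustration.

```python
import re
from typing import List

# Assumed pattern: each tag on its own line, reasoning first, then the answer.
PATTERN = r"^<think>\n.*?\n</think>\n<answer>\n.*?\n</answer>$"


def format_reward(completions: List[str]) -> List[float]:
    """Return 1.0 for completions that match the expected layout, else 0.0."""
    return [
        1.0 if re.match(PATTERN, text, re.DOTALL | re.MULTILINE) else 0.0
        for text in completions
    ]


well_formed = "<think>\n2 + 2 equals 4.\n</think>\n<answer>\n4\n</answer>"
single_line = "<think>2 + 2 equals 4.</think><answer>4</answer>"
no_tags = "The answer is 4."

scores = format_reward([well_formed, single_line, no_tags])
print(scores)
```

Note that the single-line variant is rejected even though it contains both tags, because the pattern insists on newlines around the tag contents. A completion can therefore be correct and still earn no format reward.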

Mathematical Accuracy Reward

Verifies mathematical correctness between the generated output and the ground truth.

The verification works in two ways.

- If the content is written in LaTeX (e.g., mathematical equations), it uses a math parser (parse(...)) and a verifier (verify(gold_parsed, answer_parsed)) to check for mathematical equivalence rather than simple text matching.

- If the content is not in LaTeX format, it falls back to a case-insensitive string comparison.

The method returns a list of reward values between 0 and 1, one for each comparison (or None if verification fails).

```python
from typing import Any, Dict, List, Optional, Union

from latex2sympy2_extended import NormalizationConfig
from math_verify import LatexExtractionConfig, parse, verify


class Reward:
    @staticmethod
    def accuracy_reward(
        completions: List[Union[str, List[Dict[str, str]]]],
        solution: List[str],
        **kwargs: Any,
    ) -> List[Optional[float]]:
        """
        Calculate accuracy-based rewards for completions against solutions.

        Evaluates mathematical accuracy by parsing LaTeX expressions and verifying
        mathematical equivalence. Falls back to string comparison for non-LaTeX content.

        Args:
            completions: List of completion data structures or strings
            solution: List of solution strings to compare against
            **kwargs: Additional keyword arguments (unused)

        Returns:
            List[Optional[float]]: List of reward values between 0.0 and 1.0,
            or None if verification fails

        Note:
            - For LaTeX content: Uses math-verify to parse and verify mathematical equivalence
            - For non-LaTeX content: Performs case-insensitive string comparison
            - Returns None when mathematical verification fails due to parsing errors
        """
        rewards: List[Optional[float]] = []

        for completion, sol in zip(completions, solution):
            try:
                # Check if the solution is a LaTeX-format mathematical representation
                gold_parsed: List = parse(sol, extraction_mode="first_match")
            except Exception:
                gold_parsed = []

            if len(gold_parsed) != 0:
                try:
                    answer_parsed: List = parse(
                        completion,
                        extraction_config=[
                            LatexExtractionConfig(
                                normalization_config=NormalizationConfig(
                                    nits=False,
                                    malformed_operators=False,
                                    basic_latex=True,
                                    boxed="all",
                                    units=True,
                                ),
                                boxed_match_priority=0,
                                try_extract_without_anchor=False,
                            )
                        ],
                        extraction_mode="first_match",
                    )
                    reward: Optional[float] = float(verify(gold_parsed, answer_parsed))
                except Exception as e:
                    print(f"verify failed: {e}, answer: {completion}, gold: {sol}")
                    reward = None
            else:
                # Handle case where completion might be a string or a list
                completion_text: str = completion if isinstance(completion, str) else str(completion)
                reward = float(completion_text.strip().lower() == sol.strip().lower())

            rewards.append(reward)

        return rewards
```

3. Data Loading

The dataset is loaded in custom_datasets.py using the Hugging Face datasets library. Only the first 5% of the training split is loaded, and it is then split into 80% for training and 20% for testing.

```python
from datasets import load_dataset, Dataset as HFDataset, DatasetDict


def load_data(self) -> None:
    """
    Load the dataset and split it into training and testing sets.

    Loads the first 5% of the dataset and splits it with 80% training
    and 20% testing using a fixed random seed for reproducibility.
    """
    dataset: HFDataset = load_dataset(self.dataset_id, split='train[:5%]')

    self.split_dataset = dataset.train_test_split(test_size=0.2, seed=42)
```

4. Data Transformation

Convert the data into the prompt format required by the VLM model, including the system prompt, image, and text. The function then returns the formatted prompt along with the corresponding image keys.

```python
def _make_conversation(self, example: Dict[str, Any]) -> Dict[str, Any]:
    """
    Convert a single example into conversation format.

    Creates a conversation structure with system prompt, user input (image + problem),
    and applies the chat template for the specific model.

    Args:
        example: A single dataset example containing 'problem' and 'image' fields

    Returns:
        dict: Transformed example with 'prompt' and 'image' keys
    """
    conversation: List[Dict[str, Any]] = [
        {"role": "system", "content": SystemPrompts.SYSTEM_PROMPT},
        {
            "role": "user",
            "content": [
                {"type": "image"},
                {"type": "text", "text": example["problem"]},
            ],
        },
    ]

    prompt: str = self.processor.apply_chat_template(conversation, add_generation_prompt=True)

    return {
        "prompt": prompt,
        "image": example["image"]
    }
```

5. GRPO Setup

In vlm_grpo.py, the GRPO configuration and the GRPO trainer are set up along with LoRA for parameter-efficient training. The setup is shown below.

Set up LoRA using LoraConfig and apply it to the model using get_peft_model.

```python
from peft import LoraConfig, get_peft_model, PeftModel


def config_lora(self, task_type: str, r: int, alpha: int, dropout: float) -> PeftModel:
    """
    Configure and apply LoRA to the model.

    Args:
        task_type (str): The type of task (e.g., "CAUSAL_LM" for causal language modeling)
        r (int): The rank of the low-rank matrices
        alpha (int): The scaling factor for LoRA weights
        dropout (float): Dropout probability for LoRA layers

    Returns:
        The model with LoRA applied
    """
    logger.info(f"Configuring LoRA with r={r}, alpha={alpha}, dropout={dropout}")
    logger.info("Target modules: ['q_proj', 'v_proj']")

    lora_config: LoraConfig = LoraConfig(
        task_type=task_type,
        r=r,
        lora_alpha=alpha,
        lora_dropout=dropout,
        target_modules=["q_proj", "v_proj"],
    )

    logger.info("Applying LoRA configuration to model...")
    self.model = get_peft_model(self.model, lora_config)

    logger.info("LoRA configuration applied successfully")
    logger.info("Model trainable parameters:")
    # print_trainable_parameters() prints its summary and returns None,
    # so it is called directly rather than passed to logger.info().
    self.model.print_trainable_parameters()

    return self.model
```
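To get a feel for why LoRA keeps training cheap, the number of parameters it adds can be worked out by hand: for a weight matrix of shape d_out × d_in, rank-r LoRA adds two small matrices with r × (d_in + d_out) parameters in total. The sketch below uses hypothetical layer dimensions chosen only for illustration; they are not read from the model.

```python
def lora_added_params(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters LoRA adds to one linear layer: A is r x d_in, B is d_out x r."""
    return r * (d_in + d_out)


r, alpha = 8, 32  # the values passed to config_lora in this article

# Hypothetical projection shapes, for illustration only.
q_proj_added = lora_added_params(d_in=2048, d_out=2048, r=r)
v_proj_added = lora_added_params(d_in=2048, d_out=256, r=r)
added_per_layer = q_proj_added + v_proj_added

full_q_proj = 2048 * 2048                # parameters in the frozen q_proj weight
fraction = q_proj_added / full_q_proj    # share of q_proj that is actually trained
scaling = alpha / r                      # factor applied to the low-rank update

print(added_per_layer, fraction, scaling)
```

With these assumed shapes, the adapter on q_proj trains under 1% as many parameters as the frozen matrix it modifies, which is what makes a single rented GPU sufficient.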

Then, call the config_lora method to apply the LoRA configuration to the downloaded model from Qwen2_5_VLForConditionalGeneration.from_pretrained(…).

The model with the applied LoRA setup is then stored in self.model.

```python
import torch
from transformers import Qwen2_5_VLForConditionalGeneration


def load_model(self) -> None:
    """
    Load the pre-trained VLM model and apply LoRA configuration.

    Loads a Qwen2.5-VL model with bfloat16 precision and applies LoRA
    with default hyperparameters (r=8, alpha=32, dropout=0.1).
    """
    logger.info(f"Loading pre-trained model: {self.model_id}")
    logger.info("Using bfloat16 precision and auto device mapping")

    try:
        self.model = Qwen2_5_VLForConditionalGeneration.from_pretrained(
            pretrained_model_name_or_path=self.model_id,
            torch_dtype=torch.bfloat16,
            device_map="auto"
        )
        logger.info("Base model loaded successfully")
    except Exception as e:
        logger.error(f"Failed to load base model: {str(e)}")
        raise

    logger.info("Applying LoRA configuration...")
    self.model = LoRA(self.model).config_lora(
        task_type="CAUSAL_LM", r=8, alpha=32, dropout=0.1
    )
    logger.info("Model loading and LoRA configuration completed")
```

After that, configure the GRPO training parameters with GRPOConfig(..), using the constants defined in constants.py.

```python
from trl import GRPOConfig


def _config_grpo(self,
                 output_dir: str,
                 lr: float,
                 epochs: int,
                 batch_size: int,
                 max_length: int,
                 num_generations: int,
                 max_prompt_length: int,
                 log_steps: int,
                 save_steps: int) -> GRPOConfig:
    """
    Configure GRPO training parameters.

    Args:
        output_dir (str): Directory to save model checkpoints and logs
        lr (float): Learning rate for training
        epochs (int): Number of training epochs
        batch_size (int): Batch size per device
        max_length (int): Maximum length of generated completions
        num_generations (int): Number of generations per prompt for reward calculation
        max_prompt_length (int): Maximum length of input prompts
        log_steps (int): Frequency of logging training metrics
        save_steps (int): Frequency of saving model checkpoints

    Returns:
        GRPOConfig: Configured training arguments
    """
    ...

    # Configure training arguments using GRPOConfig
    training_args: GRPOConfig = GRPOConfig(
        output_dir=output_dir,
        learning_rate=lr,
        remove_unused_columns=False,  # to access the solution column in accuracy_reward
        num_train_epochs=epochs,
        bf16=True,
        # Parameters that control the data preprocessing
        per_device_train_batch_size=batch_size,
        max_completion_length=max_length,  # default: 256
        num_generations=num_generations,  # default: 8
        max_prompt_length=max_prompt_length,
        # Parameters related to reporting and saving
        report_to=["tensorboard"],
        logging_steps=log_steps,
        push_to_hub=True,
        save_strategy="steps",
        save_steps=save_steps,
    )

    logger.info("GRPO configuration completed successfully")
    return training_args
```

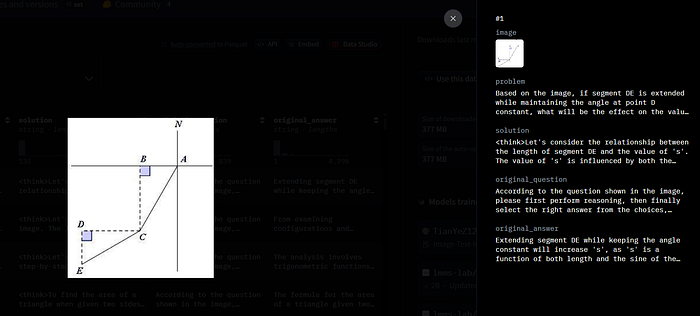

The train method handles the complete training process of the VLM model using GRPO.

It begins by configuring the hyperparameters through the _config_grpo method. Next, it initializes a GRPOTrainer with the model, processor, reward functions, and configuration settings.

The trainer manages the actual training procedure, which starts when trainer.train() is called.

Throughout training, progress and checkpoints are logged and saved at the specified intervals.

Once training is completed, the method saves the final trained model to the output directory (trainer.save_model(...)) and pushes it to the Hugging Face Hub for deployment or sharing (trainer.push_to_hub(...)).

```python
from trl import GRPOConfig, GRPOTrainer


def train(self,
          train_dataset: HFDataset,
          output_dir: str,
          learning_rate: float,
          epochs: int,
          batch_size: int,
          max_length: int,
          num_generations: int,
          max_prompt_length: int,
          log_steps: int,
          save_steps: int) -> None:
    """
    Train the VLM model using GRPO algorithm.

    Args:
        train_dataset (HFDataset): The training dataset to use for training
        output_dir (str): Directory path where training outputs will be saved
        learning_rate (float): Learning rate for the optimizer during training
        epochs (int): Number of complete training epochs to run
        batch_size (int): Number of samples per training batch per device
        max_length (int): Maximum length of generated completions during training
        num_generations (int): Number of generations per prompt for reward calculation
        max_prompt_length (int): Maximum length of input prompts during training
        log_steps (int): Frequency of logging training metrics (every N steps)
        save_steps (int): Frequency of saving model checkpoints (every N steps)

    Note:
        This method configures and executes GRPO training with the specified hyperparameters.
        The training process will save checkpoints and logs to the specified output directory.
    """
    logger.info("STARTING VLM GRPO TRAINING")

    # Log dataset information
    logger.info(f"Training dataset size: {len(train_dataset)}")
    logger.info(f"Dataset features: {train_dataset.features}")

    # Configure training
    train_args: GRPOConfig = self._config_grpo(
        output_dir=output_dir,
        lr=learning_rate,
        epochs=epochs,
        batch_size=batch_size,
        max_length=max_length,
        num_generations=num_generations,
        max_prompt_length=max_prompt_length,
        log_steps=log_steps,
        save_steps=save_steps
    )

    logger.info("Initializing GRPO trainer...")
    try:
        trainer: GRPOTrainer = GRPOTrainer(
            model=self.model,
            processing_class=self.processor,
            reward_funcs=[Reward.format_reward, Reward.accuracy_reward],
            args=train_args,
            train_dataset=train_dataset,
        )
        logger.info("GRPO trainer initialized successfully")
    except Exception as e:
        logger.error(f"Failed to initialize GRPO trainer: {str(e)}")
        raise

    logger.info("Starting training...")
    logger.info(f"Training will run for {epochs} epochs")
    logger.info(f"Model checkpoints will be saved every {save_steps} steps")
    logger.info(f"Training metrics will be logged every {log_steps} steps")

    try:
        trainer.train()
        logger.info("Training completed successfully!")
    except Exception as e:
        logger.error(f"Training failed: {str(e)}")
        raise

    # Save final model
    logger.info(f"Saving final model to {train_args.output_dir}")
    try:
        trainer.save_model(train_args.output_dir)
        logger.info("Model saved successfully")
    except Exception as e:
        logger.error(f"Failed to save model: {str(e)}")
        raise

    # Push to hub
    logger.info(f"Pushing model to hub with name: {output_dir}")
    try:
        trainer.push_to_hub(dataset_name=output_dir)
        logger.info("Model pushed to hub successfully")
    except Exception as e:
        logger.error(f"Failed to push model to hub: {str(e)}")
        raise

    logger.info("VLM GRPO TRAINING COMPLETED SUCCESSFULLY")
```

6. Run the Training

The training process can be initiated by executing the command below.

```bash
python src/main.py --mode train
```

After execution, the system will load the multimodal dataset, apply GRPO optimization with the configured reward functions, save checkpoints during training, and log training metrics for monitoring.

You can verify your trained model on the Hugging Face Hub by logging in to your account and going to your profile, where the model you pushed will be listed.

7. Test the model

After training, the model saved on the Hugging Face Hub can be downloaded for output validation.

The model is loaded using its model ID, and inputs are first processed with the processor before generating outputs using trained_model.generate(**inputs, max_new_tokens=500).

Finally, the generated output is decoded into text using trained_processor.decode(output_ids[0], skip_special_tokens=True).

```python
import time

import torch
from qwen_vl_utils import process_vision_info
from transformers import AutoProcessor, Qwen2_5_VLForConditionalGeneration


def test():
    # model_id and dataset are assumed to be defined elsewhere in main.py
    trained_model_id = ModelConfig.TRAINED_MODEL_ID

    trained_model = Qwen2_5_VLForConditionalGeneration.from_pretrained(
        trained_model_id,
        torch_dtype="auto",
        device_map="auto",
    )
    trained_processor = AutoProcessor.from_pretrained(trained_model_id, use_fast=True, padding_side="left")
    processor = AutoProcessor.from_pretrained(model_id, use_fast=True, padding_side="left")

    test_dataset = dataset.get_test_dataset()

    def generate_with_reasoning(problem, image):
        SYSTEM_PROMPT: str = SystemPrompts.SYSTEM_PROMPT

        # Conversation setting for sending to the model
        conversation = [
            {"role": "system", "content": SYSTEM_PROMPT},
            {
                "role": "user",
                "content": [
                    {"type": "image", "image": image},
                    {"type": "text", "text": problem},
                ],
            },
        ]
        prompt = trained_processor.apply_chat_template(
            conversation,
            add_generation_prompt=True,
            tokenize=False
        )

        # Process images using the process_vision_info from qwen_vl_utils
        image_inputs, video_inputs = process_vision_info(conversation)

        inputs = processor(
            text=[prompt],
            images=image_inputs,
            videos=video_inputs,
            padding=True,
            return_tensors="pt",
        )
        inputs = inputs.to(trained_model.device)

        # Generate text without gradients
        start_time = time.time()
        with torch.no_grad():
            output_ids = trained_model.generate(**inputs, max_new_tokens=500)
        end_time = time.time()

        # Decode and extract model response
        generated_text = trained_processor.decode(output_ids[0], skip_special_tokens=True)

        # Get inference time
        inference_duration = end_time - start_time

        # Get number of generated tokens
        num_input_tokens = inputs["input_ids"].shape[1]
        num_generated_tokens = output_ids.shape[1] - num_input_tokens

        return generated_text, inference_duration, num_generated_tokens

    generated_text, inference_duration, num_generated_tokens = generate_with_reasoning(
        test_dataset[0]['problem'],
        test_dataset[0]['image'],
    )

    logger.info(generated_text)
```

The testing process can be initiated by executing the command below.

```bash
# Test the trained model
python src/main.py --mode test
```

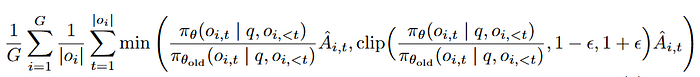

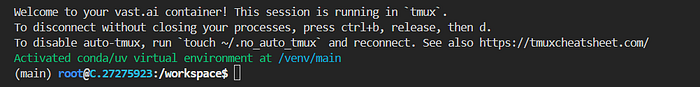

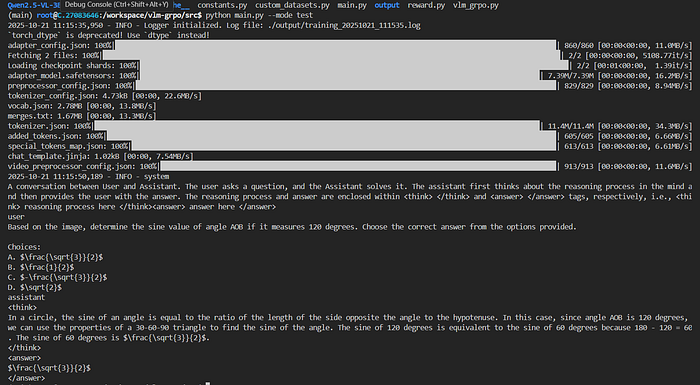

This is the example output of the sample data used for testing.

It can be seen that the model generates its thinking process inside the `<think>` tags and its final answer inside the `<answer>` tags.

```text
system
A conversation between User and Assistant. The user asks a question, and the Assistant solves it. The assistant first thinks about the reasoning process in the mind and then provides the user with the answer. The reasoning process and answer are enclosed within <think> </think> and <answer> </answer> tags, respectively, i.e., <think> reasoning process here </think><answer> answer here </answer>
user
Based on the image, determine the sine value of angle AOB if it measures 120 degrees. Choose the correct answer from the options provided.
Choices:
A. $\frac{\sqrt{3}}{2}$
B. $\frac{1}{2}$
C. $-\frac{\sqrt{3}}{2}$
D. $\sqrt{2}$
assistant
<think> In a circle, the sine of an angle is equal to the ratio of the length of the side opposite the angle to the hypotenuse. In this case, since angle AOB is 120 degrees, we can use the properties of a 30-60-90 triangle to find the sine of the angle. The sine of 120 degrees is equivalent to the sine of 60 degrees because 180 - 120 = 60. The sine of 60 degrees is $\frac{\sqrt{3}}{2}$. </think>
<answer> $\frac{\sqrt{3}}{2}$ </answer>
```
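The model's final answer can be double-checked numerically. The short sketch below only verifies the arithmetic behind choice A (sin 120° = sin 60° = √3/2); it says nothing about the image itself.

```python
import math

sin_120 = math.sin(math.radians(120))
sin_60 = math.sin(math.radians(60))
choice_a = math.sqrt(3) / 2

# The supplementary-angle identity sin(180° - x) = sin(x) gives sin 120° = sin 60°.
print(math.isclose(sin_120, sin_60))    # True
print(math.isclose(sin_120, choice_a))  # True
```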

Practical Applications

This GRPO implementation on vision-language models is particularly effective in tasks that demand strong reasoning and planning capabilities on both visuals and text, such as:

- Mathematical Problem Solving: Generating step-by-step visual reasoning to solve complex equations or geometry tasks.

- Scientific Analysis: Interpreting and reasoning over charts, graphs, and experimental visuals to form logical conclusions.

- Educational Content: Structuring visual explanations through deliberate, multi-step reasoning to enhance learning clarity.

- Technical Documentation: Analyzing and explaining technical diagrams using systematic planning and contextual understanding.

Conclusion

GRPO offers an alternative approach to training vision-language models for reasoning tasks. It rewards thoughtful reasoning and strategic planning rather than focusing solely on output accuracy. From educational AI to scientific reasoning and technical interpretation, GRPO offers a transformative framework for developing VLMs that think, plan, and reason across both vision and language.


Published via Towards AI