Author(s): Sayanteka Chakraborty

Originally published on Towards AI.

1. Introduction

In e-commerce, success hinges on one thing: showing the right product to the right user at the right time. Whether it’s search results, recommendations, or personalized feeds, every interaction shapes how a customer feels about a brand. And behind that experience lie three pillars: relevance, personalization, and multilingual understanding.

Relevance:

When a shopper searches for “waterproof hiking backpack,” the system shouldn’t serve random fashion bags or travel rucksacks. It should surface backpacks designed for trekking and hiking, tuned to the user’s intent, not just their keywords.

Personalization:

Now imagine two users typing “smartwatch.” User A has been exploring fitness trackers, so they should see smartwatches with heart-rate monitoring, GPS, and long battery life. User B, however, has been browsing luxury accessories, so the system should recommend high-end models from Apple or TAG Heuer.

This level of intelligence comes from learning patterns hidden in browsing history, cart behavior, and demographic context.

Multilingual Understanding:

A global shopper base means diverse languages. If a user in India searches for “चाय का कप” (tea cup), they should instantly see the same results as someone searching “tea cup” in English.

To deliver this kind of smart retrieval, we need to move beyond simple keyword matching. Queries and product descriptions must be represented as vectors — mathematical representations that capture meaning and relationships between words while preserving important keyword signals.

That’s where Qdrant comes in. Acting as the engine for intelligent search and recommendation, Qdrant stores, indexes, and searches these vector representations efficiently at scale. With built-in support for sparse, dense, and hybrid retrieval, it enables developers to build real-time product recommendations, personalized search, and multilingual discovery systems that truly understand what users mean — not just what they type.

2. Lexical Retrieval vs Dense Retrieval vs Sparse Retrieval

2.1 Lexical Retrieval

Lexical retrieval converts text into bag-of-words vectors, where each word is treated as a separate dimension and assigned a statistical weight using models such as TF-IDF or BM25. Only words that appear in the document have non-zero weights; words absent from the document receive a weight of zero.

In this approach:

- Common words across documents get low weight because they appear frequently and carry less distinguishing power.

- Rare words get high weight, since they occur less often and are assumed to be more informative.
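The weighting idea in the two points above can be illustrated with a minimal sketch. The four-product corpus below is hypothetical, and the smoothed IDF formula is only one common variant (it matches the default in scikit-learn's TfidfVectorizer); BM25 uses a different formula.

```python
import math
from collections import Counter

# Hypothetical mini-catalog, used only for illustration.
corpus = [
    "40L trekking backpack with rain cover",
    "alpine brand leather handbag",
    "travel backpack for camping trips",
    "kids school backpack",
]

def tokenize(text):
    return text.lower().split()

# Document frequency: in how many products does each word occur?
doc_freq = Counter()
for doc in corpus:
    doc_freq.update(set(tokenize(doc)))

def idf(word):
    """Smoothed inverse document frequency (one common variant)."""
    n_docs = len(corpus)
    return math.log((1 + n_docs) / (1 + doc_freq[word])) + 1

for word in ("backpack", "alpine"):
    print(f"{word}: df={doc_freq[word]}, idf={idf(word):.3f}")
```

In this toy corpus, “backpack” occurs in three of the four products and gets an IDF of about 1.22, while “alpine” occurs in one and gets about 1.92. The rare word carries more weight regardless of whether it is relevant to the shopper's intent.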

Example:

User Query: “Waterproof hiking backpack”

Product A: “40L trekking backpack with rain cover”

Product B: “Alpine brand leather handbag”

Product C: “Travel rucksack for camping trips”

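Lexical retrieval only scores the terms that the query and a product share. Product A matches on “backpack” alone; “trekking” and “rain cover” contribute nothing, even though they mean much the same as “hiking” and “waterproof”. Products B and C share no terms with the query, so a relevant rucksack phrased differently would be missed, while an irrelevant product that merely repeats a rare query word would score high. Understanding that “trekking backpack” (Product A) is semantically the closest match to “hiking backpack” is missing here.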

2.2 Dense Retrieval

Dense retrieval represents text, such as user queries and product descriptions, as dense vectors (embeddings) in a comparatively low-dimensional space, typically a few hundred dimensions rather than one dimension per vocabulary word. Instead of relying on exact word matching, neural models such as BERT or GPT capture the semantic meaning of text, so that texts with similar meanings have similar vector representations, even when they use completely different words.

Example:

User Query: “Waterproof hiking backpack”

Product A: “40L trekking backpack with rain cover”

Product B: “Alpine brand leather handbag”

Product C: “Travel rucksack for camping trips”

The model gives Product A a high score, which is correct: it is a backpack meant for trekking and includes a rain cover. Product B is clearly irrelevant and gets a low similarity score. Product C, however, also gets a high score even though it is a travel rucksack, not a waterproof hiking backpack. This happens because the dense model treats “hiking backpack” and “travel rucksack” as semantically close, since both are bags for carrying items outdoors. As a result, it fails to capture the specific intent: the user wants a waterproof technical hiking bag, not a travel bag for clothes.

Dense models are also computationally expensive: every query and document must pass through a neural encoder before their vectors can be compared.
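To make the similarity comparison concrete, here is a minimal sketch of ranking by cosine similarity. The three-dimensional vectors are invented for illustration only; a real embedding model produces hundreds of dimensions, and the actual scores would differ.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up "embeddings" (assumption: not produced by any real model).
query = [0.9, 0.8, 0.1]  # "Waterproof hiking backpack"
embeddings = {
    "Product A": [0.8, 0.9, 0.2],  # trekking backpack with rain cover
    "Product B": [0.1, 0.2, 0.9],  # leather handbag
    "Product C": [0.7, 0.6, 0.3],  # travel rucksack
}

# Rank products by similarity to the query, most similar first.
ranked = sorted(
    embeddings,
    key=lambda name: cosine(query, embeddings[name]),
    reverse=True,
)
for name in ranked:
    print(f"{name}: {cosine(query, embeddings[name]):.3f}")
```

With these toy vectors, Products A and C both land close to the query and Product B far from it, which mirrors the behavior described above: nothing in the vector arithmetic distinguishes a hiking backpack from a travel rucksack if the model places them near each other.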

2.3 Sparse Retrieval

Sparse retrieval represents the new generation of search models that combine the precision of lexical retrieval (TF-IDF, BM25) with the semantic depth of dense retrieval (BERT, GPT). Instead of assigning fixed statistical weights to words, sparse neural models such as SPLADE and miniCOIL use transformer-based architectures to learn which terms are truly important and how they relate contextually to others.

Because they leverage sparse representations and rely on lightweight indexing structures (like inverted lists) rather than computationally expensive vector similarity searches, sparse retrieval is more efficient and scalable than dense models — especially during query time.

Example:

User Query: “Waterproof hiking backpack”

Product A: “40L trekking backpack with rain cover”

Product B: “Alpine brand leather handbag”

Product C: “Travel rucksack for camping trips”

Product C, which dense retrieval wrongly boosted in the example above, now gets a lower score because it lacks matching terms, even though “hiking backpack” and “travel rucksack” are semantically close. Product A gets the highest score and Product B the lowest.
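The inverted-list idea mentioned in this section can be sketched in a few lines. The term weights below are hypothetical stand-ins for what a sparse neural model might assign (including a few expanded terms); they are not real model output, and this is not how Qdrant implements its index internally.

```python
from collections import defaultdict

# Hypothetical sparse vectors: term -> learned weight.
docs = {
    "A": {"trekking": 1.2, "hiking": 0.9, "backpack": 1.0, "rain": 0.7, "waterproof": 0.6},
    "B": {"alpine": 1.1, "leather": 1.0, "handbag": 1.2},
    "C": {"travel": 1.0, "rucksack": 1.1, "backpack": 0.4, "camping": 0.9},
}

# Inverted index: term -> list of (doc_id, weight) postings.
inverted_index = defaultdict(list)
for doc_id, vector in docs.items():
    for term, weight in vector.items():
        inverted_index[term].append((doc_id, weight))

def search(query_vector):
    """Dot-product scoring that only visits postings of the query terms."""
    scores = defaultdict(float)
    for term, q_weight in query_vector.items():
        for doc_id, d_weight in inverted_index.get(term, []):
            scores[doc_id] += q_weight * d_weight
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

query = {"waterproof": 1.0, "hiking": 1.1, "backpack": 0.9}
ranked = search(query)
print(ranked)
```

Only documents that share at least one non-zero term with the query are ever touched, so Product B is never scored at all. That is the source of the query-time efficiency: work scales with the length of the matching posting lists, not with the size of the catalog.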

3. SPLADE vs miniCOIL

Both SPLADE and miniCOIL are sparse neural models, that is, they represent text as high-dimensional, sparse vectors where most word dimensions are zero, but the important words have learned weights capturing semantic meaning.

3.1 SPLADE

SPLADE stands for Sparse Lexical and Expansion Model for Information Retrieval. It is a sparse neural model in which queries and documents are represented as vectors with mostly zero values, just like TF-IDF or BM25, but the non-zero weights are learned using transformer-based models like BERT.

SPLADE also supports term expansion, meaning the model can assign weights to tokens that do not explicitly appear in the document but are contextually related to those that do. This powerful feature allows the model to better capture the semantic context of the document and improve retrieval performance.

For example:

Input: “wireless noise cancelling headphones”

SPLADE output:

# dummy weights assigned for explanation
{
  wireless: 1.0,
  headphones: 0.9,
  bluetooth: 0.8,
  audio: 0.7,
  music: 0.6,
  sound: 0.6,
  earphones: 0.5,
  noise: 0.6,
  cancelling: 0.2
}

- Assigns learned weights that show how important each word is.

- Adds semantically related terms that are not present in the input, such as bluetooth, audio, music, sound, and earphones.
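How expansion terms end up in the vector can be sketched with a toy version of the pooling step. The vocabulary and the per-token scores below are invented; a real SPLADE model scores every entry of a roughly 30,000-term vocabulary for each input token. The sketch assumes the max-pooling variant with log(1 + ReLU) saturation from the published SPLADE formulation.

```python
import math

# Tiny hypothetical vocabulary (a real model has tens of thousands of terms).
vocab = ["wireless", "headphones", "bluetooth", "audio", "banana", "leather"]

# One row per input token ("wireless", "headphones"); one made-up score per
# vocabulary term, standing in for the masked-language-model logits.
token_logits = [
    [2.5, 0.8, 1.6, 0.4, -3.0, -2.1],
    [0.9, 2.2, 0.7, 1.3, -2.5, -1.8],
]

def splade_pool(logits):
    """Max-pool log(1 + ReLU(score)) over input tokens; drop zero weights."""
    weights = {}
    for j, term in enumerate(vocab):
        w = max(math.log1p(max(row[j], 0.0)) for row in logits)
        if w > 0:
            weights[term] = round(w, 3)
    return weights

weights = splade_pool(token_logits)
print(weights)
```

The terms “bluetooth” and “audio” never appear in the input, yet they receive non-zero weights because the input tokens score them positively. Terms with negative scores everywhere (“banana”, “leather”) are zeroed by the ReLU and left out, which is what keeps the vector sparse.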

3.2 miniCOIL

miniCOIL, unlike SPLADE, does not expand documents with new terms; it only reweights the existing words based on their contextual importance.

As a result, the index size remains smaller and more compact than that of SPLADE, enabling faster and more efficient retrieval with higher precision. However, since miniCOIL focuses solely on exact terms and lacks semantic expansion, its recall is lower compared to SPLADE, which can retrieve a broader range of semantically related results.

4. Qdrant Architecture

The diagram above represents a high-level overview of some of the main components of Qdrant.

4.1 Collection

A collection is like a table or index where related vectors are stored together. Example: A collection named products_collection might store all product vectors for an e-commerce catalog.

4.2 Distance Metrics

Qdrant uses distance metrics to compare vectors and measure similarity, particularly in the case of dense vector representations. Common metrics include cosine, dot product, and Euclidean distance. The metric is defined when the collection is created.
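The difference between the three metrics is easiest to see on a pair of vectors that point in the same direction but differ in length. This is a plain-Python sketch for intuition, not Qdrant's implementation; the two vectors are arbitrary.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

a = [1.0, 2.0, 2.0]
b = [2.0, 4.0, 4.0]  # same direction as a, twice the length

print("cosine:   ", cosine(a, b))
print("dot:      ", dot(a, b))
print("euclidean:", euclidean(a, b))
```

Cosine similarity is 1.0 because it ignores magnitude, the dot product (18.0) grows with vector length, and the Euclidean distance (3.0) is non-zero because the points are physically apart. Which behavior is desirable depends on how the embedding model was trained, which is why the metric is fixed per collection.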

4.3 Point & Payload

Each point represents a single data entry that contains an ID, a vector (dense or sparse), and an optional payload (metadata).

{
  "id": 101,
  "vector": [0.12, -0.88, 0.55, ...],
  "payload": {
    "title": "Waterproof Hiking Backpack 30L",
    "brand": "WildTrail",
    "category": "Backpacks",
    "price": 1999,
    "language": "en",
    "rating": 4.5,
    "in_stock": true
  }
}

The JSON object above represents a point in Qdrant. The vector is a numerical representation of the product’s title or description. Each point corresponds to one product in the search system. When many such points are stored together, they form a collection named products_collection.

A payload is the additional JSON metadata attached to a vector, used for filtering, ranking, or applying business logic during search.

5. Product Search Using Qdrant

Let us now explore a comparative study of lexical search, dense retrieval, and sparse neural retrieval using Qdrant for an e-commerce use case.

Install the following Python libraries:

! pip install qdrant-client
! pip install sentence-transformers
! pip install transformers
! pip install scikit-learn

Load the required Python libraries:

from qdrant_client import QdrantClient, models

from qdrant_client.models import PointStruct, SparseVector,VectorParams, Distance

from sklearn.feature_extraction.text import TfidfVectorizer

from sentence_transformers import SentenceTransformer

from transformers import AutoModelForMaskedLM, AutoTokenizer

import torch

import numpy as np

Initialize the Qdrant client:

client = QdrantClient(":memory:")

Create dummy data for e-commerce:

products = [
    {"id": "S001", "title": "Nike Air Zoom Pegasus", "description": "Lightweight breathable running shoes", "brand": "Nike", "category": "Running", "price": 8999},
    {"id": "S002", "title": "Adidas Ultraboost 5.0", "description": "Cushioned marathon runner with energy return", "brand": "Adidas", "category": "Running", "price": 12999},
    {"id": "S003", "title": "ASICS Gel-Kayano Stability", "description": "Comfortable long distance support running shoes", "brand": "ASICS", "category": "Running", "price": 11999},
    {"id": "S004", "title": "Puma Velocity Nitro 2", "description": "Nitro foam responsive daily trainer", "brand": "Puma", "category": "Running", "price": 8499},
    {"id": "S005", "title": "Skechers GoWalk", "description": "Soft cushioned walking shoes", "brand": "Skechers", "category": "Walking", "price": 4999},
    {"id": "S006", "title": "Clarks Oxford Leather", "description": "Classic formal leather shoes", "brand": "Clarks", "category": "Formal", "price": 7499},
    {"id": "S007", "title": "Woodland Trek Waterproof", "description": "Rugged waterproof trekking boots", "brand": "Woodland", "category": "Outdoor", "price": 6999},
    {"id": "S008", "title": "Converse Chuck Taylor", "description": "Iconic casual canvas sneakers", "brand": "Converse", "category": "Casual", "price": 3999},
    {"id": "S009", "title": "Nike Metcon Trainer", "description": "Stable cross-training gym shoes", "brand": "Nike", "category": "Training", "price": 7999},
    {"id": "S010", "title": "Adidas Terrex Trail GTX", "description": "Waterproof trail running shoes with grip", "brand": "Adidas", "category": "Trail", "price": 13999},
]

# One text per product: title followed by description
texts = [p["title"] + " " + p["description"] for p in products]

5.1 Lexical Search (TF-IDF)

vectorizer = TfidfVectorizer()
tfidf_matrix = vectorizer.fit_transform(texts)
print(tfidf_matrix.shape)

# Create a collection that holds one named sparse vector per product
client.recreate_collection(
    collection_name="products_collection",
    vectors_config={},
    sparse_vectors_config={
        "tfidf": models.SparseVectorParams(
            index=models.SparseIndexParams(on_disk=False)
        )
    },
)

# Insert the product data; row i of the TF-IDF matrix belongs to product i
client.upsert(
    collection_name="products_collection",
    points=[
        models.PointStruct(
            id=i,
            payload=product,
            vector={
                "tfidf": models.SparseVector(
                    indices=tfidf_matrix[i].tocoo().col.tolist(),
                    values=tfidf_matrix[i].tocoo().data.tolist(),
                )
            },
        )
        for i, product in enumerate(products)
    ],
)

Output:

(10, 62)

Number of products = 10

Number of unique words (bag-of-words vocabulary) = 62

Here, indices are the positions of the non-zero terms in the vector, and values are the importance weights for each index.

query_text = "waterproof running shoes"

# transform() expects a list of documents, so wrap the query in a list
query_vector = vectorizer.transform([query_text]).tocoo()
print(query_vector.shape)
print(query_vector.col.tolist())
print(query_vector.data.tolist())

# Search for similar documents
result = client.search(
    collection_name="products_collection",
    query_vector=models.NamedSparseVector(
        name="tfidf",
        vector=models.SparseVector(
            indices=query_vector.col.tolist(),
            values=query_vector.data.tolist(),
        ),
    ),
    limit=3,
)
result

Output:

(1, 62)

[40, 41, 58]

[0.594703257324028, 0.4292602362938498, 0.6797526647723708]

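TfidfVectorizer orders its vocabulary alphabetically, so the output above means that “running” is at index 40 with a weight of 0.59, “shoes” is at index 41 with a weight of 0.43, and “waterproof” is at index 58 with a weight of 0.68. “Shoes” gets the lowest weight because it appears in the most products.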
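The three query weights can be reproduced by hand. The sketch below assumes scikit-learn's default settings (smoothed IDF and L2 normalization) and uses document frequencies counted from the ten dummy products above; each query term occurs once, so its term frequency is 1.

```python
import math

n_docs = 10  # products in the dummy catalog

# Number of products (title + description) containing each query term.
doc_freq = {"running": 3, "shoes": 6, "waterproof": 2}

# Smoothed IDF, as used by TfidfVectorizer with default parameters.
idf = {term: math.log((1 + n_docs) / (1 + df)) + 1 for term, df in doc_freq.items()}

# L2-normalize the query vector (term frequency is 1 for every term).
norm = math.sqrt(sum(w * w for w in idf.values()))
weights = {term: w / norm for term, w in idf.items()}

for term, weight in weights.items():
    print(f"{term}: {weight:.4f}")
```

The results (about 0.5947, 0.4293, and 0.6798) match the printed query vector, which confirms that the rarest term, “waterproof”, gets the largest weight and the most common one, “shoes”, the smallest.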

5.2 Dense Retrieval

bert_model = SentenceTransformer("sentence-transformers/all-MiniLM-L6-v2")

client.recreate_collection(
    collection_name="products_collection",
    vectors_config={
        "dense": models.VectorParams(
            size=384,
            distance=models.Distance.COSINE,
        )
    },
)

The collection is created to store 384-dimensional dense vectors, the output size of all-MiniLM-L6-v2, and to compare them with cosine similarity for semantic search.

client.upsert(
    collection_name="products_collection",
    points=[
        models.PointStruct(
            id=i,
            payload=product,
            vector={
                "dense": bert_model.encode(product["title"] + " " + product["description"]).tolist()
            },
        )
        for i, product in enumerate(products)
    ],
)

query_text = "waterproof running shoes"
query_vector = bert_model.encode(query_text).tolist()

result = client.search(
    collection_name="products_collection",
    query_vector=models.NamedVector(
        name="dense",
        vector=query_vector,
    ),
    limit=3,
)
result

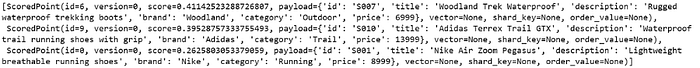

Output:

5.3 Sparse Neural Retrieval (SPLADE)

splade_model_name = "naver/splade-cocondenser-ensembledistil"
splade_tokenizer = AutoTokenizer.from_pretrained(splade_model_name)
splade_model = AutoModelForMaskedLM.from_pretrained(splade_model_name)

# Define the encoder first, because it is used when indexing the products
def encode_splade(text):
    inputs = splade_tokenizer(text, return_tensors="pt", truncation=True)
    with torch.no_grad():
        logits = splade_model(**inputs).logits.squeeze()
    # Max-pool the ReLU-activated logits over the token dimension
    max_values, _ = torch.max(torch.relu(logits), dim=0)
    max_values = max_values.numpy()
    # Keep only the vocabulary entries with a non-zero weight
    indices = np.nonzero(max_values)[0]
    values = max_values[indices]
    return models.SparseVector(indices=indices.tolist(), values=values.tolist())

client.recreate_collection(
    collection_name="products_collection",
    vectors_config={},
    sparse_vectors_config={"splade": models.SparseVectorParams()},
)

points = []
for i, product in enumerate(products):
    splade_vec = encode_splade(product["title"] + " " + product["description"])
    points.append(
        models.PointStruct(
            id=i,
            payload=product,
            vector={"splade": splade_vec},
        )
    )

client.upsert(collection_name="products_collection", points=points)

query_text = "waterproof running shoes"
query_splade_vec = encode_splade(query_text)

result = client.search(
    collection_name="products_collection",
    query_vector=models.NamedSparseVector(
        name="splade",
        vector=models.SparseVector(
            indices=query_splade_vec.indices,
            values=query_splade_vec.values,
        ),
    ),
    limit=3,
)

for item in result:
    print(item)

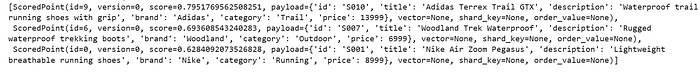

Output:

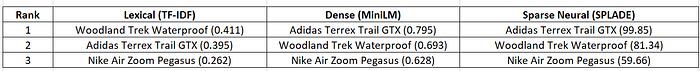

5.4 Ranked Results Comparison

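Lexical retrieval matches exact keywords such as “waterproof” and “running” but lacks semantic understanding. As a result, it can rank Woodland Trek Waterproof above Adidas Terrex Trail GTX: the Woodland text is short and repeats “waterproof”, so that single term carries a large weight, even though the Adidas product matches all three query terms.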

Dense vector retrieval captures semantic meaning but may overlook exact keyword matches.

Sparse neural vector representations (such as SPLADE) combine both strengths — they understand exact terms (e.g., “waterproof”) as well as semantically related ones (e.g., “GTX”, “trail”). Additionally, they offer faster retrieval than dense semantic models, making them both efficient and highly relevant for search applications.

6. Hybrid Retrieval for Multilingual Search: Why a Hybrid Approach?

Sparse neural models excel at keyword matching while maintaining contextual relevance, but they do not capture cross-language semantics or translation. Dense multilingual embeddings, on the other hand, capture meaning and context across different languages but often lack precision in keyword matching.

A hybrid retrieval approach combines the best of both worlds — leveraging the contextual and lexical precision of sparse neural models with the semantic and multilingual understanding of dense embeddings. This results in search outcomes that are both precise and meaningfully aligned across languages.

Let’s understand this through a practical e-commerce use case:

Multilingual E-commerce Product Dataset:

products = [
    {
        "id": 1,
        "title": "Waterproof hiking backpack 40L",
        "desc": "Durable backpack with rain cover, ideal for trekking and outdoor use",
        "brand": "TrailPro", "category": "Backpacks", "price": 3499, "lang": "en"
    },
    {
        "id": 2,
        "title": "Leather fashion handbag for women",
        "desc": "Premium leather handbag for daily use",
        "brand": "Modish", "category": "Handbags", "price": 4999, "lang": "en"
    },
    {
        "id": 3,
        "title": "जलरोधक ट्रेकिंग बैकपैक 35L",
        "desc": "बारिश के लिए रेन कवर सहित हल्का ट्रेकिंग बैग",
        "brand": "TrailPro", "category": "Backpacks", "price": 3299, "lang": "hi"
    },
    {
        "id": 4,
        "title": "ब्लूटूथ वायरलेस हेडफ़ोन",
        "desc": "लंबी बैटरी लाइफ वाले शोर रद्द करने वाले हेडफ़ोन",
        "brand": "SoundBeats", "category": "Headphones", "price": 2599, "lang": "hi"
    },
    {
        "id": 5,
        "title": "Bluetooth noise-cancelling headphones",
        "desc": "Wireless over-ear headphones with long battery life and ANC",
        "brand": "SoundBeats", "category": "Headphones", "price": 2699, "lang": "en"
    },
]

Loading Multilingual Dense and Sparse Neural Models for Hybrid Retrieval:

from transformers import AutoTokenizer, AutoModelForMaskedLM

dense_model = SentenceTransformer("sentence-transformers/paraphrase-multilingual-MiniLM-L12-v2")
tokenizer = AutoTokenizer.from_pretrained("naver/splade_v2_distil")
sparse_model = AutoModelForMaskedLM.from_pretrained("naver/splade_v2_distil")

def encode_splade(text):
    # Encode with the tokenizer and sparse model loaded just above
    inputs = tokenizer(text, return_tensors="pt", truncation=True)
    with torch.no_grad():
        logits = sparse_model(**inputs).logits.squeeze()
    # Max-pool the ReLU-activated logits over the token dimension
    max_values, _ = torch.max(torch.relu(logits), dim=0)
    max_values = max_values.numpy()
    indices = np.nonzero(max_values)[0]
    values = max_values[indices]
    return models.SparseVector(indices=indices.tolist(), values=values.tolist())

Defining Collection:

from qdrant_client import QdrantClient

client = QdrantClient(":memory:")

# One named dense vector and one named sparse vector per point
client.recreate_collection(
    collection_name="products_collection_multilingual",
    vectors_config={"dense": VectorParams(size=384, distance=Distance.COSINE)},
    sparse_vectors_config={"sparse": models.SparseVectorParams()},
)

Adding data to collection:

from qdrant_client.models import PointStruct, SparseVector, VectorParams, Distance

points = []
for i, product in enumerate(products):
    text = product["title"] + " " + product["desc"]
    points.append(
        models.PointStruct(
            id=i,
            vector={
                "dense": dense_model.encode(text).tolist(),
                "sparse": encode_splade(text),
            },
            payload={
                "title": product["title"],
                "brand": product["brand"],
                "category": product["category"],
                "price": product["price"],
                "lang": product["lang"],
            },
        )
    )

client.upsert(collection_name="products_collection_multilingual", points=points)

Searching Query:

query_text = "earphones"

# Sparse (SPLADE) search
query_splade_vec = encode_splade(query_text)
result_sparse = client.search(
    collection_name="products_collection_multilingual",
    query_vector=models.NamedSparseVector(
        name="sparse",
        vector=models.SparseVector(
            indices=query_splade_vec.indices,
            values=query_splade_vec.values,
        ),
    ),
    limit=3,
)

# Dense (multilingual embedding) search
query_vector = dense_model.encode(query_text).tolist()
result_dense = client.search(
    collection_name="products_collection_multilingual",
    query_vector=models.NamedVector(
        name="dense",
        vector=query_vector,
    ),
    limit=3,
)

# ------------- Helper Functions -------------

def normalize_scores(points):
    """
    Normalize scores from any retriever (dense or sparse) to [0, 1].
    Works even if scores vary wildly in scale or sign.
    """
    if not points:
        return {}
    scores = [p.score for p in points]
    min_s, max_s = min(scores), max(scores)
    denom = (max_s - min_s) if (max_s - min_s) != 0 else 1e-9
    return {p.id: (p.score - min_s) / denom for p in points}

def hybrid_score_fusion(result_sparse, result_dense, w_sparse=0.5, w_dense=0.5):
    """
    Combine sparse and dense search results using normalized weighted fusion.

    Parameters:
        w_sparse : float - weight for sparse retriever
        w_dense : float - weight for dense retriever

    Returns:
        list[tuple[id, combined_score]] sorted descending
    """
    sparse_norm = normalize_scores(result_sparse.points if hasattr(result_sparse, "points") else result_sparse)
    dense_norm = normalize_scores(result_dense.points if hasattr(result_dense, "points") else result_dense)
    all_ids = set(sparse_norm.keys()) | set(dense_norm.keys())
    combined = {}
    for pid in all_ids:
        # A document missing from one result list contributes 0 for that retriever
        s_score = sparse_norm.get(pid, 0.0)
        d_score = dense_norm.get(pid, 0.0)
        combined[pid] = (w_sparse * s_score) + (w_dense * d_score)
    fused = sorted(combined.items(), key=lambda x: x[1], reverse=True)
    return fused

fusion = hybrid_score_fusion(result_sparse, result_dense, w_sparse=0.4, w_dense=0.6)

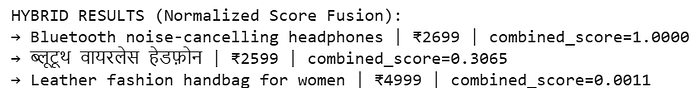

print("\nHYBRID RESULTS (Normalized Score Fusion):")
for pid, score in fusion:
    doc = client.retrieve("products_collection_multilingual", ids=[pid])[0]
    print(f"→ {doc.payload['title']} | ₹{doc.payload['price']} | combined_score={score:.4f}")

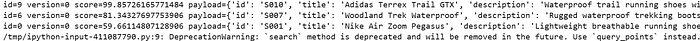

Output:

The user query is processed through a sparse neural model for keyword precision and semantic understanding, and a dense multilingual model for semantic and cross-language comprehension.

Since both models produce scores on different scales, the scores are normalized to a common range [0, 1] to ensure fair contribution from both systems during result fusion.

Weighted score blending then combines the lexical precision of the sparse model with the semantic depth of the dense model, producing a unified and balanced ranking.
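The normalization and blending steps are easier to follow with numbers. The scores below are invented for illustration (they are not the output of the searches above), and the 0.4 / 0.6 weights are simply the ones used in this example.

```python
def min_max(scores):
    """Rescale a {doc_id: score} mapping to the range [0, 1]."""
    lo, hi = min(scores.values()), max(scores.values())
    span = (hi - lo) or 1e-9  # avoid division by zero when all scores are equal
    return {doc_id: (s - lo) / span for doc_id, s in scores.items()}

# Made-up raw scores on very different scales.
sparse_scores = {"A": 14.0, "B": 9.0, "C": 4.0}
dense_scores = {"B": 0.82, "D": 0.70, "A": 0.52}

w_sparse, w_dense = 0.4, 0.6
sparse_norm = min_max(sparse_scores)
dense_norm = min_max(dense_scores)

# A document missing from one list contributes 0 for that retriever.
fused = {
    doc_id: w_sparse * sparse_norm.get(doc_id, 0.0) + w_dense * dense_norm.get(doc_id, 0.0)
    for doc_id in sparse_norm.keys() | dense_norm.keys()
}
ranking = sorted(fused, key=fused.get, reverse=True)

for doc_id in ranking:
    print(f"{doc_id}: {fused[doc_id]:.2f}")
```

Document B wins because it is ranked well by both retrievers, even though A has the top sparse score. One property of min-max normalization worth keeping in mind: the lowest-scoring hit in each list is mapped to 0, the same value a missing document receives, so with only three hits per retriever the bottom result contributes nothing from that side.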

In this example, the final hybrid results improve on the individual models, showing cross-lingual accuracy, context-aware retrieval, and high precision for multilingual product search in e-commerce.

7. Conclusion

In modern e-commerce, users expect search results that think like they do — relevant, personalized, and multilingual. Traditional keyword-based search (lexical retrieval) hits exact matches but misses intent. Dense vector search captures meaning but can overlook crucial keywords and domain nuances. Sparse neural retrieval brings the best of both worlds — combining keyword precision with semantic intelligence to deliver results that feel natural and context-aware.

This is where Qdrant steps in as the engine behind next-generation product discovery. It unifies sparse, dense, and hybrid retrieval in a single, developer-friendly framework with little setup. By combining sparse inverted indexing with optimized ANN search, Qdrant keeps hybrid queries fast, often returning results in milliseconds even across millions of products.

For developers, this means you can build intelligent, low-latency, multilingual search systems that scale effortlessly — whether you’re recommending sneakers, gadgets, or luxury travel gear. Qdrant lets you move beyond search that just matches words to search that truly understands meaning.
