MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) has introduced a groundbreaking framework called Distribution Matching Distillation (DMD). This approach collapses the traditional multi-step process of diffusion models into a single step, addressing the limitations of earlier methods.

Traditionally, image generation with diffusion models has been a complex, computationally intensive process that requires many iterations to refine the final result. The new DMD framework simplifies this process, cutting computation time significantly while maintaining the quality of the generated images. Led by PhD student Tianwei Yin, the research team achieved a remarkable feat: accelerating current diffusion models such as Stable Diffusion and DALL-E 3 by a factor of 30. Compare the output of Stable Diffusion (image on the left) after 50 steps with that of DMD (image on the right) after a single step. The quality and detail are impressive!

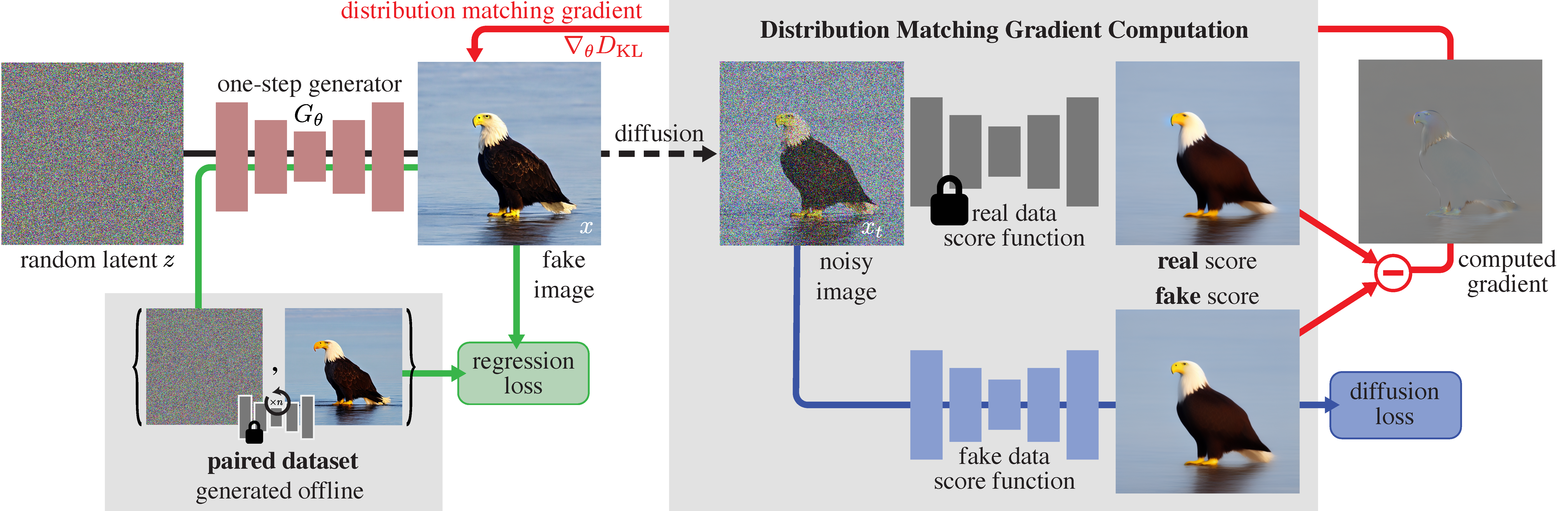

The key to DMD's success is its innovative approach, which combines principles from generative adversarial networks (GANs) with those of diffusion models. By distilling the knowledge of more complex, slower models into a faster one, DMD generates visual content in a single step.

But how does DMD achieve this feat? It combines two elements:

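1. Regression loss: anchors the mapping from noise to images, giving the space of images a coarse organization that makes training more stable.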

2. Distribution matching loss: ensures that the probability of the student model generating a given image corresponds to how often such an image occurs in the real world.
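One way to write the two elements as a single training objective is shown below. This is a hedged sketch rather than the paper's exact formulation: $G_\theta$ (the one-step student generator), $y$ (the teacher's multi-step output for the same input noise $z$), $\ell$ (some distance between images) and $\lambda_{\text{reg}}$ (a weighting factor) are notation introduced here for illustration.

$$
\mathcal{L}(\theta) = D_{\mathrm{KL}}\big(p_{\text{fake}} \,\|\, p_{\text{real}}\big) + \lambda_{\text{reg}}\, \mathbb{E}_{(z,\,y)}\big[\ell\big(G_\theta(z),\, y\big)\big]
$$

$$
\nabla_\theta D_{\mathrm{KL}}\big(p_{\text{fake}} \,\|\, p_{\text{real}}\big) = \mathbb{E}_{z}\Big[\big(s_{\text{fake}}(x) - s_{\text{real}}(x)\big)\, \frac{\partial G_\theta(z)}{\partial \theta}\Big], \qquad x = G_\theta(z)
$$

The second line is the standard reparameterized gradient of the KL divergence. It never needs the densities themselves, only the score functions $s = \nabla_x \log p$ of the real and generated distributions, which is exactly the kind of quantity a diffusion model estimates.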

By using two diffusion models as guides, DMD minimizes the discrepancy between the distributions of generated and real images, enabling faster generation without sacrificing quality.
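To make the idea concrete, here is a minimal 1-D toy sketch in Python with NumPy. It is not the authors' implementation: it assumes both the real data and the generator output are unit-variance Gaussians, so the two guide scores can be written in closed form instead of being estimated by trained diffusion models. The function names `gaussian_score` and `dmd_toy` and all parameter values are hypothetical. The generator parameter is updated with the score difference $s_{\text{fake}} - s_{\text{real}}$ evaluated on noised samples, which is the reparameterized gradient of $D_{\mathrm{KL}}(p_{\text{fake}} \| p_{\text{real}})$.

```python
import numpy as np


def gaussian_score(x, mean, var):
    """Score (gradient of the log density) of a 1-D Gaussian N(mean, var)."""
    return -(x - mean) / var


def dmd_toy(mu_real=3.0, theta=-2.0, lr=0.5, steps=200, batch=256, seed=0):
    """Toy distribution matching: move a one-step generator toward N(mu_real, 1).

    Assumptions (illustrative only): real data ~ N(mu_real, 1); the generator is
    G_theta(z) = theta + z with z ~ N(0, 1), so generated data ~ N(theta, 1).
    Closed-form Gaussian scores stand in for the two guide diffusion models.
    """
    rng = np.random.default_rng(seed)
    data_var = 1.0  # variance of both clean distributions in this toy
    for _ in range(steps):
        z = rng.standard_normal(batch)
        x = theta + z                          # one-step generation; dG/dtheta = 1
        sigma = rng.uniform(0.1, 2.0)          # random diffusion noise level
        x_t = x + sigma * rng.standard_normal(batch)
        var_t = data_var + sigma**2            # variance of both noised distributions
        s_real = gaussian_score(x_t, mu_real, var_t)  # stand-in for the frozen teacher
        s_fake = gaussian_score(x_t, theta, var_t)    # stand-in for the "fake" model
        grad = np.mean(s_fake - s_real)        # dKL/dtheta, since dG/dtheta = 1
        theta -= lr * grad                     # gradient descent on the KL
    return theta


print(dmd_toy())  # converges to (approximately) mu_real = 3.0
```

In this toy the score difference reduces to $(\theta - \mu_{\text{real}})/(1 + \sigma^2)$, so the generator is pulled toward the real mean at every noise level. In the real system, the "real" score comes from the frozen teacher and the "fake" score from a second diffusion model that is continually trained on the generator's own outputs.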

In their studies, Yin and his colleagues demonstrated DMD's effectiveness on a range of benchmarks. DMD performed consistently well on popular benchmarks such as class-conditional image generation on ImageNet, achieving a Fréchet Inception Distance (FID) within about 0.3 of the original multi-step models, evidence of the quality and diversity of the generated images. DMD also excelled at industrial-scale text-to-image generation, demonstrating its versatility and real-world applicability.
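FID compares two sets of images by fitting a Gaussian to feature embeddings of each set and computing the Fréchet distance between the two Gaussians: $\|\mu_1-\mu_2\|^2 + \operatorname{Tr}\big(\Sigma_1+\Sigma_2-2(\Sigma_1\Sigma_2)^{1/2}\big)$. Lower is better, and 0 means identical statistics. The NumPy sketch below implements that formula; the function names are hypothetical, and it assumes the feature vectors have already been extracted (standard FID uses activations from an Inception-v3 network, which is omitted here).

```python
import numpy as np


def _sqrtm_psd(m):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(m)
    vals = np.clip(vals, 0.0, None)  # drop tiny negative eigenvalues caused by round-off
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(mu1, cov1, mu2, cov2):
    """Fréchet distance between the Gaussians N(mu1, cov1) and N(mu2, cov2)."""
    diff = mu1 - mu2
    s1 = _sqrtm_psd(cov1)
    # Tr((cov1 @ cov2)^{1/2}) equals Tr((s1 @ cov2 @ s1)^{1/2}); the latter is symmetric PSD.
    cross_trace = np.trace(_sqrtm_psd(s1 @ cov2 @ s1))
    return float(diff @ diff + np.trace(cov1) + np.trace(cov2) - 2.0 * cross_trace)


def fid_from_features(feats_a, feats_b):
    """FID-style score from two (n_samples, n_features) arrays of image features."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    return frechet_distance(mu_a, cov_a, mu_b, cov_b)


# Two unit-covariance Gaussians whose means differ by (1, 1): distance is 1 + 1 = 2.
print(frechet_distance(np.zeros(2), np.eye(2), np.ones(2), np.eye(2)))
```

Working with $\Sigma_1^{1/2}\Sigma_2\Sigma_1^{1/2}$ instead of the product $\Sigma_1\Sigma_2$ keeps the matrix symmetric, so a plain eigendecomposition suffices and no general (complex-valued) matrix square root is needed.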

Despite these remarkable achievements, DMD's performance is inherently tied to the capabilities of the teacher model used during distillation. The current version uses Stable Diffusion v1.5 as its teacher; future iterations could use more advanced models, opening up new possibilities for high-quality, real-time visual editing.