A recent review published in *Intelligent Computing* sheds light on the emerging field of deep active learning (DeepAL), which combines the principles of active learning with deep learning techniques to optimize sample selection when training neural networks for AI tasks.

Deep learning, known for its ability to learn complex patterns from data, has long been hailed as a game changer in artificial intelligence. However, its effectiveness depends on large amounts of labeled training data, and producing those labels is resource-intensive. You can learn more about deep learning in our article "Machine Learning and Deep Learning: Learn the Differences."

Active learning, on the other hand, offers a solution: by strategically selecting the most informative samples for annotation, it reduces the labeling burden.

By combining the strengths of deep learning with the efficiency of active learning in the context of foundation models, researchers are opening up new directions in AI research and applications. Foundation models, such as OpenAI's GPT-3 and Google's BERT, are pre-trained on huge datasets and show remarkable capabilities in natural language processing and other domains with minimal fine-tuning.

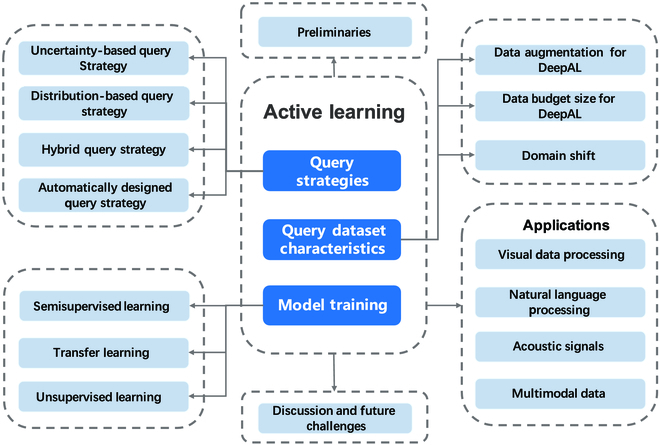

Fig. 1 Schematic structure of DeepAL

Deep active learning strategies fall into four types: uncertainty-based, distribution-based, hybrid, and automatically designed. Uncertainty-based strategies focus on samples the model is most uncertain about, while distribution-based strategies prioritize representative samples. Hybrid approaches combine both criteria, and automatically designed strategies use meta-learning or reinforcement learning to select samples adaptively.
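To make the first three strategy types concrete, here is a minimal NumPy sketch. It assumes a classifier has already produced class probabilities (`probs`) for the unlabeled pool and, for the hybrid score, feature embeddings for the pool and for the already labeled samples. The function names, the distance-to-labeled-set proxy, and the equal-weight `alpha` blend are illustrative assumptions, not the formulations from the review. Automatically designed strategies are not shown, since they learn the selection rule itself.

```python
import numpy as np


def entropy_scores(probs: np.ndarray) -> np.ndarray:
    """Uncertainty-based: predictive entropy per sample; probs is (n_samples, n_classes)."""
    eps = 1e-12  # guards against log(0)
    return -np.sum(probs * np.log(probs + eps), axis=1)


def margin_scores(probs: np.ndarray) -> np.ndarray:
    """Uncertainty-based: negated gap between the two most likely classes (higher = more uncertain)."""
    top2 = np.sort(probs, axis=1)[:, -2:]
    return -(top2[:, 1] - top2[:, 0])


def _min_max(x: np.ndarray) -> np.ndarray:
    """Rescale scores to [0, 1] so that different criteria can be mixed."""
    span = x.max() - x.min()
    return (x - x.min()) / span if span > 0 else np.zeros_like(x)


def hybrid_scores(probs, embeddings, labeled_embeddings, alpha=0.5):
    """Hypothetical hybrid: blend entropy (uncertainty) with the distance to the
    nearest labeled sample (a simple proxy for regions the labeled set does not cover)."""
    uncertainty = entropy_scores(probs)
    dists = np.linalg.norm(
        embeddings[:, None, :] - labeled_embeddings[None, :, :], axis=2
    ).min(axis=1)
    return alpha * _min_max(uncertainty) + (1 - alpha) * _min_max(dists)


def select_top_k(scores: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k highest-scoring samples, i.e. the ones to send for annotation."""
    return np.argsort(-scores)[:k]


probs = np.array([[0.98, 0.01, 0.01],
                  [0.34, 0.33, 0.33],
                  [0.60, 0.40, 0.00]])
print(select_top_k(entropy_scores(probs), 1))  # [1]
```

The nearly uniform second sample is picked first by both entropy and margin. Setting `alpha` closer to 0 shifts the hybrid score toward coverage of the embedding space rather than model uncertainty.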

For model training, the researchers discuss integrating deep active learning with existing methods such as semi-supervised learning, transfer learning, and unsupervised learning to optimize training. The review emphasizes the need to extend deep active learning beyond task-specific models to comprehensive foundation models for more effective AI training.
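One common way to pair active learning with semi-supervised learning is to send the least confident samples to human annotators while assigning pseudo-labels to samples the model is already very confident about. The sketch below assumes softmax outputs `probs` for the unlabeled pool and an arbitrary confidence threshold of 0.95; `split_pool` is a hypothetical helper, not an API or method taken from the review.

```python
import numpy as np


def split_pool(probs: np.ndarray, query_k: int, pseudo_threshold: float = 0.95):
    """Hypothetical helper pairing active learning with pseudo-labeling.

    Returns (query_idx, pseudo_idx, pseudo_labels):
      query_idx     -> least confident samples, sent to human annotators
      pseudo_idx    -> samples confident enough to label automatically
      pseudo_labels -> the model's predicted class for each pseudo_idx entry
    """
    confidence = probs.max(axis=1)
    query_idx = np.argsort(confidence)[:query_k]   # lowest confidence first
    mask = confidence >= pseudo_threshold
    mask[query_idx] = False                        # never pseudo-label a queried sample
    pseudo_idx = np.flatnonzero(mask)
    pseudo_labels = probs[pseudo_idx].argmax(axis=1)
    return query_idx, pseudo_idx, pseudo_labels


probs = np.array([[0.99, 0.01],
                  [0.50, 0.50],
                  [0.20, 0.80],
                  [0.03, 0.97]])
q, p, pl = split_pool(probs, query_k=1)
print(q, p, pl)  # [1] [0 3] [0 1]
```

Because pseudo-labels can be wrong, a cautious design is to use them only for the current training round and recompute them after the next model update, while human labels are kept permanently.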

One of the main advantages of integrating deep learning with active learning is a significant reduction in annotation effort. By leveraging the rich knowledge encoded in foundation models, active learning algorithms can intelligently select the samples that offer the most valuable information, streamlining annotation and accelerating model training.
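In practice, a foundation model often contributes to selection through its embeddings. As an illustration of a distribution-based rule that could run on such embeddings, here is a greedy k-center (core-set style) selector. It assumes `embeddings` were precomputed by some frozen pre-trained encoder; the code itself works with any feature vectors. `k_center_greedy` is a hypothetical name, and because distances are computed densely this version only suits modest pool sizes.

```python
import numpy as np


def k_center_greedy(embeddings: np.ndarray, labeled_idx, budget: int):
    """Greedy k-center selection: repeatedly pick the point farthest from
    every already chosen or labeled point, so the picks cover the space.

    embeddings  -> (n, d) array, assumed to come from a frozen pre-trained encoder
    labeled_idx -> indices that are already labeled
    budget      -> number of new samples to select
    """
    n = embeddings.shape[0]
    budget = min(budget, n - len(labeled_idx))  # cannot pick more than the pool holds
    if len(labeled_idx) > 0:
        # distance from every point to its nearest labeled point
        min_dist = np.linalg.norm(
            embeddings[:, None, :] - embeddings[labeled_idx][None, :, :], axis=2
        ).min(axis=1)
    else:
        min_dist = np.full(n, np.inf)
    selected = []
    for _ in range(budget):
        idx = int(np.argmax(min_dist))
        selected.append(idx)
        # the new center may now be the nearest one for some points
        new_dist = np.linalg.norm(embeddings - embeddings[idx], axis=1)
        min_dist = np.minimum(min_dist, new_dist)
    return selected


pool = np.array([[0.0, 0.0], [1.0, 0.0], [10.0, 0.0], [10.0, 1.0], [5.0, 5.0]])
print(k_center_greedy(pool, labeled_idx=[0], budget=2))  # [3, 4]
```

After picking the far cluster at index 3, the rule skips its near neighbor at index 2 and moves to the uncovered point at index 4, which is the diversity behavior distribution-based strategies aim for.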

In addition, combining these methodologies improves model performance. Active learning helps ensure that the labeled training data are diverse and representative, which leads to better generalization and higher model accuracy. And because foundation models provide strong starting points, active learning can exploit the rich representations they learned during pre-training, resulting in more robust AI systems.

Cost-effectiveness is another important benefit. By reducing the need for intensive manual annotation, active learning significantly lowers the overall cost of developing and deploying models. This democratizes access to advanced AI technologies, making them available to a wider range of organizations and individuals.

Furthermore, the real-time feedback loop enabled by active learning supports iterative refinement and continuous learning. As the model interacts with users to select and label samples, it refines its understanding of the data distribution and adapts its predictions accordingly. This dynamic feedback mechanism makes AI systems more agile and responsive, enabling them to evolve alongside changing data landscapes.
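The feedback loop can be simulated in a few lines. This is a toy sketch under stated assumptions: a nearest-centroid classifier with a softmax over negative squared distances stands in for a deep network, a stored label array plays the role of the human annotator, and least-confidence sampling picks each batch. All function names are hypothetical, and the initial labeled set must contain at least one example of every class because `fit_centroids` averages per class.

```python
import numpy as np


def fit_centroids(X, y, n_classes):
    """Toy 'model': one mean vector per class (every class must be present)."""
    return np.stack([X[y == c].mean(axis=0) for c in range(n_classes)])


def predict_proba(X, centroids, temperature=1.0):
    """Softmax over negative squared distances to the class centroids."""
    d = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    logits = -d / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


def active_learning_loop(X, oracle_labels, init_idx, rounds, batch_size, n_classes):
    """Pool-based loop: train, score the unlabeled pool, query, repeat.
    oracle_labels simulates the annotator; the loop only reads labels
    at indices that have been queried."""
    labeled = list(init_idx)
    for _ in range(rounds):
        centroids = fit_centroids(X[labeled], oracle_labels[labeled], n_classes)
        unlabeled = np.setdiff1d(np.arange(len(X)), labeled)
        if unlabeled.size == 0:
            break
        confidence = predict_proba(X[unlabeled], centroids).max(axis=1)
        query = unlabeled[np.argsort(confidence)[:batch_size]]  # least confident
        labeled.extend(query.tolist())  # the "annotator" reveals these labels
    centroids = fit_centroids(X[labeled], oracle_labels[labeled], n_classes)
    return labeled, centroids


rng = np.random.default_rng(0)
X = np.vstack([rng.normal([-3.0, 0.0], 1.0, size=(50, 2)),
               rng.normal([3.0, 0.0], 1.0, size=(50, 2))])
y = np.array([0] * 50 + [1] * 50)
labeled, centroids = active_learning_loop(X, y, init_idx=[0, 50], rounds=3,
                                          batch_size=2, n_classes=2)
accuracy = (predict_proba(X, centroids).argmax(axis=1) == y).mean()
print(len(labeled), accuracy)
```

Each round retrains on everything labeled so far before scoring the pool again, which is the "interact, label, adapt" cycle described above. In a real system the training step and the annotator would be far more expensive, which is exactly why the query step tries to spend each label well.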

However, challenges remain in realizing the full potential of deep learning and active learning with foundation models. Accurately estimating model uncertainty, choosing the right experts for annotation, and designing effective active learning strategies are key areas that require further exploration and innovation.
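On the uncertainty-estimation challenge: a single softmax output is often overconfident, so one widely used alternative is to run several stochastic forward passes (for example with dropout kept active at inference, or across ensemble members) and score each sample by the mutual information between its prediction and the model parameters, as in BALD. The sketch assumes those passes are already stacked into an array `mc_probs`; it is one option among many, not the method proposed in the review.

```python
import numpy as np


def entropy(p: np.ndarray, axis: int = -1) -> np.ndarray:
    """Shannon entropy (nats) along the class axis."""
    return -np.sum(p * np.log(p + 1e-12), axis=axis)


def bald_scores(mc_probs: np.ndarray) -> np.ndarray:
    """BALD-style mutual information.

    mc_probs has shape (T, n_samples, n_classes): class probabilities from
    T stochastic forward passes (e.g. MC dropout or ensemble members).
    """
    predictive = entropy(mc_probs.mean(axis=0))  # entropy of the averaged prediction
    expected = entropy(mc_probs).mean(axis=0)    # average entropy of each individual pass
    return predictive - expected                 # large only when the passes disagree


mc_probs = np.array([
    [[0.5, 0.5], [1.0, 0.0]],  # pass 1
    [[0.5, 0.5], [0.0, 1.0]],  # pass 2
])
print(bald_scores(mc_probs))
```

Both samples have the same averaged prediction, yet the first scores about 0 (every pass agrees the input is ambiguous) while the second scores about ln 2 (the passes confidently disagree). That distinction between noise in the data and the model's own lack of knowledge is what plain entropy cannot make.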

In summary, the convergence of deep learning and active learning in the era of foundation models marks a significant milestone in AI research and applications. By harnessing the capabilities of foundation models and the efficiency of active learning, researchers and practitioners can make model training more efficient, improve performance, and drive innovation across diverse domains.