Author(s): Towards AI Editorial Team

Originally published on Towards AI.

Our AI team participated in COLM 2025 this year. In this piece, François Huppé-Marcoux, one of our AI engineers, shares the “aha” moment that changed his view of reasoning in LLMs.

We talk about “reasoning” as if it were a universal upgrade: add more steps, get a better answer. But reasoning is not one thing. Sometimes it's silent, automatic pattern matching that lets you spot a friend in a crowd. Sometimes it's deliberate, step-by-step analysis: checking assumptions, connecting the dots, testing edge cases. Both are reasoning. It is a mistake to treat only the slow, verbal form as “real”.

Everyday life makes the split obvious. You don't narrate how to catch a tossed set of keys; your body just does it. You do narrate your way through your taxes. In the first case, forcing constant commentary would make things worse; in the second, not writing down the steps would be reckless. The skill is not “always think more.” It is knowing when thinking out loud helps and when it injects noise.

Large language models tempt us to ask for “step by step” on everything. This sounds prudent, but it can degenerate into cargo-cult analysis: extra words in place of better judgment. If the task is primarily matching or recognition, requesting a verbal train of thought can distract the system (and us) from the signal we actually need. For multi-constraint problems, on the other hand, omitting the structure produces confident nonsense.

I learned this the hard way mid-lecture, with a room full of people humming their answers while I was still mentally building the proof.

I attended Tom Griffiths' COLM 2025 talk on neuroscience and LLMs. He argued that reasoning is not always beneficial for LLMs. I was surprised by this. Reasoning is generally assumed to enhance a model's capabilities by letting it generate intermediate thoughts before the actual response.

However, during the talk, he ran an experiment that changed my mind and the way I prompt LLMs. He showed strings of characters from an invented language with hidden rules specifying which letters could appear before or after others. He then showed two candidate words and asked which one fit the language.

I began to analyze the problem, considering the tools available to solve it. I remembered the compiler class where we built a small programming language from scratch, and the concept of a deterministic finite automaton (DFA): a way to define which strings are valid in a given language. For example, in Python you cannot start a variable name with a digit. We use automata when parsing code to raise compilation errors such as “missing semicolon” (in languages that use them) or “incorrect indentation”.
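
To make the DFA idea concrete, here is a minimal sketch of an automaton that accepts identifier-like strings. It is a simplification made for illustration: it only handles ASCII (real Python identifiers also allow many Unicode letters), and the state names, `char_class`, and `accepts` are hypothetical names, not part of any library.

```python
# Toy DFA: accepts ASCII identifier-like strings (a letter or underscore first,
# then letters, digits, or underscores). All names here are illustrative.

def char_class(ch: str) -> str:
    """Map a character to the input class the DFA transitions on."""
    if ch == "_" or (ch.isascii() and ch.isalpha()):
        return "letter"
    if ch.isascii() and ch.isdigit():
        return "digit"
    return "other"

# (current state, input class) -> next state. Missing entries mean "reject".
TRANSITIONS = {
    ("start", "letter"): "ident",
    ("ident", "letter"): "ident",
    ("ident", "digit"): "ident",
}
ACCEPTING = {"ident"}

def accepts(text: str) -> bool:
    """Run the DFA over text and report whether it ends in an accepting state."""
    state = "start"
    for ch in text:
        state = TRANSITIONS.get((state, char_class(ch)))
        if state is None:  # no valid transition: the string is not in the language
            return False
    return state in ACCEPTING
```

With this table, `accepts("count1")` is true while `accepts("1count")` is false, because there is no transition out of `start` on a digit.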

So I started with the first word, checking whether the letter sequences it contained appeared in the sample strings. Halfway through, I heard the room go “Hmm!” I had completely missed that the presenter asked everyone to hum if they thought the first word was correct. I was surprised that people responded so quickly. Was I just slow? Or had I misunderstood the exercise?
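
The slow, explicit check I was attempting in my head looks roughly like the sketch below. It assumes the hidden rules can be approximated by which adjacent letter pairs occur in the samples, which is my own simplification; the sample strings and function names are made up for illustration and are not the ones shown in the talk.

```python
def bigrams(word: str) -> set[str]:
    """Return the set of adjacent letter pairs in a word."""
    return {word[i:i + 2] for i in range(len(word) - 1)}

def unseen_bigrams(candidate: str, samples: list[str]) -> list[str]:
    """List the letter pairs in candidate that never occur in the samples."""
    seen: set[str] = set()
    for sample in samples:
        seen |= bigrams(sample)
    return sorted(bigrams(candidate) - seen)

# Hypothetical sample strings from an invented language.
samples = ["vxm", "xmt", "mtv"]

print(unseen_bigrams("vxmt", samples))  # no unseen pairs: plausible word
print(unseen_bigrams("vmxt", samples))  # several unseen pairs: likely invalid
```

A candidate with no unseen pairs is consistent with the samples; one with unseen pairs is suspicious. That gives certainty and an explanation, but only after walking through every pair.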

A little later I realized that no one else had approached it the way I did. People answered without certainty, just looking and guessing. The room almost immediately hummed for option one, and they were right. I got there later, with certainty and an explanation, but I was slow.

The lesson was not that rigor is bad; it is that deliberation has a price, and sometimes recognizing a pattern quickly is the winning strategy.

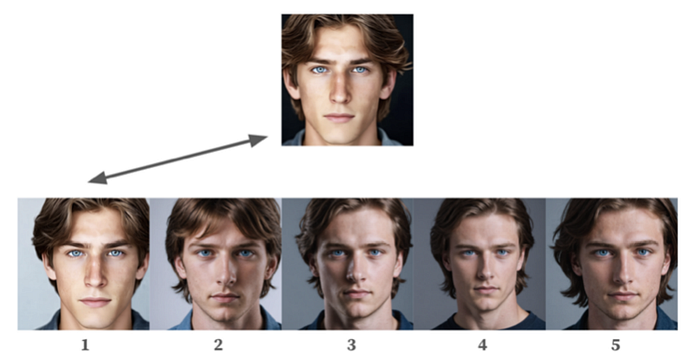

Griffiths took this further with an example from vision-language models (VLMs). He found that VLMs performed better on certain tasks without explicit “thinking”. In one experiment, he showed a vision-language model multiple faces and asked it to identify which of them matched the input image.

He found that both the model and people performed better when they did not engage in verbal reasoning.

In psychology and neuroscience, there is a well-known distinction between System 1 and System 2 thinking, popularized by Daniel Kahneman's Thinking, Fast and Slow. Fast thinking (System 1) is effortless and always active: recognizing a face, answering 2+2=4, or performing practiced sports movements. There is little conscious deliberation, even though the brain is actively responding. Slow thinking (System 2) is what we use when we need to work through information mentally, such as solving “37*13” or doing a letter-by-letter analysis to find which sequences violate the language's rules.

Over the years, this concept has been applied to artificial intelligence. Models can match images better when “thinking” is turned off. As with humans, asking the model to describe facial features in words (e.g. green eyes, brown hair, small nose) can add noise compared with simply comparing the images.

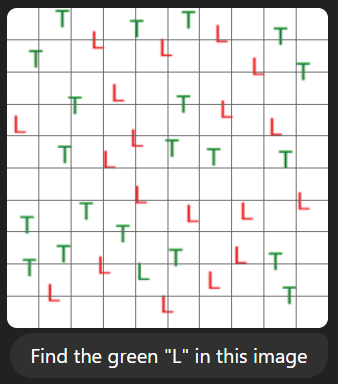

Let's do one last experiment to make the mechanics obvious: on the left side, “find the green dot”; on the right side, “find the green L”. The first is effectively an OR search, i.e. any green thing will do. The second is an AND search, which means the target must be both an L AND green. As the number of elements increases, accuracy remains high for OR but drops sharply for AND. In plain language: the more conditions you combine, the more the model benefits from (and sometimes requires) deliberate “thinking”, and the more fragile it becomes if you don't provide it.

With the left task, you can see how quickly it is solved; there is no need to consider each item. The right task is not that simple: we have to check each letter one by one to find the green “L”. Here, Griffiths distinguishes between two types of search:

Disjunctive search (left image): Search with an “OR” condition. Here the only condition is “find the green circles”. This is a broader search where meeting any one condition is enough.

Conjunctive search (right image): Search with an “AND” condition. Here we are looking for “L” AND green. This is a narrower search where all conditions must be met.
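
The OR/AND distinction above can be written down as two tiny predicates. This is only a sketch of the logical difference, not of how a VLM processes an image; the `Item` type, the condition functions, and the example scene are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Item:
    shape: str
    color: str

Condition = Callable[[Item], bool]

def is_green(item: Item) -> bool:
    return item.color == "green"

def is_l(item: Item) -> bool:
    return item.shape == "L"

def or_search(items: list[Item], conditions: list[Condition]) -> list[Item]:
    """Keep items that satisfy at least one condition (broader)."""
    return [it for it in items if any(cond(it) for cond in conditions)]

def and_search(items: list[Item], conditions: list[Condition]) -> list[Item]:
    """Keep items that satisfy every condition (narrower)."""
    return [it for it in items if all(cond(it) for cond in conditions)]

# Hypothetical scene with distractors that share one feature with the target.
scene = [Item("L", "red"), Item("T", "green"), Item("L", "green"), Item("T", "red")]

print(or_search(scene, [is_green, is_l]))
print(and_search(scene, [is_green, is_l]))
```

In the AND case, every distractor that shares one feature with the target (a red L, a green T) has to be ruled out on the other feature, which is why adding items makes the search harder.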

He found that for disjunctive search, VLM accuracy does not deteriorate as the number of objects increases, whereas conjunctive search accuracy drops significantly for larger numbers of objects. I quote:

This pattern suggests interference from processing multiple items simultaneously.

You don't need to remember the terms “conjunction” and “disjunction”; what matters is this: the more instructions or steps you add to the prompt, the more thinking tokens you will need. It's quite intuitive, no surprise here.

What does this mean for daily prompting?

First of all, thinking is not free. Asking for a train of thought (or using “reasoning mode”) consumes tokens, time, and often the model's intuitive advantage. Secondly, not all tasks require it. Simple classification, direct lookup, and pure matching often work best with minimal scaffolding: “answer in one line”, “choose A or B”, “return the ID”, “choose the closest example”. Save step-by-step reasoning for problems that really have many constraints or multi-step dependencies: data transformations, multi-hop inference, policy checking, tool choreography, and long statements with delicate edge cases.

A rule of thumb I've adopted since this conversation:

- If the task is mainly about recognition, retrieval, or matching, prefer fast mode: concise prompts, no forced explanations, clear output constraints.

- If the task is to compose, transform, or verify against several conditions, enable deliberate reasoning by asking the model to plan (briefly) before responding or by breaking the workflow down into clear steps.
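
The rule of thumb above can be sketched as a small routing helper. Everything here is an assumption made for illustration: the task labels, the mode names `"fast"` and `"deliberate"`, and the prompt wording are hypothetical, and no particular LLM API is implied.

```python
# Hypothetical task labels, taken from the two bullets above.
FAST_KINDS = {"recognition", "retrieval", "matching"}
DELIBERATE_KINDS = {"compose", "transform", "verify"}

def choose_mode(task_kind: str, num_conditions: int = 1) -> str:
    """Pick a prompting mode from the task type and the number of AND-ed conditions."""
    if task_kind not in FAST_KINDS | DELIBERATE_KINDS:
        raise ValueError(f"unknown task kind: {task_kind!r}")
    if task_kind in DELIBERATE_KINDS or num_conditions > 1:
        return "deliberate"
    return "fast"

def build_prompt(question: str, mode: str) -> str:
    """Append mode-specific instructions to the question."""
    if mode == "fast":
        return f"{question}\nAnswer in one line. Do not explain."
    if mode == "deliberate":
        return (
            f"{question}\n"
            "Briefly plan the steps, then give the final answer on its own line."
        )
    raise ValueError(f"unknown mode: {mode!r}")

print(build_prompt("Which product ID matches this photo?", choose_mode("matching")))
print(build_prompt("Does this refund meet all three policy rules?", choose_mode("verify", 3)))
```

The point of the sketch is the decision, not the exact wording: one explicit place where you choose whether a request pays for deliberation.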

The trade-off is the same as in that lecture hall. Skipping deliberation may be quicker and is often the right call, but you lose certainty and justification. Adding deliberation provides traceable logic and improved reliability in the face of complexity, at the cost of latency (and sometimes creativity). Decide when that cost is worth paying.

Clearer Mind Checklist (No Exaggeration)

- Does success come down to recognizing a pattern? Use fast mode.

- Are there many ANDs to satisfy? Use deliberate mode.

- Do I need justification for auditability? Ask for a short, structured explanation – after the answer, not before.

- Am I adding instructions just to feel safer? Delete them and test again.

I still like my DFA instincts. They are great when accuracy is important. But that day, the humming crowd had the right intuition: sometimes the best prompt is the shortest one, and the best “reasoning” is no reasoning at all.

Key takeaway

The next time you turn on thinking mode in your favorite LLM application and forget it's still on, remember: the model may perform better in no-think mode, depending on the task. We humans are already quite good at fast thinking and do it effortlessly. We should usually reserve LLMs for tasks that require reasoning. Still, it's worth asking an LLM for intuitive ideas you may not have considered.

P.S. If you got the gist of this post, that's enough. You don't have to overthink it!

https://arxiv.org/pdf/2503.13401
