Author: Mishtert T

Originally published on Towards AI.

As someone who has spent countless hours debugging code that seemed perfect at first glance, I have learned that AI coding tools can be both a blessing and a curse.

The question is not whether these tools make us faster; they do.

The real question is whether they make us better.

Cursor and GitHub Copilot are two examples of modern AI coding assistants that can significantly shorten development cycles. However, empirical research shows that 25-50% of initial AI suggestions contain functional or security defects.

Achieving First-Time-Right (FTR) code, meaning code that compiles, passes tests, meets standards, and is production-ready on first approval, requires disciplined practices that go beyond simply accepting AI output.

This technical playbook, written from my perspective, translates research findings and experience into habits, guardrails, and automation patterns. These elements help every engineer consistently ship FTR code while maintaining productivity.

Why First-Time-Right matters

First-Time-Right emerged from manufacturing floors, where discovering defects after production meant scrapping an entire batch or running an expensive recall. But wait, is software really the same? I think software may be worse.

In my experience, one overlooked vulnerability may persist for years. Manufacturing defects affect individual products, but code flaws can affect every user and every transaction, indefinitely.

Let me break down why this matters so much in practice:

- Debugging cycles destroy momentum: I have been there. You are in a state of flow, and then boom, you are pulled back into code you wrote last week. Studies show that interruptions take 30 to 45 minutes to recover from, but to be honest, that seems conservative once you factor in mental context switching

- Security holes compound over time: This really keeps me up at night. Manufacturing defects are contained, but vulnerabilities in code? Studies reveal that almost half of AI-generated code snippets contain weaknesses listed in the CWE

- Erosion of trust creates team friction: I have seen this happen firsthand. When AI suggestions consistently fail, developers begin to second-guess everything. Pipeline congestion compounds the damage; every failed run steals another 12 minutes from the team's flow

I need to clarify something important. FTR is not about achieving perfection on the first attempt. That would be impossible and, to be honest, paralyzing. It is about establishing processes that catch problems before they escape to production.

The hidden cost of “good enough” code

A team member used GitHub Copilot to generate an authentication function. The code compiled, looked professional, and passed basic tests. Two weeks later, we discovered it had a vulnerability that could expose user data. The 15 minutes saved during coding stretched into 6 hours of emergency patching.

This pattern repeats across the industry. It is not a small problem; it is a systemic problem that requires systematic solutions.

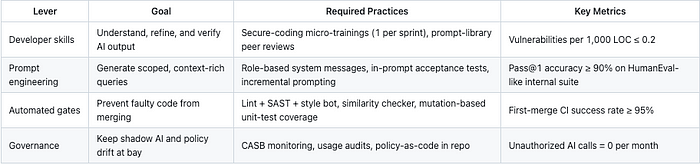

The anatomy of First-Time-Right in AI-augmented workflows

My experience with AI coding tools taught me that success requires discipline, not just better prompts. The idea that prompts alone are enough is only partly true. Better prompts help enormously, but they are only one piece of the puzzle.

The most effective approach I have found combines four elements:

- Prompting: Break complex functions into 15-20 line increments instead of asking for full functions

- Mandatory explanation: Never commit AI-generated code until you can explain its logic and edge cases

- Automated verification: Use static analysis and mutation testing tools to catch problems before human review

- Iterative improvement: Ask the AI to explain its code, then optimize based on that explanation

Teams that succeed with AI coding tools treat them as junior developers who need supervision, not as magic code generators.

1. Precision prompt engineering 🎯

1.1 Adopt role-anchored system messages

Start each Copilot Chat or Cursor session with a reusable system prompt that enforces organizational conventions:

You are a Senior <LANG> engineer in <ORG>.
Generate secure, idiomatic, unit-tested code that follows <STYLE_GUIDE_URL>.
Never expose secrets; prefer dependency injection; include docstring and pytest-style tests.

An internal Microsoft study shows that explicit role framing increases the correct-response rate by 18-24 points.
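One way to keep the system prompt reusable is to store it once and fill in the placeholders per project. The sketch below is a minimal illustration, not a prescribed implementation; the function name `build_system_prompt` and the sample values are assumptions made for this example.

```python
from string import Template

# Template text taken from the role-anchored prompt above; the $-placeholders
# stand in for <LANG>, <ORG> and <STYLE_GUIDE_URL>.
SYSTEM_PROMPT = Template(
    "You are a Senior $lang engineer in $org.\n"
    "Generate secure, idiomatic, unit-tested code that follows $style_guide_url.\n"
    "Never expose secrets; prefer dependency injection; include docstring and "
    "pytest-style tests."
)


def build_system_prompt(lang: str, org: str, style_guide_url: str) -> str:
    """Fill the shared template (hypothetical helper).

    Template.substitute is strict: if someone adds a new placeholder to the
    template without supplying a value here, it raises KeyError instead of
    leaking a raw placeholder into the prompt.
    """
    return SYSTEM_PROMPT.substitute(
        lang=lang, org=org, style_guide_url=style_guide_url
    )


if __name__ == "__main__":
    # Sample values are placeholders, not real organizational settings.
    print(build_system_prompt("Python", "ExampleCorp", "https://example.com/style-guide"))
```

Keeping the template in version control means every session starts from the same conventions, and changes to it go through review like any other code.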

1.2 Break work into atomic prompts

Large “one-shot” feature prompts invite hallucinations and style drift. The Qodo 2025 survey showed that 65% of low-quality responses result from missing context.

Break functions into 15-20 line increments:

- Stub signature: Define the function name, types, and docstring skeleton.

- Implementation prompt: Provide the signature plus comments outlining each branch.

- Test prompt: Ask for parameterized unit tests that exercise edge cases.
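The three steps above might produce something like the following. This is a sketch built around a hypothetical `parse_port` function, chosen only because it fits in one 15-20 line increment; the signature and docstring come from the stub step, the branch comments from the implementation prompt, and the case tables from the test prompt.

```python
def parse_port(raw: str) -> int:
    """Parse a TCP port number from user input (hypothetical example).

    Raises:
        ValueError: if raw is not an integer in the range 1-65535.
    """
    # Branch 1: reject anything that is not a plain non-negative integer.
    text = raw.strip()
    if not text.isdigit():
        raise ValueError(f"not a port number: {raw!r}")
    # Branch 2: reject values outside the valid port range.
    port = int(text)
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    # Branch 3: valid input.
    return port


# Table-driven cases, the plain-Python equivalent of a parameterized test.
VALID_CASES = [("80", 80), (" 443 ", 443), ("65535", 65535)]
INVALID_CASES = ["", "0", "65536", "-1", "http"]


def run_edge_case_checks() -> None:
    """Run every case; raise AssertionError on the first mismatch."""
    for raw, expected in VALID_CASES:
        assert parse_port(raw) == expected, raw
    for raw in INVALID_CASES:
        try:
            parse_port(raw)
        except ValueError:
            continue
        raise AssertionError(f"expected ValueError for {raw!r}")


if __name__ == "__main__":
    run_edge_case_checks()
```

With pytest available, the two case tables map directly onto `pytest.mark.parametrize`.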

1.3 Prompt with acceptance tests

Embed Gherkin-style scenarios or examples directly in the prompt:

# Examples

# add(2,3) -> 5

# add(-4,4) -> 0

LLMs conditioned on example-based prompts reach up to 1.9% higher first-pass accuracy (pass@1) and use 74.8% fewer tokens than chain-of-thought (CoT) prompting on HumanEval.
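The same examples that go into the prompt can double as a quick check on whatever the model returns. The sketch below assumes the `name(args) -> expected` comment format shown above and literal arguments only; `parse_examples` and `failing_examples` are hypothetical helpers, not part of any tool.

```python
import ast
import re
from typing import Any, Callable, Dict, List, Tuple

# Matches comment lines such as "# add(2,3) -> 5".
EXAMPLE_RE = re.compile(r"#\s*(\w+)\((.*)\)\s*->\s*(.+)")

PROMPT_EXAMPLES = """\
# Examples
# add(2,3) -> 5
# add(-4,4) -> 0
"""

Case = Tuple[str, Tuple[Any, ...], Any]


def parse_examples(prompt: str) -> List[Case]:
    """Extract (function name, args, expected) from example comments."""
    cases: List[Case] = []
    for line in prompt.splitlines():
        match = EXAMPLE_RE.fullmatch(line.strip())
        if match is None:
            continue  # headings and ordinary comments are ignored
        name, raw_args, raw_expected = match.groups()
        # literal_eval only accepts Python literals, so nothing is executed.
        args = ast.literal_eval(f"({raw_args},)") if raw_args.strip() else ()
        cases.append((name, args, ast.literal_eval(raw_expected)))
    return cases


def failing_examples(prompt: str, functions: Dict[str, Callable[..., Any]]) -> List[str]:
    """Run each example against the candidate functions; list the mismatches."""
    failures = []
    for name, args, expected in parse_examples(prompt):
        actual = functions[name](*args)
        if actual != expected:
            failures.append(f"{name}{args} returned {actual!r}, expected {expected!r}")
    return failures


if __name__ == "__main__":
    print(failing_examples(PROMPT_EXAMPLES, {"add": lambda a, b: a + b}))
```

An empty list means the generated function satisfies every example that was embedded in the prompt.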

1.4 Iterative refinement loop

After the initial generation:

- Explain: Ask the model to paraphrase its code to expose hidden flaws.

- Constrain: Ask for optimization (“O(n), not O(n²)”) or security hardening.

- Regenerate: Limit iterations to ≤3 to avoid diminishing returns and waste.
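The loop above can be sketched as a small driver with a hard cap. This is an illustration under stated assumptions: `ask_model` stands in for whatever chat interface you use, `is_acceptable` for your own acceptance check, and the prompt wording is invented for the example.

```python
from typing import Callable, Tuple

MAX_ITERATIONS = 3  # the cap suggested in the list above


def refine(
    code: str,
    ask_model: Callable[[str], str],
    is_acceptable: Callable[[str], bool],
    constraint: str = "O(n), not O(n^2)",
) -> Tuple[str, int]:
    """Explain, constrain, regenerate; stop after MAX_ITERATIONS rounds.

    Returns the final code and the number of regenerations used.
    ask_model and is_acceptable are hypothetical callables supplied by the caller.
    """
    used = 0
    while used < MAX_ITERATIONS and not is_acceptable(code):
        # Explain: have the model paraphrase the code to surface hidden flaws.
        explanation = ask_model(f"Explain this code and its edge cases:\n{code}")
        # Constrain and regenerate, feeding the explanation back in.
        code = ask_model(
            f"Explanation:\n{explanation}\n\n"
            f"Regenerate the code under this constraint: {constraint}\n{code}"
        )
        used += 1
    return code, used
```

If the cap is reached and the code is still not acceptable, that is usually a signal to rewrite the prompt or the function by hand rather than keep iterating.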

2. Developer verification discipline 🔍

2.1 The “explain before accepting” rule

No suggestion should be committed until the developer can explain its flow and edge cases in their own words in the PR description.

This closes the comprehension gaps highlighted in empirical studies, in which 44% of rejected AI code failed because of unclear intent.

2.2 Mandatory test triad

Merges are blocked on any failure, ensuring that defects are caught before review.

2.3 Secure snippet library

Maintain an internal, vetted repository of snippets (patterns for auth, database access, error handling). Developers can reference them in prompts:

Use company::auth::jwt.verify_token() for user validation.

Snippet reuse reduced vulnerability density by 38% in a 6-month pilot.
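A snippet library is easier to use consistently if prompts pull their references from one registry. The sketch below is a minimal illustration: only the `auth` entry comes from the example above, while the `db` entry, the registry name, and the `with_snippet_hints` helper are hypothetical.

```python
from typing import Dict, Iterable

APPROVED_SNIPPETS: Dict[str, str] = {
    # Taken from the example above.
    "auth": "Use company::auth::jwt.verify_token() for user validation.",
    # Hypothetical entry, shown only to illustrate the shape of the registry.
    "db": "Use company::db::session_scope() for database access.",
}


def with_snippet_hints(prompt: str, topics: Iterable[str]) -> str:
    """Append approved snippet references to a prompt.

    Unknown topics raise KeyError so that nobody silently falls back to
    unvetted, model-invented patterns.
    """
    topic_list = list(topics)
    unknown = [t for t in topic_list if t not in APPROVED_SNIPPETS]
    if unknown:
        raise KeyError(f"no approved snippet for: {', '.join(unknown)}")
    hints = "\n".join(APPROVED_SNIPPETS[t] for t in topic_list)
    return f"{prompt}\n\n{hints}" if hints else prompt


if __name__ == "__main__":
    print(with_snippet_hints("Write a login handler.", ["auth"]))
```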

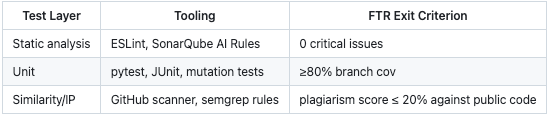

3. Automated quality gates 🚪

3.1 AI-tagging commit hook

A Git hook tags Copilot-generated lines (`# AI-Gen`) using diff heuristics. The SAST pipeline then applies stricter thresholds to the tagged regions (e.g. blocking on high-severity CWEs).
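The second half of this gate, applying a stricter threshold to tagged lines, can be sketched as follows. The severity scale, the two thresholds, and the exact tag spelling `# AI-Gen` are assumptions for illustration; the diff heuristics that add the tag are out of scope here.

```python
from typing import Set

AI_TAG = "# AI-Gen"  # assumed spelling of the tag added by the hook

# Hypothetical severity scale and thresholds, for illustration only.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}
DEFAULT_BLOCK_AT = "critical"  # human-written lines block only on critical findings
AI_BLOCK_AT = "high"           # tagged lines block one level earlier


def tagged_lines(source: str) -> Set[int]:
    """Return the 1-based line numbers that carry the AI tag."""
    return {
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if AI_TAG in line
    }


def should_block(line_number: int, severity: str, ai_lines: Set[int]) -> bool:
    """Decide whether a SAST finding on this line should fail the pipeline."""
    threshold = AI_BLOCK_AT if line_number in ai_lines else DEFAULT_BLOCK_AT
    return SEVERITY_RANK[severity] >= SEVERITY_RANK[threshold]


if __name__ == "__main__":
    sample = "x = 1\nresult = eval(user_input)  # AI-Gen\n"
    ai_lines = tagged_lines(sample)
    print(should_block(2, "high", ai_lines), should_block(1, "high", ai_lines))
```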

3.2 Policy as code

Store YAML rules in `/ai-policy/`.

ai:
  allow_public_code_match: false
  require_tests: true
  secret_scans: true

Pipelines fail fast on policy violations, enforcing uniform governance across repositories.
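A fail-fast policy check could look roughly like this. The sketch works on the already-parsed policy (loading the YAML file is left to whichever parser you use), and both the `ChangeReport` fields and the interpretation of each key are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Dict, List

# Parsed form of the YAML policy above.
POLICY: Dict[str, Dict[str, bool]] = {
    "ai": {
        "allow_public_code_match": False,
        "require_tests": True,
        "secret_scans": True,
    }
}


@dataclass
class ChangeReport:
    """Hypothetical summary of a change, produced by earlier pipeline steps."""
    matches_public_code: bool
    has_tests: bool
    secret_scan_ran: bool
    secrets_found: int = 0


def policy_violations(policy: Dict[str, Dict[str, bool]], report: ChangeReport) -> List[str]:
    """Return every rule the change violates; an empty list means it passes."""
    rules = policy["ai"]
    violations = []
    if not rules.get("allow_public_code_match", True) and report.matches_public_code:
        violations.append("public code match is not allowed")
    if rules.get("require_tests", False) and not report.has_tests:
        violations.append("tests are required")
    if rules.get("secret_scans", False) and (
        not report.secret_scan_ran or report.secrets_found > 0
    ):
        violations.append("secret scan missing or secrets found")
    return violations


if __name__ == "__main__":
    report = ChangeReport(matches_public_code=False, has_tests=False, secret_scan_ran=True)
    problems = policy_violations(POLICY, report)
    # A CI step would exit non-zero here to fail the pipeline.
    print(problems)
```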

3.3 Smart CI parallelism

To save CI minutes, run lightweight lint checks first and cancel the remaining jobs if they fail. This lets large companies reclaim up to 19% of pipeline time.
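The ordering idea can be sketched in a few lines: cheap checks run first, and the expensive jobs only start, in parallel, once those pass. This is an illustration of the control flow, not a CI configuration; the check names in the usage example are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

Check = Callable[[], bool]


def run_pipeline(fast_checks: Dict[str, Check], slow_checks: Dict[str, Check]) -> Dict[str, str]:
    """Run cheap checks in order; skip everything else on the first failure."""
    results: Dict[str, str] = {}
    for name, check in fast_checks.items():
        if check():
            results[name] = "passed"
            continue
        results[name] = "failed"
        # Fail fast: nothing after the failing check is executed.
        for other in list(fast_checks) + list(slow_checks):
            results.setdefault(other, "skipped")
        return results

    # All cheap checks passed, so the expensive jobs run in parallel.
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(check) for name, check in slow_checks.items()}
        for name, future in futures.items():
            results[name] = "passed" if future.result() else "failed"
    return results


if __name__ == "__main__":
    outcome = run_pipeline(
        {"lint": lambda: False},
        {"unit_tests": lambda: True, "sast": lambda: True},
    )
    print(outcome)
```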

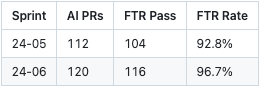

4. Continuous feedback and learning 🔄

4.1 PR tag metrics

Tag merged PRs with `AI-FTR-PASS` or `AI-FTR-FAIL`. Weekly scripts aggregate the results:

Trend dashboards spotlight teams that need coaching.
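A weekly aggregation script might reduce the tags to a pass rate per team. The sketch below assumes each merged PR is available as a `(team, label)` pair and that the labels are spelled `AI-FTR-PASS` and `AI-FTR-FAIL`; how the pairs are fetched from your Git host is left out.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

PASS_LABEL = "AI-FTR-PASS"
FAIL_LABEL = "AI-FTR-FAIL"


def ftr_pass_rates(prs: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Compute the share of FTR passes per team from (team, label) pairs.

    PRs carrying neither label are ignored.
    """
    counts: Dict[str, List[int]] = defaultdict(lambda: [0, 0])  # team -> [passes, total]
    for team, label in prs:
        if label not in (PASS_LABEL, FAIL_LABEL):
            continue
        counts[team][1] += 1
        if label == PASS_LABEL:
            counts[team][0] += 1
    return {team: passes / total for team, (passes, total) in counts.items()}


if __name__ == "__main__":
    # Team names are made up for the example.
    week = [
        ("payments", PASS_LABEL),
        ("payments", FAIL_LABEL),
        ("search", PASS_LABEL),
    ]
    print(ftr_pass_rates(week))
```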

4.2 Chatbot telemetry review

To improve prompt templates, aggregate prompt-completion pairs, anonymize them, and review them once a month. Google Cloud research shows that prompt curation improves future token efficiency by 31%.
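The anonymization step could start with something as simple as the sketch below. It covers only two things, hashing the user identifier and masking email addresses, so treat it as a starting point under those assumptions; the record fields `user`, `prompt`, and `completion` are hypothetical.

```python
import hashlib
import re
from typing import Dict

# Deliberately simple email pattern; it does not cover every valid address.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def anonymize_pair(record: Dict[str, str], salt: str) -> Dict[str, str]:
    """Hash the user id and mask email addresses in a prompt-completion pair."""
    digest = hashlib.sha256((salt + record["user"]).encode("utf-8")).hexdigest()
    return {
        "user": digest[:12],  # stable pseudonym, so per-user trends survive
        "prompt": EMAIL_RE.sub("<email>", record["prompt"]),
        "completion": EMAIL_RE.sub("<email>", record["completion"]),
    }


if __name__ == "__main__":
    pair = {
        "user": "alice",
        "prompt": "Email bob@example.com the report",
        "completion": "send_mail('bob@example.com')",
    }
    print(anonymize_pair(pair, salt="rotate-me"))
```

Secrets, names, and internal hostnames would need their own rules before the pairs are shared for review.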

4.3 Champion roundtables

Nominate one “AI champion” per squad. Monthly sessions share failures and fixes (e.g. context-size hacks, better test prompts). Peer learning improved perceived code quality by 81% among adopters.

5. Quick-reference checklist for engineers ✅

- Use or reference the approved baseline system prompt.

- Limit prompt scope to a target of ≤20 LOC.

- Attach examples and edge cases.

- Prompt the model to explain and then optimize its code.

- Accept or commit only if the explanation is clear.

- Make sure unit tests are generated and pass locally.

- Push; let CI validate SAST, coverage, and similarity checks.

- Review the PR diff; tag a reviewer on AI-generated blocks.

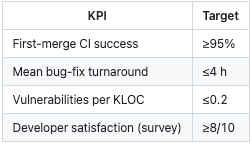

6. Measuring success 📏

Final thoughts

Let me be completely honest here. Implementing FTR practices requires upfront investment. Teams must establish new workflows, train developers in prompt engineering, and configure automated quality gates.

Some organizations may resist this additional overhead. But the alternative, continuing to treat AI-generated code as immediately production-ready, is ultimately more expensive and riskier.

As AI tools become more sophisticated and widely adopted, the volume of potentially problematic code grows exponentially.

The solution is not to abandon AI coding tools. It is to use them more intelligently. First-Time-Right practices provide a framework for doing exactly that, harnessing AI's speed while maintaining human oversight and quality standards.

The goal is not to replace human judgment with AI efficiency. It is to augment human capabilities with AI, creating a development process that is both faster and more reliable than either approach alone.

About the author:

Transformation and AI architect, governance advocate, mediator, and arbitrator

Analytics and transformation leader with over 24 years of experience advising on change, risk management, and technology integration, with an emphasis on corporate governance. As a mentor, Mishtert helps organizations excel in evolving landscapes.

Published via Towards AI