Last updated: October 18, 2025 by the editorial team

Author(s): Hira Ahmad

Originally published on Towards AI.

Less is More: Recursive Reasoning with Tiny Networks (Paper Review)

Modern artificial intelligence often chases scale: deeper layers, more attention, and billions of parameters. But beneath this race lies a quieter revolution: recursive reasoning, the idea that a model can refine its own thoughts, not by getting bigger, but by thinking again.

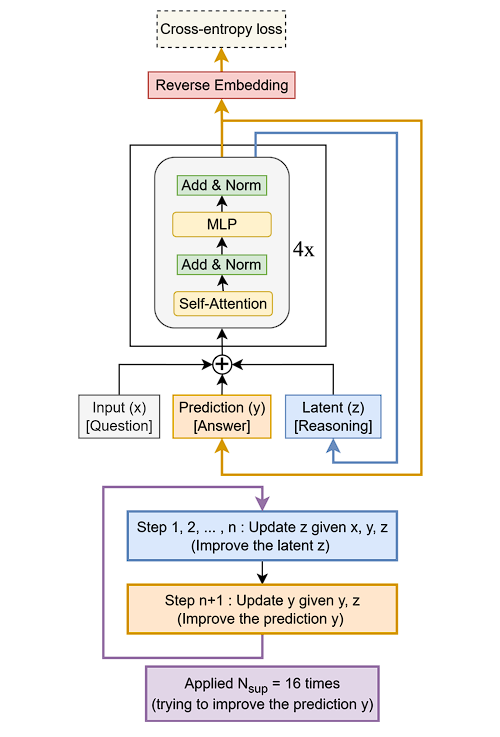

This article examines two AI architectures, Hierarchical Reasoning Models (HRM) and Tiny Recursive Models (TRM), and highlights how both address the challenges of scale in AI reasoning. HRM attempts to simulate depth through its dual-network structure, while TRM combines the advantages of recursion with a simplified design, so that complex tasks can be handled with fewer parameters and greater efficiency. The author emphasizes the importance of adaptive computation and deep supervision in achieving robust reasoning without excessive computational burden, and argues for a shift towards recursion as a means of increasing the reasoning capabilities of artificial intelligence.
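To make the idea of recursion with a small, reused network concrete, here is a minimal inference-only sketch. It is an illustration under stated assumptions, not the paper's implementation: the names `make_tiny_net` and `recursive_refine` are hypothetical, the weights are random rather than trained, and the convergence check is a simple stand-in for a learned adaptive-computation halting rule. Deep supervision, which applies to training, is not shown.

```python
import math
import random


def make_tiny_net(in_dim, out_dim, seed):
    """Build one small tanh layer with fixed random weights.

    Hypothetical stand-in for a trained tiny network.
    """
    rng = random.Random(seed)
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(in_dim)]
               for _ in range(out_dim)]

    def net(vec):
        return [math.tanh(sum(w * v for w, v in zip(row, vec)))
                for row in weights]

    return net


def recursive_refine(x, dim, n_latent_steps=6, max_outer_steps=8, tol=1e-4):
    """Refine an answer y by applying the same small network repeatedly."""
    # One set of parameters is reused at every step, so effective depth
    # comes from recursion rather than from adding layers.
    net = make_tiny_net(3 * dim, dim, seed=0)
    zeros = [0.0] * dim
    y = list(zeros)  # current answer
    z = list(zeros)  # latent "scratchpad" state
    steps_used = 0
    for _ in range(max_outer_steps):
        steps_used += 1
        # Inner loop: update the latent state from input, answer, and latent.
        for _ in range(n_latent_steps):
            z = net(x + y + z)
        # Answer update: the input slot is zeroed (an assumption of this sketch).
        new_y = net(zeros + y + z)
        change = max(abs(a - b) for a, b in zip(new_y, y))
        y = new_y
        # Assumed halting rule: stop early once the answer stops changing.
        if change < tol:
            break
    return y, steps_used


if __name__ == "__main__":
    answer, steps = recursive_refine([0.1, -0.2, 0.3, 0.4], dim=4)
    print(steps, [round(v, 3) for v in answer])
```

The parameter count stays fixed at `3 * dim * dim` no matter how many refinement steps run, which is the trade the summary describes: more computation through repeated passes instead of more parameters.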

Published via Towards AI

Note: The content contains the views of the authors and not Towards AI.