Researchers from the University of Georgia and Massachusetts General Hospital (MGH) have developed a specialized language model, RadiologyLlama-70B, to analyze and generate radiology reports. The model, built on Llama 3-70B, was trained on extensive medical datasets and delivers strong performance in processing radiological findings.

Context and significance

Radiological examinations are a cornerstone of disease diagnosis, but the growing volume of imaging data places a significant burden on radiologists. AI can ease this load, improving both efficiency and diagnostic accuracy. RadiologyLlama-70B represents a key step towards integrating AI into clinical workflows, enabling improved analysis and interpretation of radiology reports.

Training and preparation

The model was trained on a database of over 6.5 million patient medical reports from MGH, covering the years 2008–2018. According to the researchers, these comprehensive reports span a range of imaging modalities and anatomical regions, including CT, MRI, X-ray and fluoroscopic imaging.

The data set includes:

- Detailed radiologist observations (findings)

- Final impressions

- Examination codes indicating imaging techniques such as CT, MRI and X-ray

After thorough preprocessing and de-identification, the final training set consisted of 4,354,321 reports, with an additional 2,114 reports held out for testing. Strict cleaning steps, such as removing invalid records, were applied to reduce the likelihood of “hallucinations” (incorrect outputs).
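The article does not describe the cleaning rules in detail. As a rough illustration only, the following sketch drops records whose fields are empty. The `Report` layout, its field names and the "non-empty" rule are all assumptions made for this example, not the researchers' actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class Report:
    """Hypothetical record layout; the field names are illustrative only."""
    exam_code: str
    findings: str
    impression: str


def is_valid(report: Report) -> bool:
    """Assumed rule: every field must be non-empty after stripping whitespace."""
    fields = (report.exam_code, report.findings, report.impression)
    return all(field.strip() for field in fields)


def clean(reports: list[Report]) -> list[Report]:
    """Keep only the reports that pass the validity check, in original order."""
    return [r for r in reports if is_valid(r)]
```

A real pipeline would likely apply more checks than this (for example, de-identification and format validation), but the filtering pattern would be similar.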

Key technical details

The model has been trained using two approaches:

- Full fine-tuning: adjusting all model parameters.

- QLoRA: a low-rank adaptation method with 4-bit quantization, which improves computational efficiency.
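To give a feel for why the low-rank approach is cheaper, the sketch below compares the number of trainable weights for one projection matrix under full fine-tuning and under a rank-r update, and estimates the memory needed just to store 70 billion weights at 16 and 4 bits. The matrix size (8192 x 8192) and the rank (16) are illustrative assumptions, and the memory figures ignore quantization constants, activations and optimizer state.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable weights for a rank-r update B @ A (A: r x d_in, B: d_out x r)."""
    return rank * (d_in + d_out)


def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


# Illustrative numbers only: matrix size and rank are assumptions.
d = 8192
print(full_params(d, d))           # 67108864
print(lora_params(d, d, rank=16))  # 262144
print(weight_memory_gb(70e9, 16))  # 140.0
print(weight_memory_gb(70e9, 4))   # 35.0
```

Under these assumptions the low-rank update trains 256 times fewer weights for that matrix, and 4-bit storage cuts the weight memory to a quarter of the 16-bit figure.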

Training infrastructure

Training ran on a cluster of 8 NVIDIA H100 GPUs and used:

- Mixed-precision training (BF16)

- Gradient checkpointing for memory optimization

- DeepSpeed ZeRO Stage 3 for distributed training
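For readers unfamiliar with DeepSpeed, a setup like the one listed above is usually expressed as a JSON configuration file. The sketch below builds a minimal one in Python. The key names follow the DeepSpeed configuration schema, but the batch-size values are placeholders chosen for this example, not the settings the researchers used.

```python
import json

# Minimal DeepSpeed-style configuration. The batch-size values below are
# assumed placeholders, not figures reported in the study.
ds_config = {
    "bf16": {"enabled": True},            # BF16 mixed-precision training
    "zero_optimization": {"stage": 3},    # ZeRO Stage 3: shard params, grads, optimizer states
    "train_micro_batch_size_per_gpu": 1,  # assumed value
    "gradient_accumulation_steps": 8,     # assumed value
}

# Gradient checkpointing is normally enabled on the model or trainer side,
# not in this file, so it does not appear here.
config_json = json.dumps(ds_config, indent=2)
print(config_json)
```

The resulting JSON would typically be saved to a file and passed to the training launcher.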

Performance results

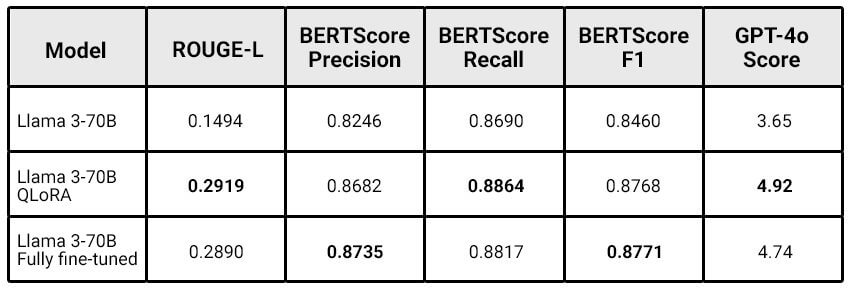

RadiologyLlama-70B significantly outperformed its base model (Llama 3-70B).

QLoRA proved highly efficient, delivering results comparable to full fine-tuning at lower computational cost. The researchers observed that the larger the model, the more it benefits from QLoRA fine-tuning.

Limitations

The study recognizes some challenges:

- No direct comparison with previous models such as Radiology-Llama2.

- The latest Llama 3.1 versions were not used.

- The model can still produce “hallucinations”, which makes it unsuitable for fully autonomous report generation.

Future directions

The research team plans to:

- Train the model on Llama 3.1-70B and explore versions with 405B parameters.

- Share preliminary data for language models.

- Develop tools for detecting “hallucinations” in generated reports.

- Expand the evaluation metrics to include clinically significant criteria.

Application

RadiologyLlama-70B marks significant progress in applying artificial intelligence to radiology. Although it is not ready for fully autonomous use, the model shows great potential to support radiologists by delivering more accurate and relevant results. The study highlights the promise of approaches such as QLoRA for training specialized models for medical applications, paving the way for further innovation in artificial intelligence for healthcare.

For more information, see the full text on arXiv.