Last updated: October 11, 2025 by the editorial team

Author(s): MK

Originally published on Towards AI.

New training method teaches language models to generate reasoning strategies first, improving accuracy by 44% on complex math problems

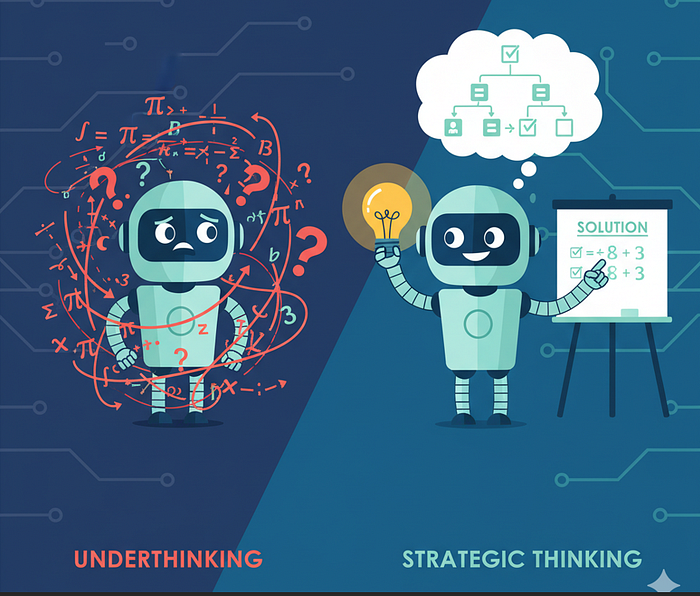

Large language models suffer from a specific problem: they are optimized to generate longer solutions rather than to explore different problem-solving strategies. Researchers often call this longer output “reasoning.”

In this article, the author discusses the limitations of large language models in problem solving, in particular their tendency to favor longer solutions over strategic exploration. The article introduces RLAD (reinforcement learning for abstraction discovery) and describes how it trains AI systems to generate high-level reasoning strategies first, yielding a 44% performance improvement on complex math problems. It also examines the fundamental principles of abstract reasoning, the dual training process behind RLAD, and its implications for strengthening the metacognitive capabilities of AI systems across domains.
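To make the two-part training idea more concrete, here is a minimal sketch of how one rollout could score strategies. It is an illustration under stated assumptions, not the RLAD authors' implementation: plain Python functions stand in for the two LLMs, the names `rollout`, `AbstractionRollout`, `toy_propose` and `toy_solve` are hypothetical, answers are graded by exact match, and the abstraction reward is assumed to be the mean accuracy of solutions conditioned on that abstraction.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List

# Hypothetical interfaces: in RLAD both roles are played by trained LLMs;
# plain functions stand in for them here.
ProposeFn = Callable[[str], List[str]]     # problem -> candidate abstractions
SolveFn = Callable[[str, str], List[str]]  # (problem, abstraction) -> sampled final answers


@dataclass
class AbstractionRollout:
    abstraction: str
    solution_rewards: List[float]  # 0/1 correctness of each sampled solution
    abstraction_reward: float      # assumed: mean solution accuracy under this abstraction


def rollout(problem: str, answer: str, propose: ProposeFn, solve: SolveFn) -> List[AbstractionRollout]:
    """Score each proposed strategy by how often solutions conditioned on it are correct."""
    results = []
    for abstraction in propose(problem):
        answers = solve(problem, abstraction)
        # Solution-generator reward: exact-match correctness (a simplifying assumption).
        rewards = [1.0 if a.strip() == answer else 0.0 for a in answers]
        # Abstraction-generator reward: average success of the solver given this strategy.
        score = mean(rewards) if rewards else 0.0
        results.append(AbstractionRollout(abstraction, rewards, score))
    return results


# Toy stand-ins for the two models (purely illustrative).
def toy_propose(problem: str) -> List[str]:
    return ["Pair terms from both ends of the sequence.", "Add the terms one at a time."]


def toy_solve(problem: str, abstraction: str) -> List[str]:
    if abstraction.startswith("Pair"):
        return ["5050", "5050", "5050", "5050"]
    return ["5050", "4950", "5049", "5050"]


if __name__ == "__main__":
    for r in rollout("Compute 1 + 2 + ... + 100.", "5050", toy_propose, toy_solve):
        print(f"{r.abstraction_reward:.2f}  {r.abstraction}")
```

In this toy run the pairing strategy scores 1.00 and term-by-term addition scores 0.50. In an actual training loop, scores like these would presumably feed separate policy updates for the strategy generator and the solver; the sketch only shows how rewarding strategies by downstream success favors exploring useful approaches over simply writing longer solutions.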

Read the entire blog for free on Medium.

Published via Towards AI

Note: The content contains the views of the authors and not Towards AI.