Robotic Transformer 2 (RT-2) is a novel vision-language-action (VLA) model that learns from both web and robotics data, and translates this knowledge into generalized instructions for robotic control.

High-capacity vision-language models (VLMs) are trained on web-scale datasets, making these systems remarkably good at recognizing visual or language patterns and operating across different languages. But for robots to achieve a similar level of competence, they would need to collect robot data, first-hand, across every object, environment, task, and situation.

In our paper, we introduce Robotic Transformer 2 (RT-2), a novel vision-language-action (VLA) model that learns from both web and robotics data, and translates this knowledge into generalized instructions for robotic control, while retaining web-scale capabilities.

A vision-language model (VLM) pre-trained on web-scale data learns from RT-1 robotics data to become RT-2, a vision-language-action (VLA) model that can control a robot.

This work builds upon Robotic Transformer 1 (RT-1), a model trained on multi-task demonstrations, which can learn combinations of tasks and objects seen in the robotic data. More specifically, our work used RT-1 robot demonstration data that was collected with 13 robots over 17 months in an office kitchen environment.

RT-2 shows improved generalization capabilities, as well as semantic and visual understanding beyond the robotic data it was exposed to. This includes interpreting new commands and responding to user commands by performing rudimentary reasoning, such as reasoning about object categories or high-level descriptions.

We also show that incorporating chain-of-thought reasoning allows RT-2 to perform multi-stage semantic reasoning, such as deciding which object could be used as an improvised hammer (a rock), or which type of drink is best for a tired person (an energy drink).

Adapting VLMs for robot control

RT-2 builds upon VLMs that take one or more images as input and produce a sequence of tokens that, conventionally, represent natural language text. Such VLMs have been successfully trained on web-scale data to perform tasks such as visual question answering, image captioning, or object recognition. In our work, we adapt the Pathways Language and Image model (PaLI-X) and the Pathways Language model Embodied (PaLM-E) to act as the backbones of RT-2.

To control a robot, the model must be trained to output actions. We address this challenge by representing actions as tokens in the model's output, similar to language tokens, and describe actions as strings that can be processed by standard natural language tokenizers, shown here:

Representation of an action string used in RT-2 training. An example of such a string could be a sequence of robot action token numbers, e.g. “1 128 91 241 5 101 127 217”.

The string starts with a flag that indicates whether to continue or terminate the current episode, without executing subsequent commands, and is followed by the commands to change the position and rotation of the end-effector, as well as the desired extension of the robot gripper.
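To make the layout concrete, here is a minimal Python sketch that splits the example string into named fields. It assumes the eight integers are ordered as one terminate flag, three position-change tokens, three rotation-change tokens, and one gripper-extension token; the class and field names are hypothetical and not part of RT-2.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ActionTokens:
    """Integer action tokens in the order described above (names are hypothetical)."""
    terminate: int                          # continue/terminate episode flag
    delta_position: Tuple[int, int, int]    # end-effector position change (x, y, z)
    delta_rotation: Tuple[int, int, int]    # end-effector rotation change (x, y, z)
    gripper: int                            # desired gripper extension


def parse_action_string(text: str) -> ActionTokens:
    """Split a space-separated action string into its eight fields (assumed 1 + 3 + 3 + 1 layout)."""
    tokens = [int(t) for t in text.split()]
    if len(tokens) != 8:
        raise ValueError(f"expected 8 action tokens, got {len(tokens)}")
    return ActionTokens(
        terminate=tokens[0],
        delta_position=(tokens[1], tokens[2], tokens[3]),
        delta_rotation=(tokens[4], tokens[5], tokens[6]),
        gripper=tokens[7],
    )


action = parse_action_string("1 128 91 241 5 101 127 217")
print(action.delta_position)  # (128, 91, 241)
```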

We use the same discretized version of robot actions as in RT-1, and show that converting it to a string representation makes it possible to train VLM models on robotic data, since the input and output spaces of such models don't need to be changed.
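The sketch below illustrates one way to turn continuous action values into such integer tokens and back, using uniform binning. It assumes 256 uniform bins per dimension and hypothetical normalized bounds of [-1, 1] for all seven continuous dimensions; real bounds are robot-specific, and the helper names are illustrative only. The resulting string would then go through the model's ordinary text tokenizer, which is not shown.

```python
from typing import List, Sequence, Tuple

NUM_BINS = 256  # assumption: 256 uniform bins per action dimension
# Hypothetical normalized bounds for the seven continuous dimensions
# (3 position changes, 3 rotation changes, gripper extension).
BOUNDS: List[Tuple[float, float]] = [(-1.0, 1.0)] * 7


def discretize(value: float, low: float, high: float, num_bins: int = NUM_BINS) -> int:
    """Clip `value` to [low, high] and map it to a bin index in [0, num_bins - 1]."""
    clipped = min(max(value, low), high)
    fraction = (clipped - low) / (high - low)
    return min(int(fraction * num_bins), num_bins - 1)  # value == high lands in the last bin


def undiscretize(index: int, low: float, high: float, num_bins: int = NUM_BINS) -> float:
    """Map a bin index back to the centre of its bin."""
    bin_width = (high - low) / num_bins
    return low + (index + 0.5) * bin_width


def encode_action(terminate: int, action: Sequence[float], bounds=BOUNDS) -> str:
    """Terminate flag followed by one integer token per continuous dimension."""
    tokens = [terminate] + [discretize(v, lo, hi) for v, (lo, hi) in zip(action, bounds)]
    return " ".join(str(t) for t in tokens)


def decode_action(text: str, bounds=BOUNDS) -> Tuple[int, List[float]]:
    """Inverse of encode_action, up to half a bin width of quantization error."""
    flag, *rest = (int(t) for t in text.split())
    values = [undiscretize(i, lo, hi) for i, (lo, hi) in zip(rest, bounds)]
    return flag, values


text = encode_action(1, [0.0, -0.5, 0.5, -1.0, 1.0, 0.25, -0.25])
print(text)  # 1 128 64 192 0 255 160 96
print(decode_action(text))
```

Decoding maps each token to the centre of its bin, so the round trip is exact only up to half a bin width per dimension.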

RT-2 architecture and training: We co-fine-tune a pre-trained VLM model on robotics and web data. The resulting model takes in robot camera images and directly predicts actions for a robot to perform.
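Because the model maps camera images directly to action strings, running it on a robot reduces to a closed loop. The following is a hedged Python sketch of such a loop: `policy`, `get_camera_image`, and `apply_action` are hypothetical stand-ins for the model and the robot interface, and treating a flag value of 1 as "terminate" is an assumption, since the source does not say which value means stop.

```python
from typing import Callable, List

# Hypothetical convention: the source does not specify which flag value means "terminate".
TERMINATE_FLAG = 1


def run_episode(
    policy: Callable[[object, str], str],       # hypothetical model call: (image, instruction) -> action string
    get_camera_image: Callable[[], object],     # hypothetical camera interface
    apply_action: Callable[[List[int]], None],  # hypothetical robot interface (receives the 7 action tokens)
    instruction: str,
    max_steps: int = 100,                       # safety cap on episode length
) -> int:
    """Run closed-loop control until the terminate flag appears; return the number of actions applied."""
    applied = 0
    for _ in range(max_steps):
        image = get_camera_image()
        tokens = [int(t) for t in policy(image, instruction).split()]
        if tokens[0] == TERMINATE_FLAG:
            break  # end the episode without executing the remaining tokens
        apply_action(tokens[1:])
        applied += 1
    return applied


# Usage with a scripted stand-in for the model.
scripted = iter([
    "0 128 91 241 5 101 127 217",
    "0 130 90 240 5 100 127 217",
    "1 128 128 128 128 128 128 128",
])
executed: List[List[int]] = []
steps = run_episode(lambda image, instr: next(scripted), lambda: None, executed.append, "pick up the apple")
print(steps)  # 2
```

Stopping on the flag lets the model itself decide when the task is done; `max_steps` only guards against a policy that never emits it.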

Generalization and emergent skills

We performed a series of qualitative and quantitative experiments on our RT-2 models, on over 6,000 robotic trials. Exploring RT-2's emergent capabilities, we first searched for tasks that would require combining knowledge from web-scale data and the robot's experience, and then defined three categories of skills: symbol understanding, reasoning, and human recognition.

Each task required understanding visual-semantic concepts and the ability to perform robotic control to operate on these concepts. Commands such as “pick up the bag about to fall off the table” or “move banana to the sum of two plus one”, where the robot is asked to perform a manipulation task on objects or scenarios never seen in the robotic data, required knowledge translated from web-based data to operate.

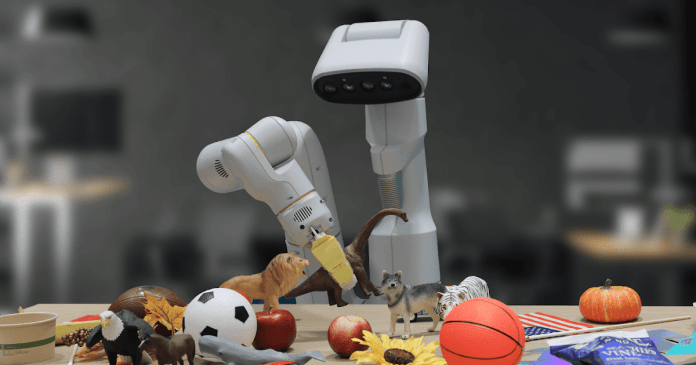

Examples of emergent robotic skills that are not present in the robotics data and require knowledge transfer from web pre-training.

Across all categories, we observed increased generalization performance (more than 3x improvement) compared to previous baselines, such as previous RT-1 models and models like Visual Cortex (VC-1), which were pre-trained on large visual datasets.

Success rates of emergent skill evaluations: our RT-2 models outperform both the previous robotics transformer (RT-1) and visual pre-training (VC-1) baselines.

We also performed a series of quantitative evaluations, beginning with the original RT-1 tasks, for which we have examples in the robot data, and continuing with varying degrees of objects, backgrounds, and environments previously unseen by the robot, which required the robot to learn generalization from VLM pre-training.

Examples of environments previously unseen by the robot, where RT-2 generalizes to novel situations.

RT-2 retained its performance on the original tasks seen in the robot data and improved performance on scenarios previously unseen by the robot, from RT-1's 32% to 62%, showing the considerable benefit of large-scale pre-training.

In addition, we observed significant improvements over baselines pre-trained on visual-only tasks, such as VC-1 and Reusable Representations for Robotic Manipulation (R3M), and over algorithms that use VLMs for object identification, such as Manipulation of Open-World Objects (MOO).

RT-2 achieves high performance on seen in-distribution tasks and outperforms multiple baselines on unseen out-of-distribution tasks.

Evaluating our model on the open-source Language Table suite of robotic tasks, we achieved a success rate of 90% in simulation, substantially improving over previous baselines including BC-Z (72%), RT-1 (74%), and LAVA (77%).

Then we evaluated the same model in the real world (since it was trained on simulation and real data) and demonstrated its ability to generalize to novel objects, as shown below, where none of the objects except the blue cube were present in the training dataset.

RT-2 performs well on real-robot Language Table tasks. None of the objects except the blue cube were present in the training data.

Inspired by chain-of-thought prompting methods used in LLMs, we probed our models to combine robotic control with chain-of-thought reasoning, enabling long-horizon planning and low-level skills to be learned within a single model.

In particular, we fine-tuned a variant of RT-2 for just a few hundred gradient steps to increase its ability to use language and actions jointly. Then we augmented the data to include an additional “Plan” step, first describing the purpose of the action that the robot is about to take in natural language, followed by “Action” and the action tokens. Here we show an example of such reasoning and the robot's resulting behavior:

Chain-of-thought reasoning enables learning a self-contained model that can both plan long-horizon skill sequences and predict robot actions.
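A training target with an intermediate plan can be sketched as plain string formatting, with the action tokens recovered from the generated text afterwards. This is a minimal Python sketch assuming a “Plan: ... Action: ...” layout; the exact format, the helper names, and the example plan are illustrative assumptions, not RT-2's actual data format.

```python
import re
from typing import List, Tuple


def build_cot_target(plan: str, action_tokens: List[int]) -> str:
    """Format a training target with a natural-language plan before the action tokens."""
    return f"Plan: {plan}. Action: {' '.join(str(t) for t in action_tokens)}"


# Hypothetical output layout: "Plan: <text>. Action: <integers>".
_COT_PATTERN = re.compile(r"\s*Plan:\s*(.*?)\.?\s*Action:\s*([\d\s]+?)\s*")


def parse_cot_output(text: str) -> Tuple[str, List[int]]:
    """Split generated text into the plan and the integer action tokens."""
    match = _COT_PATTERN.fullmatch(text)
    if match is None:
        raise ValueError("output does not follow the 'Plan: ... Action: ...' layout")
    return match.group(1), [int(t) for t in match.group(2).split()]


target = build_cot_target("pick up the energy drink", [1, 128, 91, 241, 5, 101, 127, 217])
print(target)  # Plan: pick up the energy drink. Action: 1 128 91 241 5 101 127 217
plan, tokens = parse_cot_output(target)
print(plan)    # pick up the energy drink
```

Keeping the plan and the action in one output string means a single model produces both, so only the text after “Action:” needs to be handed to the robot.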

With this process, RT-2 can carry out more involved commands that require reasoning about the intermediate steps needed to accomplish a user instruction. Thanks to its VLM backbone, RT-2 can also plan from both image and text commands, enabling visually grounded planning, whereas current plan-and-act approaches like SayCan cannot see the real world and rely entirely on language.

Advancing robotic control

RT-2 shows that vision-language models (VLMs) can be transformed into powerful vision-language-action (VLA) models, which can directly control a robot by combining VLM pre-training with robotic data.

With two instantiations of VLAs based on PaLM-E and PaLI-X, RT-2 results in highly improved robotic policies and, more importantly, leads to significantly better generalization performance and emergent capabilities, inherited from web-scale vision-language pre-training.

RT-2 is not only a simple and effective modification of existing VLM models, but also shows the promise of building a general-purpose physical robot that can reason, solve problems, and interpret information to perform a diverse range of tasks in the real world.

Acknowledgements

We would like to thank the co-authors of this work: Anthony Brohan, Noah Brown, Justice Carbajal, Yevgen Chebotar, Xi Chen, Krzysztof Choromanski, Tianli Ding, Danny Driess, Avinava Dubey, Chelsea Finn, Pete Florence, Chuyuan Fu, Montse Gonzalez Arenas, Keerthana Gopalakrishnan, Kehang Han, Karol Hausman, Alexander Herzog, Jasmine Hsu, Brian Ichter, Alex Irpan, Nikhil Joshi, Ryan Julian, Dmitry Kalashnikov, Yuheng Kuang, Isabel Leal, Lisa Lee, Tsang-Wei Edward Lee, Sergey Levine, Yao Lu, Henryk Michalewski, Igor Mordatch, Karl Pertsch, Kanishka Rao, Krista Reymann, Michael Ryoo, Grecia Salazar, Pannag Sanketi, Pierre Sermanet, Jaspiar Singh, Anikait Singh, Radu Soricut, Huong Tran, Vincent Vanhoucke, Quan Vuong, Ayzaan Wahid, Stefan Welker, Paul Wohlhart, Jialin Wu, Fei Xia, Ted Xiao, Peng Xu, Sichun Xu, Tianhe Yu, and Brianna Zitkovich for their contributions to the project, and Fred Alcober, Jodi Lynn Andres, Carolina Parada, Joseph Dabis, Rochelle Dela Cruz, Jessica Gomez, Gavin Gonzalez, John Guilyard, Tomas Jackson, Jie Tan, Scott Lehrer, Dee M, Utsav Malla, Sarah Nguyen, Jane Park, Emily Perez, Elio Prado, Jornell Quiambao, Clayton Tan, Jodexty Therlonge, Eleanor Tomlinson, Wenxuan Zhou, and the greater DeepMind team for their help.