Author(s): M

Originally published on Towards AI.

From Chinchilla's 20:1 rule to SmolLM3's 3,700:1 ratio: how the economics of inference rewrote the training playbook

Training a language model is expensive. Really expensive. A single training run for a 70-billion-parameter model can cost millions of dollars in compute.
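To see roughly where those millions go, here is a back-of-the-envelope sketch. It relies on the widely used approximation that training costs about 6 FLOPs per parameter per training token (C ≈ 6ND). The GPU peak throughput (set near an A100's dense BF16 peak), the utilization, and the hourly price are hypothetical placeholders, not figures from the article. Replace them with your own numbers.

```python
# Back-of-the-envelope training cost, assuming C ≈ 6 * N * D FLOPs.
# Hardware throughput, utilization, and price are hypothetical placeholders.


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute: ~6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


def training_cost_usd(
    n_params: float,
    n_tokens: float,
    peak_flops_per_gpu: float = 312e12,  # placeholder: ~A100 dense BF16 peak
    utilization: float = 0.4,            # hypothetical fraction of peak achieved
    usd_per_gpu_hour: float = 2.0,       # hypothetical rental price
) -> float:
    """Convert total training FLOPs into GPU-hours, then into dollars."""
    effective_flops_per_sec = peak_flops_per_gpu * utilization
    gpu_seconds = training_flops(n_params, n_tokens) / effective_flops_per_sec
    gpu_hours = gpu_seconds / 3600.0
    return gpu_hours * usd_per_gpu_hour


if __name__ == "__main__":
    n = 70e9      # 70B parameters
    d = 20 * n    # 20 tokens per parameter -> 1.4T tokens
    print(f"Training FLOPs: {training_flops(n, d):.2e}")
    print(f"Estimated cost: ${training_cost_usd(n, d):,.0f}")
```

Under these placeholder assumptions, a 70B model trained on 1.4T tokens needs about 5.9e23 FLOPs, or roughly $2.6 million of GPU time. The estimate scales linearly with model size, token count, and price, and inversely with utilization.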

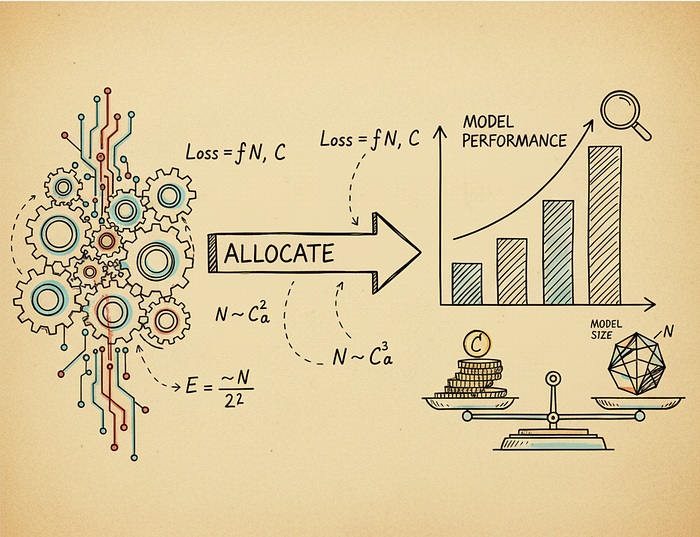

This article explores scaling laws for language model training, focusing on how to balance model size, training data, and compute budget. It covers the findings of DeepMind's Chinchilla study, which showed that for compute-optimal training, model size and the number of training tokens should be scaled in equal proportion (roughly 20 tokens per parameter). Following these empirical guidelines lets practitioners get more performance out of a fixed compute budget. The article also weighs the key trade-off between training cost and inference cost, which explains why newer models such as SmolLM3 train far beyond the 20:1 ratio.
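The Chinchilla rule of thumb can be turned into a small calculator. This sketch assumes the common approximation C ≈ 6ND for training compute and a ratio of D ≈ 20N. Substituting gives C = 120N², so N = √(C / 120). To show why inference economics push toward smaller, longer-trained models, it also adds an inference term based on the usual approximation of about 2N FLOPs per generated token. The served-token volume is a hypothetical placeholder. The 3,700:1 case uses the ratio the subtitle attributes to SmolLM3, applied to an illustrative 3B model.

```python
import math

# Rules of thumb (approximations, not exact laws):
TRAIN_FLOPS_PER_PARAM_TOKEN = 6.0   # training: C ≈ 6 * N * D
INFER_FLOPS_PER_PARAM_TOKEN = 2.0   # inference: ≈ 2 * N FLOPs per generated token
CHINCHILLA_TOKENS_PER_PARAM = 20.0  # compute-optimal ratio D / N ≈ 20


def chinchilla_optimal(compute_flops: float) -> tuple[float, float]:
    """Split a training budget C into (N params, D tokens) with D = 20 * N.

    From C = 6 * N * D and D = 20 * N it follows that C = 120 * N**2.
    """
    k = TRAIN_FLOPS_PER_PARAM_TOKEN * CHINCHILLA_TOKENS_PER_PARAM
    n_params = math.sqrt(compute_flops / k)
    return n_params, CHINCHILLA_TOKENS_PER_PARAM * n_params


def lifetime_flops(n_params: float, train_tokens: float, served_tokens: float) -> float:
    """Training compute plus inference compute over the model's deployment."""
    train = TRAIN_FLOPS_PER_PARAM_TOKEN * n_params * train_tokens
    serve = INFER_FLOPS_PER_PARAM_TOKEN * n_params * served_tokens
    return train + serve


if __name__ == "__main__":
    # Budget of ~5.88e23 FLOPs (a 70B model on 1.4T tokens under C ≈ 6ND).
    n, d = chinchilla_optimal(5.88e23)
    print(f"Chinchilla-optimal: N = {n:.3g} params, D = {d:.3g} tokens")

    served = 1e14  # hypothetical: 100T tokens generated over the model's lifetime
    big = lifetime_flops(70e9, 20 * 70e9, served)     # 70B at 20:1
    small = lifetime_flops(3e9, 3700 * 3e9, served)   # 3B at 3,700:1
    print(f"70B @ 20:1    lifetime FLOPs: {big:.2e}")
    print(f"3B  @ 3,700:1 lifetime FLOPs: {small:.2e}")
```

With 100T served tokens, inference accounts for most of the 70B model's lifetime compute (about 1.4e25 of 1.46e25 FLOPs). The 3B model, despite its much longer training run, totals about 8.0e23 FLOPs. This comparison covers compute only and does not show whether the two models reach the same quality.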

Read the entire blog for free on Medium.

Published via Towards AI

Note: The content contains the views of the authors and not Towards AI.