DeepNash learns to play Stratego from scratch by combining game theory and model-free deep RL

Game-playing artificial intelligence (AI) systems have advanced to a new frontier. Stratego, the classic board game that is more complex than chess and Go, and craftier than poker, has now been mastered. Published in Science, we present DeepNash, an AI agent that learned the game from scratch to a human expert level by playing against itself.

DeepNash uses a novel approach based on game theory and model-free deep reinforcement learning. Its play style converges to a Nash equilibrium, which means its play is very hard for an opponent to exploit. So hard, in fact, that DeepNash has reached an all-time top-three ranking among human experts on the world's biggest online Stratego platform, Gravon.

Board games have historically been a measure of progress in the field of AI, allowing us to study how humans and machines develop and execute strategies in a controlled environment. Unlike chess and Go, Stratego is a game of imperfect information: players cannot directly observe the identities of their opponent's pieces.

This complexity has meant that other AI-based Stratego systems have struggled to get beyond amateur level. It also means that a very successful AI technique called “game tree search”, previously used to master many games of perfect information, is not sufficiently scalable for Stratego. For this reason, DeepNash dispenses with game tree search altogether.

The value of mastering Stratego goes beyond gaming. In pursuit of our mission of solving intelligence to advance science and benefit humanity, we need to build advanced AI systems that can operate in complex, real-world situations with limited information about other agents and people. Our paper shows how DeepNash can be applied in situations of uncertainty and successfully balance outcomes to help solve complex problems.

Getting to know Stratego

Stratego is a turn-based, capture-the-flag game. It is a game of bluff and tactics, of information gathering and subtle maneuvering. It is also a zero-sum game, so any gain by one player represents a loss of the same magnitude for their opponent.

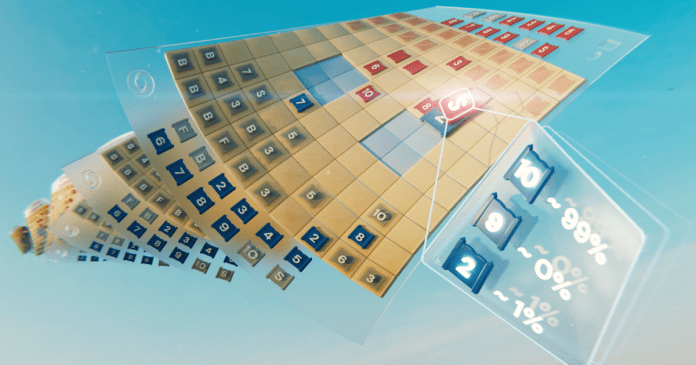

Stratego is challenging for AI, in part, because it is a game of imperfect information. Both players start by arranging their 40 playing pieces in whatever starting formation they like, hidden from one another as the game begins. Since the two players do not have access to the same knowledge, they need to balance all possible outcomes when making a decision, which makes the game a challenging benchmark for studying strategic interactions. The types of pieces and their rankings are shown below.

Left: The piece rankings. In battles, higher-ranking pieces win, except that the 10 (Marshal) loses when attacked by a Spy, and Bombs always win except when captured by a Miner.

Middle: A possible starting formation. Notice how the Flag is tucked away safely at the back, flanked by protective Bombs. The two pale blue areas are “lakes” and are never entered.

Right: A game in play, showing Blue's Spy capturing Red's 10.
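The battle rules in the caption above can be written down as a small function. This is a minimal sketch, not code from DeepNash: the numeric encoding (Spy = 1, Miner = 3), the `Outcome` enum and the `resolve_attack` name are illustrative assumptions, and the rule that equal ranks remove each other comes from the standard Stratego rules rather than from the caption.

```python
from enum import Enum


class Outcome(Enum):
    ATTACKER_WINS = "attacker wins"
    DEFENDER_WINS = "defender wins"
    BOTH_REMOVED = "both removed"


# Assumed encoding: movable pieces are integers 1..10, immovable pieces are strings.
SPY, SCOUT, MINER, MARSHAL = 1, 2, 3, 10
BOMB, FLAG = "B", "F"


def resolve_attack(attacker: int, defender) -> Outcome:
    """Resolve one battle; the attacker is always a movable (numbered) piece."""
    if defender == FLAG:
        # Capturing the Flag always succeeds (and ends the game).
        return Outcome.ATTACKER_WINS
    if defender == BOMB:
        # Bombs always win, except when captured by a Miner.
        return Outcome.ATTACKER_WINS if attacker == MINER else Outcome.DEFENDER_WINS
    if attacker == SPY and defender == MARSHAL:
        # The 10 (Marshal) loses when attacked by a Spy.
        return Outcome.ATTACKER_WINS
    if attacker == defender:
        # Standard rule (not stated in the caption): equal ranks remove each other.
        return Outcome.BOTH_REMOVED
    # Otherwise the higher-ranking piece wins.
    return Outcome.ATTACKER_WINS if attacker > defender else Outcome.DEFENDER_WINS


if __name__ == "__main__":
    print(resolve_attack(SPY, MARSHAL).value)
    print(resolve_attack(MARSHAL, BOMB).value)
```

Note that the Spy's advantage is one-directional in this sketch: a Marshal that attacks a Spy wins through the ordinary rank comparison.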

Information is hard won in Stratego. The identity of an opponent's piece is typically revealed only when it meets the other player on the battlefield. This is in stark contrast to games of perfect information such as chess or Go, in which the location and identity of every piece is known to both players.

The machine learning approaches that work so well on perfect information games, such as DeepMind's AlphaZero, are not easily transferred to Stratego. The need to make decisions with imperfect information, and the potential to bluff, makes Stratego more akin to Texas hold'em poker and requires a human-like capacity once noted by the American writer Jack London: “Life is not always a matter of holding good cards, but sometimes, playing a poor hand well.”

The AI techniques that work so well in games like Texas hold'em do not transfer to Stratego, however, because of the sheer length of the game: often hundreds of moves pass before a player wins. Reasoning in Stratego must be done over a large number of sequential actions with no obvious insight into how each action contributes to the final outcome.

Finally, the number of possible game states (expressed as “game tree complexity”) is off the chart compared with chess, Go and poker, making the game incredibly difficult to solve. This is what excited us about Stratego, and why it has represented a decades-long challenge to the AI community.

The scale of the differences between chess, poker, Go and Stratego.

Seeking an equilibrium

DeepNash employs a novel approach based on a combination of game theory and model-free deep reinforcement learning. “Model-free” means DeepNash does not attempt to explicitly model its opponent's private game state during the game. In the early stages of the game in particular, when DeepNash knows little about its opponent's pieces, such modeling would be ineffective, if not impossible.

And because the game tree complexity of Stratego is so vast, DeepNash cannot employ a stalwart approach of AI-based gaming: Monte Carlo tree search. Tree search has been a key ingredient of many landmark achievements in AI for less complex board games and for poker.

Instead, DeepNash is powered by a new game-theoretic algorithmic idea that we call Regularized Nash Dynamics (R-NaD). Working at an unparalleled scale, R-NaD steers DeepNash's learning behavior towards what is known as a Nash equilibrium (dive into the technical details in our paper).

Game-playing behavior that results in a Nash equilibrium is unexploitable over time. If a person or machine played perfectly unexploitable Stratego, the worst win rate they could achieve would be 50%, and only if facing a similarly perfect opponent.
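To make “unexploitable” concrete, the toy sketch below uses rock-paper-scissors rather than Stratego. It measures exploitability (how much best-responding opponents could gain) and runs a simple regularized learning loop that pulls each player's policy toward a periodically refreshed reference policy. This is not the DeepNash training code: the game, the multiplicative-weights update, the parameters `eta`, `lr`, `outer` and `inner`, and all function names are illustrative assumptions.

```python
import math

# Row player's payoff in rock-paper-scissors; the column player gets the negative.
PAYOFF = [
    [0, -1, 1],   # rock     vs rock, paper, scissors
    [1, 0, -1],   # paper
    [-1, 1, 0],   # scissors
]


def row_values(payoff, col_policy):
    """Expected payoff of each row action against the column player's mix."""
    return [sum(a * y for a, y in zip(row, col_policy)) for row in payoff]


def col_values(payoff, row_policy):
    """Expected payoff of each column action (negated: the game is zero-sum)."""
    return [
        -sum(row_policy[i] * payoff[i][j] for i in range(len(payoff)))
        for j in range(len(payoff[0]))
    ]


def exploitability(payoff, row_policy, col_policy):
    """Summed best-response gains of both players; zero at a Nash equilibrium."""
    return max(row_values(payoff, col_policy)) + max(col_values(payoff, row_policy))


def regularized_step(policy, reference, values, eta, lr):
    """Multiplicative-weights step on values penalized for drifting from `reference`."""
    logits = [
        math.log(p) + lr * (v - eta * math.log(p / r))
        for p, r, v in zip(policy, reference, values)
    ]
    top = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(x - top) for x in logits]
    total = sum(weights)
    return [w / total for w in weights]


def train(payoff, row_start, col_start, eta=0.2, lr=0.05, outer=10, inner=1000):
    """Alternate between solving a regularized game and refreshing the reference."""
    row, col = list(row_start), list(col_start)
    for _ in range(outer):
        row_ref, col_ref = row, col  # freeze the current policies as the reference
        for _ in range(inner):
            new_row = regularized_step(row, row_ref, row_values(payoff, col), eta, lr)
            new_col = regularized_step(col, col_ref, col_values(payoff, row), eta, lr)
            row, col = new_row, new_col  # simultaneous update of both players
    return row, col


if __name__ == "__main__":
    start = [0.8, 0.1, 0.1]  # a predictable, rock-heavy starting policy
    row, col = train(PAYOFF, start, start)
    print("exploitability before:", exploitability(PAYOFF, start, start))
    print("exploitability after: ", exploitability(PAYOFF, row, col))
    print("learned row policy:   ", [round(p, 3) for p in row])
```

In this toy game the equilibrium is the uniform mix, against which no opponent can do better than break even on average. That is the matrix-game analogue of the 50% worst-case win rate described above.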

In matches against the best Stratego bots, including several winners of the Computer Stratego World Championship, DeepNash's win rate topped 97% and was frequently 100%. Against the top expert human players on the Gravon games platform, DeepNash achieved a win rate of 84%, earning it an all-time top-three ranking.

Expect the unexpected

To achieve these results, DeepNash demonstrated some remarkable behaviors both during its initial piece-deployment phase and in the gameplay phase. To become hard to exploit, DeepNash developed an unpredictable strategy. This means creating initial deployments varied enough to prevent its opponent from spotting patterns over a series of games. And during the game phase, DeepNash randomizes between seemingly equivalent actions to prevent exploitable tendencies.
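The idea of randomizing between seemingly equivalent actions can be illustrated with a few lines of code. This is a hand-written stand-in, not how DeepNash selects moves (DeepNash samples from a learned policy): the value estimates, the `tolerance` threshold and the `choose_action` name are hypothetical.

```python
import random


def choose_action(values, tolerance=0.01, rng=random):
    """Pick uniformly at random among actions whose value is within `tolerance` of the best."""
    best = max(values)
    candidates = [i for i, v in enumerate(values) if best - v <= tolerance]
    return rng.choice(candidates)


if __name__ == "__main__":
    rng = random.Random(0)                # seeded only to make the demo repeatable
    estimated_values = [0.70, 0.70, 0.31]  # hypothetical win-chance estimates per move
    picks = [choose_action(estimated_values, rng=rng) for _ in range(1000)]
    for action in range(len(estimated_values)):
        print(f"action {action}: chosen {picks.count(action)} times")
```

A deterministic tie-break (always taking the first best action) would play move 0 every time and hand the opponent a pattern to learn. Splitting the choice between moves 0 and 1 removes that pattern without giving up estimated value.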

Stratego players strive to be unpredictable, so there is value in keeping information hidden. DeepNash demonstrates how it values information in quite striking ways. In the example below, against a human player, DeepNash (blue) sacrificed, among other pieces, a 7 (Major) and an 8 (Colonel) early in the game and as a result was able to locate the opponent's 10 (Marshal), 9 (General), an 8 and two 7s.

In this early game situation, DeepNash (blue) has already located many of its opponent's most powerful pieces, while keeping its own key pieces secret.

These efforts left DeepNash at a significant material disadvantage: it lost a 7 and an 8, while its human opponent preserved all their pieces ranked 7 and above. Nevertheless, having solid intel on its opponent's top brass, DeepNash evaluated its winning chances at 70%, and it won.

The art of the bluff

As in poker, a good Stratego player must sometimes represent strength, even when weak. DeepNash learned a variety of such bluffing tactics. In the example below, DeepNash uses a 2 (a weak Scout, unknown to its opponent) as if it were a high-ranking piece, pursuing its opponent's known 8. The human opponent decides the pursuer is most likely a 10, and so attempts to lure it into an ambush by their Spy. This tactic by DeepNash, risking only a minor piece, succeeds in flushing out and eliminating its opponent's Spy, a critical piece.

The human player (red) is convinced that the unknown piece chasing their 8 must be DeepNash's 10 (note: DeepNash had already lost its only 9).

See more by watching these four videos of full-length games played by DeepNash against (anonymized) human experts: Game 1, Game 2, Game 3, Game 4.

“The level of play of DeepNash surprised me. I had never heard of an artificial Stratego player that came close to the level needed to win a match against an experienced human player. But after playing against DeepNash myself, I was not surprised by the top-three ranking it later achieved on the Gravon platform. I expect it would do very well if allowed to participate in the human World Championships.”

Vincent de Boer, paper co-author and former Stratego World Champion

Future directions

While we developed DeepNash for the highly defined world of Stratego, our novel R-NaD method can be directly applied to other two-player zero-sum games of both perfect and imperfect information. R-NaD has the potential to generalize far beyond two-player gaming settings to address large-scale real-world problems, which are often characterized by imperfect information and astronomical state spaces.

We also hope R-NaD can help unlock new applications of AI in domains that feature a large number of human or AI participants with different goals, who might not have information about the intentions of others or about what is occurring in their environment. One example is the large-scale optimization of traffic management to reduce driver journey times and the associated vehicle emissions.

In creating a generalizable AI system that is robust in the face of uncertainty, we hope to bring the problem-solving capabilities of AI further into our inherently unpredictable world.

Learn more about DeepNash by reading our paper in Science.

For researchers interested in giving R-NaD a try or working with our newly proposed method, we have open-sourced our code.

Paper authors

Julien Perolat, Bart De Vylder, Daniel Hennes, Eugene Tarassov, Florian Strub, Vincent de Boer, Paul Muller, Jerome T Connor, Thomas Anthony, Stephen McAleer, Sarah H Cen, Zhe Wang, Mina Khan, Sherjil Ozair, Demis Hassabis, Karl Tuyls.