Author(s): Towards AI Editorial Team

Originally published on Towards AI.

What happened this week in AI by Louie

DeepSeek has been relatively quiet this year after a series of huge innovations in 2024 culminated in it breaking into mainstream awareness in early 2025. While DeepSeek’s next-generation LLM has yet to be released, the lab is clearly working on foundational research that may well shape it. This week, they released DeepSeek-OCR, a model that is both a powerful tool for document processing and a fascinating proof-of-concept for a new way of handling context in LLMs. The core idea is simple: instead of feeding an LLM text, you feed it an image of that text.

This approach, which they call “contexts optical compression,” tests how efficiently textual information can be represented with vision tokens instead of text tokens. The results are compelling. DeepSeek-OCR can achieve a compression ratio of nearly 10-to-1 (ten text tokens compressed into a single vision token) while maintaining an OCR decoding precision of around 97%. Even at an aggressive 20x compression, accuracy stays at about 60%, which is still usable in some contexts. The model supports tasks such as converting documents to Markdown, parsing figures, and object localization, and it is open-sourced under the MIT license, with integrations for vLLM and Hugging Face Transformers.
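The ratio itself is simple arithmetic: text tokens divided by the vision tokens that replace them. Here is a minimal Python sketch. The 1,000-token page is an illustrative number, not a figure from the paper.

```python
import math


def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """How many text tokens each vision token stands in for."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


def vision_budget(text_tokens: int, max_ratio: float) -> int:
    """Fewest vision tokens that keep the compression ratio at or below max_ratio."""
    if max_ratio <= 0:
        raise ValueError("max_ratio must be positive")
    return math.ceil(text_tokens / max_ratio)


# A hypothetical page of 1,000 text tokens:
print(compression_ratio(1000, 100))  # 10.0, near the ~97% precision regime reported above
print(vision_budget(1000, 20))       # 50 vision tokens at the aggressive 20x setting
```

Staying at or below roughly 10x is the safe zone the authors report. Pushing toward 20x halves the budget again but costs a lot of accuracy.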

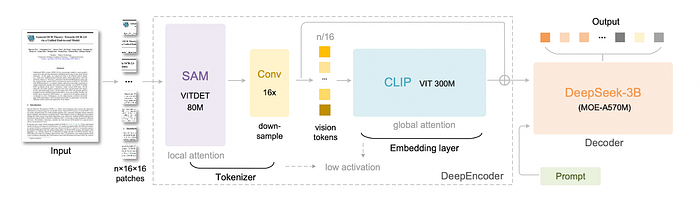

This is all accomplished with a custom DeepEncoder and a 3B Mixture-of-Experts decoder. DeepEncoder is the core of DeepSeek-OCR and has three components: a SAM-based module for perception, dominated by window attention; a CLIP-based module for knowledge, with dense global attention; and a 16× token compressor that bridges the two. Its optical compression technique is the standout innovation. It renders text in a 2D visual format and exploits redundancies within image patches (e.g., similar fonts or layouts) to pack more semantic information into each token than a linear text sequence can.
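The reason for this ordering is easiest to see by counting tokens. The sketch below assumes a 1024×1024 input split into 16×16-pixel patches, an illustrative assumption. It applies the 16× compressor between the windowed SAM stage and the global CLIP stage. It counts tokens and query-key pairs only and does not model the real networks.

```python
PATCH_SIZE = 16   # assumed patch size in pixels
COMPRESSION = 16  # the 16x token compressor between the two stages


def deepencoder_tokens(height: int, width: int) -> tuple[int, int]:
    """Return (tokens seen by the windowed stage, tokens seen by the global stage)."""
    if height % PATCH_SIZE or width % PATCH_SIZE:
        raise ValueError("image sides must be multiples of PATCH_SIZE")
    patches = (height // PATCH_SIZE) * (width // PATCH_SIZE)
    return patches, patches // COMPRESSION


def global_attention_pairs(n_tokens: int) -> int:
    """Dense global attention scores every token against every token."""
    return n_tokens * n_tokens


sam_tokens, clip_tokens = deepencoder_tokens(1024, 1024)
print(sam_tokens, clip_tokens)  # 4096 256
# How much cheaper dense attention is when it runs after compression rather than before:
print(global_attention_pairs(sam_tokens) // global_attention_pairs(clip_tokens))  # 256
```

Window attention keeps the cost of the high-resolution stage roughly linear in the number of patches. That way, the quadratic global attention only ever sees the compressed sequence.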

On benchmarks, the model outperforms some established efficiency-optimized OCR systems, such as GOT-OCR2.0, while using less than half as many tokens. In production, its efficiency is formidable: it can process over 200,000 pages per day on a single A100 GPU. This makes it a powerful new option for high-volume OCR, though its real significance lies in the questions it raises about LLM architecture and long-context system design.

One of the biggest challenges for LLMs remains the quadratic scaling of attention with sequence length, which makes processing very long contexts computationally expensive. DeepSeek’s work suggests that by converting text into a more compact visual format, we could dramatically shrink the input sequence length and make long-context processing far more efficient.
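Here is a back-of-the-envelope sketch of both claims. Full self-attention scores every pair of tokens, so shrinking a sequence by 10x shrinks that term by roughly 100x. Likewise, 200,000 pages per day works out to a little over two pages per second. The sketch counts attention score pairs only and ignores MLP layers and the cost of running the vision encoder, so treat it as an optimistic estimate rather than a benchmark.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def attention_pairs(seq_len: int) -> int:
    """Query-key pairs scored by full self-attention; grows as seq_len ** 2."""
    return seq_len * seq_len


def attention_savings(text_tokens: int, ratio: float) -> float:
    """Factor by which the attention term shrinks if text is compressed by `ratio`."""
    compressed = max(1, round(text_tokens / ratio))
    return attention_pairs(text_tokens) / attention_pairs(compressed)


def pages_per_second(pages_per_day: int) -> float:
    return pages_per_day / SECONDS_PER_DAY


print(attention_savings(100_000, 10))       # 100.0
print(round(pages_per_second(200_000), 2))  # 2.31
```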

The paper even speculates on how this system could be used to create more sophisticated memory mechanisms. By rendering older parts of a conversation or document as progressively smaller, blurrier images, you could create a form of memory decay that mimics human forgetting, where recent information is held in high fidelity and older information fades while consuming fewer resources. This is a novel blueprint for how LLMs might one day manage theoretically unlimited context without needing infinite compute.
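The decay idea can be expressed as a token budget. In this hypothetical sketch, each older conversation turn is rendered at a lower resolution tier. The tiers are an illustrative assumption, not DeepSeek’s configuration. Token cost assumes 16-pixel patches and a 16× compressor, giving (side / 16)² / 16 tokens per square image. Actual rendering is left out.

```python
# Hypothetical resolution tiers (square page side in pixels), newest first.
TIERS = [1280, 1024, 640, 512]


def tokens_for_side(side: int, patch: int = 16, compression: int = 16) -> int:
    """Vision tokens for a square image, assuming patching then 16x compression."""
    return (side // patch) ** 2 // compression


def memory_plan(num_turns: int) -> list[tuple[int, int, int]]:
    """(age, side, tokens) per past turn; age 0 is the most recent turn."""
    plan = []
    for age in range(num_turns):
        side = TIERS[min(age, len(TIERS) - 1)]  # older turns fall to blurrier tiers
        plan.append((age, side, tokens_for_side(side)))
    return plan


plan = memory_plan(6)
decayed = sum(tokens for _, _, tokens in plan)
uniform = 6 * tokens_for_side(TIERS[0])
print(decayed, uniform)  # 948 2400
```

Here the lowest tier acts as a floor. A more aggressive scheme could keep shrinking old turns or drop them entirely, trading recall for budget.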

Why should you care?

One important takeaway here is that DeepSeek-OCR might be a Trojan horse for a new kind of LLM architecture. As Andrej Karpathy noted, this research challenges the primacy of text tokens. He suggested that a future in which all LLM inputs are treated as images might be more efficient, leveraging the higher information density and bidirectional attention that vision processing enables.

This could help address some persistent issues with text tokenizers, including Unicode quirks and security vulnerabilities. This work is also a direct assault on the long-context bottleneck that currently limits LLMs. The ability to compress context by 10x or more without a major loss in fidelity could fundamentally change the economics of running these models. That matters more and more in the era of LLM agents, where context can grow quickly as tool outputs and interaction history accumulate. For anyone building with AI, this opens up new possibilities. It is not a perfect solution, however: there is a clear trade-off between the level of compression and the final accuracy. For high-stakes work where every character must be correct, more traditional or expensive models will likely remain the better choice.

Of course, this is still an early-stage exploration. However, DeepSeek has a track record of turning its research papers into powerful, production-ready models. This is a clear signal of their future direction and a fascinating glimpse into a future where a picture might just be worth 1,000 words.

— Louie Peters — Towards AI Co-founder and CEO

Hottest News

1. DeepSeek AI Has Unveiled DeepSeek-OCR

DeepSeek released a 3B-parameter, end-to-end OCR and document-parsing VLM that compresses long text into a small set of vision tokens via a DeepEncoder, then decodes with the DeepSeek3B-MoE-A570M language model. The system reports ~97% OCR precision at <10× compression and ~60% accuracy even at 20×, signaling strong efficiency for high-volume document workflows.

2. Google Expands Earth AI Capabilities

Google Maps is now integrated into the Gemini API, enabling developers to embed real-time geospatial data into applications. This feature enhances AI products with location-aware capabilities, providing detailed itinerary planning, personalized recommendations, and local answers. It combines structured data from Maps with timely context from Search, improving response quality significantly when both tools are used together.

3. Anthropic Launches Claude for Finance

Anthropic introduced Claude for Excel and new real-time data integrations to streamline financial analysis and modeling, expanding Claude’s role for teams that use Microsoft tools. In a beta research preview, an Excel sidebar lets users chat with Claude to read, analyze, and modify workbooks with transparent change tracking, debug formulas, generate financial models, and build spreadsheets from scratch. The preview is rolling out first to 1,000 testers across the Max, Enterprise, and Teams tiers before wider availability.

4. Microsoft Open-Sourced SentinelStep

Microsoft researchers have unveiled SentinelStep, a mechanism that enables AI agents to listen, wait, and act over extended periods. Current AI agents struggle with mundane time-dependent tasks, such as waiting for emails or monitoring prices. SentinelStep addresses this by introducing dynamic polling and context handling, enabling agents to run long tasks efficiently while limiting memory usage and wasted compute. Integrated into Microsoft’s Magentic-UI research prototype, SentinelStep wraps agents in a workflow that decides when to execute, how often to poll, and when to exit. Early testing with SentinelBench, a newly developed test suite, shows a significant reliability gain on longer tasks: 38.9% success on two-hour tasks versus 5.6% without SentinelStep.
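The core loop behind “wait, then act” can be sketched in a few lines. This is not SentinelStep’s code or API. It is a hypothetical `wait_for` helper that polls a condition and backs off geometrically while nothing changes. It also exits at a deadline. The geometric backoff is an assumption; SentinelStep is described only as choosing polling intervals dynamically. The clock and sleep functions are injectable so the loop can be tested without real waiting.

```python
import time
from typing import Callable, Optional


def wait_for(
    check: Callable[[], Optional[str]],
    timeout_s: float,
    initial_interval_s: float = 5.0,
    max_interval_s: float = 300.0,
    backoff: float = 2.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Poll `check` until it returns a result, or return None after `timeout_s`."""
    deadline = clock() + timeout_s
    interval = initial_interval_s
    while True:
        result = check()
        if result is not None:
            return result  # condition met: hand control back to the agent to act
        remaining = deadline - clock()
        if remaining <= 0:
            return None  # deadline reached: exit instead of polling forever
        sleep(min(interval, remaining))
        # Lengthen the gap while nothing changes, so long waits cost few checks.
        interval = min(interval * backoff, max_interval_s)
```

In an agent, `check` would be a cheap probe, such as an inbox query or a price lookup. That way, the expensive model call happens only once the condition is met.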

5. OpenAI Introduces ChatGPT Atlas

OpenAI has introduced ChatGPT Atlas, a new web browser that integrates ChatGPT directly into the browsing experience. Atlas is now available on macOS for Free, Plus, Pro, and Go users, with beta access for Business, Enterprise, and Education accounts. Versions for Windows, iOS, and Android are expected soon. The browser builds on OpenAI’s earlier addition of web search to ChatGPT. Users can download Atlas from chatgpt.com/atlas and import bookmarks, passwords, and history from their existing browsers. According to OpenAI, Atlas lets ChatGPT assist users directly on web pages, eliminating the need to switch tabs or copy and paste. The browser also supports ChatGPT’s memory feature.

6. Mistral Launched Its AI Studio Platform

Mistral AI Studio is a production-ready platform that helps enterprises move beyond prototypes and run AI systems reliably at scale. It offers tools for output tracking, feedback monitoring, fine-tuning, and governed deployments across secure environments. The platform centers on three pillars: Observability, for tracing and evaluating model performance; an Agent Runtime built on Temporal, for durable, transparent workflows; and an AI Registry, which serves as a system of record for models, datasets, and more. Together, these form a continuous loop of creation, monitoring, and governance, with support for hybrid and self-hosted setups so organizations keep full ownership of their data.

Thought you might find this interesting: a virtual Cassyni seminar hosted by Royal Society Publishing.

The seminar explores using LLMs not just to summarize science but to generate testable hypotheses. It frames some “hallucinations” as potentially useful novel hypotheses, provided they’re verified experimentally, and ties the talk to a new Royal Society Interface article by the authors.

Speakers: Abbi Abdel Rehim (University of Cambridge), chaired by David Brown.

When: Wednesday, Nov 26, 2025, 4:30–5:30 PM IST (11:00 AM–12:00 PM UTC).

Five 5-minute reads/videos to keep you learning

1. Preparing for AI’s Economic Impact: Exploring Policy Responses

Anthropic outlines scenario-based policy ideas to manage AI’s uncertain labor and growth effects, drawing on its Economic Advisory Council and recent symposia. It frames these as research prompts, not endorsements, and notes a $10M expansion of its Economic Futures Program to fund further empirical work and public debate.

2. Building Smart AI Agents for Legal Tech: A Practitioner’s Playbook

This article serves as a guide for creating AI agents in the legal sector. It details two primary use cases — contract review and legal research — providing code examples for implementation. The author also presents ten key lessons, emphasizing the importance of choosing appropriate frameworks, managing costs, and establishing ethical guardrails. A central focus is on maintaining human-in-the-loop validation for accuracy and grounding all AI-generated claims in source documents.

3. Foundation of FastAPI

This article offers a foundational overview of FastAPI. It explains how the Python framework achieves its “Fast to Run” and “Fast to Code” philosophy by leveraging Starlette for asynchronous performance and Pydantic for data validation. It highlights how FastAPI uses type hints to drive automatic data serialization and interactive documentation, reducing boilerplate code. The article also includes a practical guide to implementing core HTTP operations (GET, POST, PUT, and DELETE), complete with clear code examples.

4. Build an AI-Ready DevOps Stack on Ubuntu: MCP + n8n (Step-by-Step, With Code)

This guide integrates the Model Context Protocol (MCP) with the n8n workflow engine to demonstrate how to build an AI-powered DevOps automation stack on Ubuntu. It shows how to create a Python-based MCP server that triggers n8n workflows via webhooks, which, in turn, execute tasks using Bash scripts. The author also provides full code examples and covers practical considerations such as security, idempotency, and observability for a production-ready setup.
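The MCP-to-n8n hand-off boils down to an authenticated HTTP POST. Below is a minimal standard-library sketch of the request an MCP tool might send. The webhook URL, bearer token, and `Idempotency-Key` header are hypothetical. n8n listens on port 5678 by default but does not deduplicate on this header by itself, so the workflow would need to check it. Hashing the canonical JSON payload gives identical requests identical keys. This is one simple way to get the idempotency the guide discusses.

```python
import hashlib
import json
import urllib.request

# Hypothetical n8n production webhook URL.
N8N_WEBHOOK_URL = "http://localhost:5678/webhook/devops-task"


def build_trigger_request(action: str, params: dict, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST that triggers an n8n workflow."""
    # sort_keys makes the body canonical, so equal payloads hash identically.
    body = json.dumps({"action": action, "params": params}, sort_keys=True).encode("utf-8")
    idempotency_key = hashlib.sha256(body).hexdigest()
    return urllib.request.Request(
        N8N_WEBHOOK_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # keep the secret out of the hashed payload
            "Idempotency-Key": idempotency_key,
        },
    )


req = build_trigger_request("restart", {"service": "nginx", "host": "web-1"}, token="example-token")
# urllib.request.urlopen(req, timeout=10) would actually send it.
```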

5. CUGA: IBM Research’s Open-Source Generalist Agent Framework — A Deep Technical Dive

IBM Research introduced CUGA (ConfigUrable Generalist Agent), an open-source framework designed for complex enterprise automation. Its modular architecture supports hybrid API and web execution, multiple reasoning modes, and integration with various LLM providers. This article walks you through its architectural innovations, benchmark performance, setup, and evaluation, along with a real-world usage example.

Repositories & Tools

- Kvcached is a KV cache library for LLM serving/training on shared GPUs.

- WALT enables LLM agents to automatically discover and learn reusable tools from any website.

- Skill Seekers is an automated tool that transforms documentation websites, GitHub repositories, and PDF files into production-ready Claude AI skills.

- Agent Lightning turns any agent into an optimizable tool and works with any agent framework, or even without one.

Top Papers of The Week

1. Efficient Long-context Language Model Training by Core Attention Disaggregation

This paper presents core attention disaggregation (CAD), a technique that improves long-context LLM training by decoupling the core attention computation, softmax(QK^T)V, from the rest of the model and executing it on a separate pool of devices. Implemented in DistCA, this approach optimizes the balance between compute and memory across 512 H200 GPUs, achieving up to 1.35x improvements in training throughput and eliminating stragglers in data and pipeline parallel groups.
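For reference, “core attention” is the part of an attention layer that has no trainable weights. Given Q, K, and V, it computes softmax(QK^T / √d)V. The 1/√d scaling is the standard Transformer convention, which the shorthand above omits. A small pure-Python version shows why CAD can ship this step to other devices: it is stateless, and its cost grows with the square of the sequence length. Real systems use fused GPU kernels rather than anything like this.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def core_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d)) V over lists of row vectors.

    No parameters: the Q/K/V projections live in the rest of the layer, which is
    what lets this step run on a separate pool of devices.
    """
    d = len(Q[0])
    out = []
    for q in Q:  # n queries ...
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K]  # ... times n keys
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out
```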

2. Every Attention Matters: An Efficient Hybrid Architecture for Long-Context Reasoning

This paper presents the Ring-linear model series, featuring Ring-mini-linear-2.0 and Ring-flash-linear-2.0. The models integrate linear and softmax attention to enhance long-context inference. This hybrid architecture cuts inference costs to 1/10 those of a 32B-parameter model and reduces the original Ring series cost by over 50%. High-performance FP8 operators improve training efficiency by 50% while maintaining top performance on complex reasoning tasks.
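To see why mixing in linear attention cuts long-context cost, here is a generic kernelized linear attention in pure Python. It uses the elu(x)+1 feature map from early linear-attention work as an illustrative stand-in. It is not the exact formulation used in the Ring-linear models. The key property is that all keys and values are folded into a fixed-size state (S and z). As a result, memory does not grow with sequence length the way a softmax layer’s KV cache does. The quadratic version is included only to check that both give the same answer.

```python
import math


def phi(x: list[float]) -> list[float]:
    """elu(x) + 1: a positive feature map, so attention weights stay positive."""
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def linear_attention(Q, K, V):
    """Linear in sequence length: fold every key/value into a fixed-size state first."""
    d, dv = len(K[0]), len(V[0])
    S = [[0.0] * dv for _ in range(d)]  # sum_j phi(k_j) v_j^T, shape d x dv
    z = [0.0] * d                       # sum_j phi(k_j), the normalizer
    for k, v in zip(K, V):
        fk = phi(k)
        for a in range(d):
            z[a] += fk[a]
            for c in range(dv):
                S[a][c] += fk[a] * v[c]
    out = []
    for q in Q:
        fq = phi(q)
        denom = sum(fq[a] * z[a] for a in range(d))
        out.append([sum(fq[a] * S[a][c] for a in range(d)) / denom for c in range(dv)])
    return out


def kernel_attention_quadratic(Q, K, V):
    """Same result the O(n^2) way: weight_ij = phi(q_i) . phi(k_j)."""
    out = []
    for q in Q:
        fq = phi(q)
        w = [sum(a * b for a, b in zip(fq, phi(k))) for k in K]
        total = sum(w)
        out.append([sum(wj * v[c] for wj, v in zip(w, V)) / total for c in range(len(V[0]))])
    return out
```

This sketch is non-causal. A causal decoder would update S and z token by token, which is what keeps per-token inference cost constant. In a hybrid model, the softmax layers that remain handle precise token-to-token lookups. How many to keep is a design choice of each model.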

3. DeepAgent: A General Reasoning Agent with Scalable Toolsets

This paper introduces DeepAgent, an end-to-end deep reasoning agent that performs autonomous thinking, tool discovery, and action execution within a single, coherent reasoning process. To address the challenges of long-horizon interactions, particularly the context length explosion from multiple tool calls and the accumulation of interaction history, it introduces an autonomous memory folding mechanism that compresses past interactions into structured episodic, working, and tool memories, reducing error accumulation while preserving critical information.
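Here is a hypothetical sketch of the folding step. Older turns are moved out of the raw history into three structured stores, and only the most recent turns stay verbatim. The class and field names are illustrative inventions. Plain truncation stands in for the LLM-generated summaries a real agent like DeepAgent would use.

```python
from dataclasses import dataclass, field


@dataclass
class FoldedMemory:
    episodic: list[str] = field(default_factory=list)    # what has happened so far
    working: list[str] = field(default_factory=list)     # open sub-goals still in play
    tools: dict[str, str] = field(default_factory=dict)  # tool name -> latest result note


def summarize(text: str, limit: int = 60) -> str:
    """Stand-in for an LLM summarization call: plain truncation."""
    return text if len(text) <= limit else text[: limit - 3] + "..."


def fold(history: list[dict], keep_last: int, memory: FoldedMemory) -> list[dict]:
    """Fold all but the last `keep_last` turns into `memory`; return the turns kept verbatim."""
    if keep_last < 0:
        raise ValueError("keep_last must be non-negative")
    cut = max(0, len(history) - keep_last)
    for turn in history[:cut]:
        if turn["role"] == "tool":
            memory.tools[turn["name"]] = summarize(turn["content"])
        elif turn.get("pending"):
            memory.working.append(summarize(turn["content"]))
        else:
            memory.episodic.append(summarize(turn["content"]))
    return history[cut:]
```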

4. UltraCUA: A Foundation Model for Computer Use Agents with Hybrid Action

This paper introduces UltraCUA, a foundation model built around a hybrid action space that lets an agent interleave low-level GUI actions with high-level programmatic tool calls. At each step, the model chooses the cheaper, more reliable option. The approach improves success rates and reduces step counts on OSWorld, and it transfers to WindowsAgentArena without Windows-specific training.
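The “cheaper, more reliable option” rule can be made concrete with an expected-cost comparison. UltraCUA learns this choice during training. The explicit formula below is only a hypothetical stand-in, and the actions, including the `export_csv` tool, are made up. If an action takes `steps` steps and succeeds with probability p, retrying until success takes 1/p attempts on average. Its expected cost is therefore steps / p.

```python
from dataclasses import dataclass


@dataclass
class Action:
    kind: str             # "gui" or "tool"
    description: str
    steps: int            # primitive steps one attempt takes
    success_prob: float   # estimated chance one attempt succeeds


def expected_steps(action: Action) -> float:
    """Expected steps if failed attempts are retried independently (geometric retries)."""
    if action.success_prob <= 0:
        return float("inf")
    return action.steps / action.success_prob


def choose(actions: list[Action]) -> Action:
    return min(actions, key=expected_steps)


gui = Action("gui", "click File > Export > CSV", steps=5, success_prob=0.8)
tool = Action("tool", "call export_csv(path)", steps=1, success_prob=0.9)
print(choose([gui, tool]).kind)  # tool
```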

5. FineVision: Open Data Is All You Need

This paper introduces FineVision, a unified, human-in-the-loop corpus of 24 million image-text samples from over 200 sources to standardize and decontaminate vision-language training data. It further applies rigorous de-duplication within and across sources and decontamination against 66 public benchmarks. Models trained on FineVision consistently outperform those trained on existing open mixtures across a broad evaluation suite.
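Here is a simplified sketch of the two cleaning passes. It uses exact SHA-256 hashes of image bytes plus case- and whitespace-normalized text. This is a swapped-in simplification, not the paper’s method. Exact hashing misses near-duplicates such as re-encoded or resized images, which similarity-based methods are meant to catch. Source names and samples are made up.

```python
import hashlib
import re


def image_key(image_bytes: bytes) -> str:
    return hashlib.sha256(image_bytes).hexdigest()


def normalize(text: str) -> str:
    return re.sub(r"\s+", " ", text.lower()).strip()


def clean(sources: dict[str, list[tuple[bytes, str]]], benchmark_images: list[bytes]):
    """Drop benchmark-contaminated samples, then exact duplicates within and across sources."""
    banned = {image_key(img) for img in benchmark_images}
    seen: set[tuple[str, str]] = set()
    kept = []
    stats = {"contaminated": 0, "duplicates": 0}
    for source, samples in sources.items():
        for img, text in samples:
            key = image_key(img)
            if key in banned:  # image also appears in an evaluation benchmark
                stats["contaminated"] += 1
                continue
            pair = (key, normalize(text))
            if pair in seen:  # same image and same normalized text seen before
                stats["duplicates"] += 1
                continue
            seen.add(pair)
            kept.append((source, img, text))
    return kept, stats
```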

Quick Links

1. Google’s Willow quantum chip has demonstrated the first verifiable quantum advantage by running the Quantum Echoes algorithm 13,000 times faster than the best classical algorithm on one of the world’s fastest supercomputers. In a separate proof-of-principle experiment, the technique was applied to studying molecular structure alongside nuclear magnetic resonance (NMR), pointing toward applications in drug discovery and materials science. The breakthrough emphasizes outcomes that can be repeated across quantum systems and validated experimentally.

2. OpenAI expands Shared Projects. It is now available to Free, Plus, and Pro users for collaborative chats, files, and instructions in one space. It supports up to 100 collaborators per project and integrates with Canvas for visual editing. Aimed at teams, it reduces context-switching while maintaining privacy controls.

3. Reddit filed a lawsuit against Perplexity and three data-scraping companies, alleging that they unlawfully scraped its content from Google search results to bypass official licensing channels. Reddit says it ran a digital sting to prove its claims, and the suit invokes the DMCA. Reddit previously took similar action against Anthropic, underscoring its strategy to protect and monetize its data.

Who’s Hiring in AI

AI Engineer @IFS (Chicago, IL, USA)

Generative AI Engineer @Sia (Mumbai, India)

Machine Learning Engineer @Cognitiv (San Mateo, CA, USA)

Machine Learning Engineer — Intern @KUNGFU.AI (US/Remote)

AI AWS Lead @Syngenta (Pune, India)

Junior Data Scientist @Blend360 (Atlanta, GA, USA)

AI Operations Manager @SuperAnnotate AI (US/Remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.

Published via Towards AI