The Orienation Generation (RAG) is an approach to building AI systems that connects the language model with an external source of knowledge. Simply put, AI first searches for the relevant documents (such as articles or websites) related to the user's inquiry, and then uses these documents to generate a more accurate answer. This method was celebrated for help in large language models (LLM) in maintaining facts and reducing hallucinations by justifying their response in real data.

Intuitively one might think that the more documents recover artificial intelligence, the better her answer will be. However, recent studies suggest a surprising twist: when it comes to providing information to AI, sometimes there is more of it.

Less documents, better answers

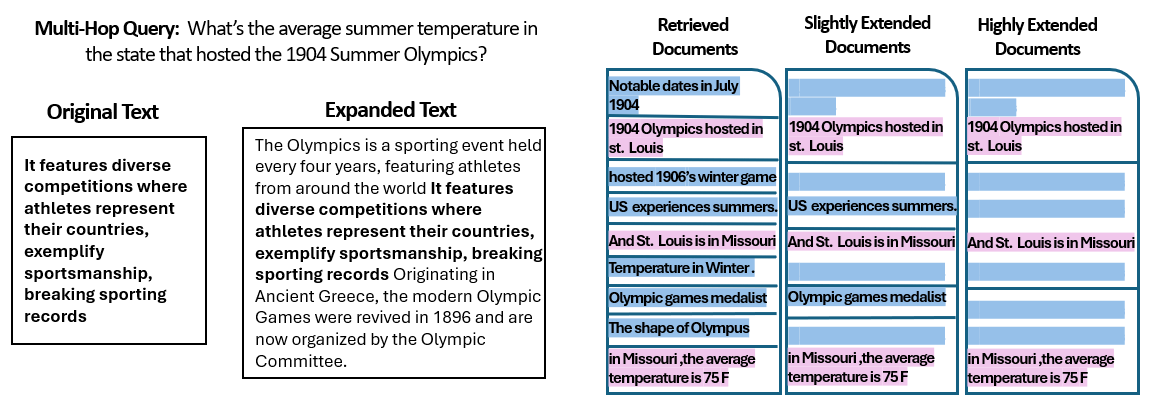

AND New study by scientists from the Hebrew University of Jerusalem, he studied how number documents given to the RAG system affect its performance. Most importantly, they maintained the total amount of text at a constant level – which means that if less documents were provided, these documents were slightly expanded to complete the same length as many documents. In this way, any differences in performance can be assigned the number of documents, not just a shorter contribution.

Scientists used a set of data answering questions (Musique) with questions with curiosities, each of which originally paired with 20 Wikipedia paragraphs (only a few of them contain the answer, and the rest are distracts). By cutting the number of documents from 20 to just 2-4 really important – and lining people with a slightly additional context to maintain a coherent length – they created scenarios in which artificial intelligence had fewer materials to consider, but still more or less the same whole words to read.

The results were striking. In most cases, AI models answered more precisely when they received fewer documents than a full set. The performance has improved significantly – in some cases by up to 10% accuracy (F1 result), when the system only used a handful of auxiliary documents instead of a large collection. This reinforcement contrary to intuition has been observed in several different models of Open-Source languages, including meta and others variants, which indicates that this phenomenon is not related to one AI model.

One model (Qwen-2) was a noteworthy exception that supported many documents without a decrease, but almost all the tested models worked better with fewer documents. In other words, adding more reference materials going beyond key important songs actually hurt their performance more often than helped.

Source: Levy et al.

Why is this such a surprise? Usually, RAG systems are designed, assuming that recovering a wider swath of information can only help AI – after all, if the answer is not in the first few documents, it can be in the tenth or twenty.

This study turns over this script, showing that the uncritical collection of additional documents can reverse. Even when the total length of the text was maintained at a constant level, the very presence of many different documents (of them with its own context and quirks) meant that the task responsible for more difficult questions for AI. It seems that, apart from a certain point, each additional document has introduced more noise than the signal, confusing the model and impairing its ability to extract the correct answer.

Why it may be less in a cloth

This result “less”, makes sense when we think about how the AI language models process information. When AI receives only the most appropriate documents, the context he sees is concentrated and free from distraction, just like the student who received the right pages for learning.

In the study, the models worked much better when they only received support documents, with removing irrelevant material. The remaining context was not only shorter, but also cleaner – it contained facts that directly indicated the answer and nothing more. With fewer documents for juggling, the model can pay full attention to relevant information, which is less likely that it is aside or confused.

On the other hand, when many documents were recovered and she had to break through the mixture of appropriate and insignificant content. Often these additional documents were “similar but unrelated” – they can share the subject or keywords with a question, but in fact they do not contain answers. This content can mislead the model. AI may lose effort by trying to connect dots between documents that do not really lead to the correct answer or, worse, it can incorrectly combine information from many sources. This increases the risk of hallucinations – cases in which AI generates an answer that sounds likely, but is not justified in any single source.

Basically, the transfer of too many documents to the model can dilute useful information and introduce contradictory details, hindering artificial intelligence to decide what is true.

Interestingly, scientists have found that if additional documents were obviously irrelevant (for example, random text), the models were better in ignoring them. Real troubles result from the scattering of data that look important: when all recovered texts are on similar topics, and assumes that all of them should use them, and can fight which details are actually important. It is in accordance with the observation of the study that Random distractions caused less confusion than realistic distractions at the entrance. AI can filter gross nonsense, but subtlely unprofitable information is a skillful trap-a manner is under the guise of meaning and derails the answer. By reducing the number of documents only to those really necessary, we avoid setting these traps.

There are also practical benefits: recovery and processing less documents reduces calculation costs for the RAG system. Each document that is drawn must be analyzed (built -in, read and frequented by the model), which uses time and computing resources. Eliminating unnecessary documents makes the system more efficient – it can find answers faster and at lower costs. In scenarios where accuracy improves, focusing on fewer sources, we get a win: better answers and a slimmer, more efficient process.

Source: Levy et al.

Rethinking rag: future tips

This new proof that quality often breaks the amount of recovery, has important implications for the future of AI systems that are based on external knowledge. This suggests that RAG designers should prioritize intelligent filtering and ranking of documents above the volume itself. Instead of downloading 100 possible fragments and hope that the answer is buried somewhere, only a few of the most important can be wiser.

The authors of the study emphasize the need to download methods “achieving balance between accuracy and diversity” in information provided to the model. In other words, we want to provide a sufficient relationship from the topic to answer the question, but not so much that the basic facts are drowned in the sea from foreign texts.

Going further, scientists will probably examine techniques that help AI models more gracefully support many documents. One approach is to develop better retriever systems or re -rankings that can determine which documents really add value and which only introduce conflict. Another angle is to improve the language models themselves: if one model (such as Qwen-2) coped with many documents without loss of accuracy, studying how it has been trained or structured, can offer tips on increasing the number of models. Perhaps future large language models will contain recognition mechanisms when two sources say the same (or contradictory) and focus accordingly. The goal would be to allow models to use a rich variety of sources without falling victim to confusion – effectively gaining the best of both worlds (information width and brightness of focus).

It is also worth noting that because AI systems gain larger contextual windows (the ability to read more text at the same time), simply dropping more data for monitors is not a silver ball. A greater context does not automatically mean a better understanding. This study shows that even if AI can technically read 50 pages at the same time, providing 50 pages of mixed quality information may not bring a good result. The model still uses the selection, appropriate content for work, not a mass screens. In fact, intelligent search can become even more important in the era of giant contextual windows – to ensure that the additional capacity is used for valuable knowledge rather than noise.

Arrangements with “More documents, the same length” (aptly entitled article) encourage you to re -examine our assumptions in AI research. Sometimes feeding all the data we have is not as effective as we think. Focusing on the most appropriate information, we not only improve the accuracy of the answers generated by AI, but also facilitate more efficient and easier to trust systems. This is a lesson contrary to intuition, but one with exciting consequences: future RAG systems can be both smarter and slimmer, carefully choosing less, better documents to recover.