What is AI search, and why is it completely reshaping SEO as we know it?

We’re no longer in the era where ranking #1 on Google guaranteed clicks. AI search now drives how users find answers by understanding meaning, not just matching words. Whether it’s Google’s AI Overviews, ChatGPT, or Perplexity, these tools don’t just index—they interpret, summarize, and respond. And if your content isn’t optimized for this shift, you’re likely invisible.

Let’s unpack what AI search really is, how it works, and how you can adapt before your traffic disappears for good.

What you’ll learn from this blog:

- What AI search is and how it works across platforms like ChatGPT, Google, and Perplexity.

- How AI search differs from traditional SEO and why rankings no longer guarantee visibility.

- The real impact of zero-click answers, semantic search, and LLMs on your traffic.

- How to measure and improve your AI visibility using Writesonic’s GEO tool.

What is AI search, and why does it matter?

AI search refers to search engines and tools that use artificial intelligence to understand queries contextually, not just literally. In short, unlike traditional search, AI search doesn’t just match exact keywords.

Instead, AI search determines what the user actually means, even if the query is vague, messy, or written in natural language.

At its core, AI in search engines is powered by natural language processing (NLP), machine learning (ML), and large language models (LLMs), such as GPT-4. These technologies work together to:

- Understand full sentences, not just keyword fragments.

- Infer user search intent based on phrasing, tone, and context.

- Pull answers from multiple sources, reframe them, and serve them back in plain language.

How AI search is different from traditional search:

In traditional search (like early Google):

- You type keywords.

- The engine looks for those exact words in documents.

- You get a list of links sorted by relevance and backlinks.

But with AI in search:

- You can ask a full question or give partial input.

- The system interprets what you mean, even if your phrasing is casual, long-winded, or typo-ridden.

- It gives you a summarized answer, sometimes without showing a single link.

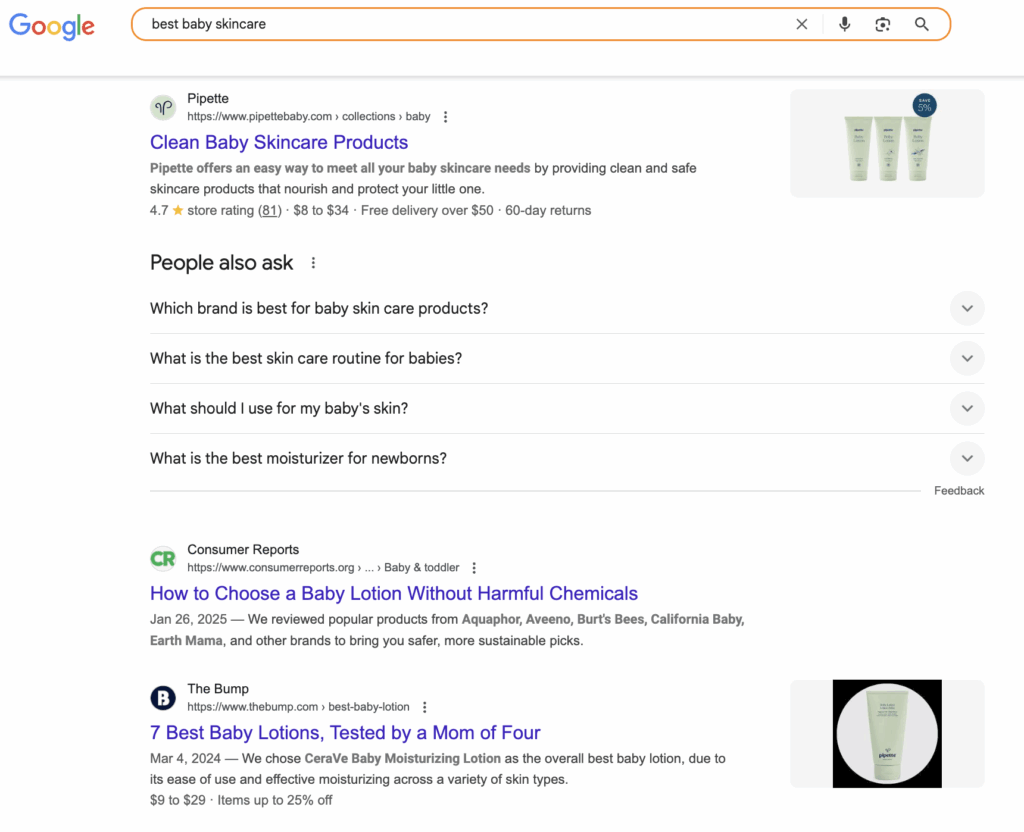

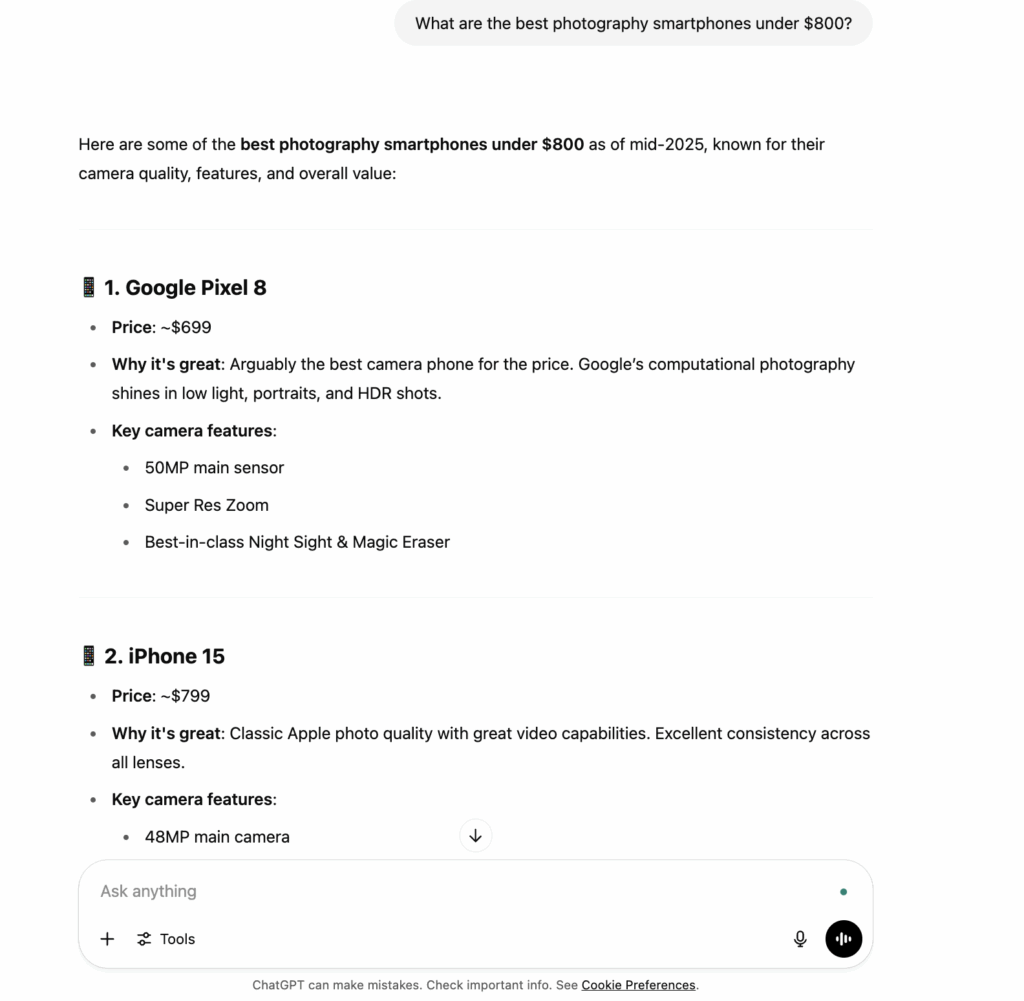

For example, if you search “best photography smartphone under $800” on Google, you’ll get a list of blog posts or tech review sites that match this phrase.

But if you enter the same query on ChatGPT, you’ll get a more conversational explanation, camera spec breakdowns, and a shortlist of models, without any manual link clicking.

Why AI search matters for visibility

Prioritizing AI visibility helps your content show up in tools like ChatGPT, Gemini, and Perplexity, regardless of your Google ranking. These AI systems extract and summarize content directly from websites, often without displaying search results or generating referral traffic.

If your content isn’t being read or cited by AI models, you’re losing visibility, even if your SEO is solid, and here’s why this shift matters:

- 61% of Gen Z and 53% of Millennials use AI tools instead of Google or other traditional search engines. This means you’re missing out on a large audience if you are not optimizing for AI search engines.

- 84% of search queries are now influenced by AI-generated results.

- Google’s rollout of AI Overviews means even traditional search is becoming answer-first, as 60% of searches are now complete without users clicking through to other websites.

- By 2026, traditional search engine volume is expected to decline by 25%, with search marketing losing market share to AI chatbots and other virtual agents.

Unfortunately, most marketing tools like Google Analytics and Search Console don’t show whether AI platforms like ChatGPT’s crawler or Perplexity’s indexer are visiting your site. That means:

- You don’t know if AI is citing your content.

- You can’t measure how often you’re mentioned in AI-generated answers.

- You have no idea where you stand against competitors in AI visibility.

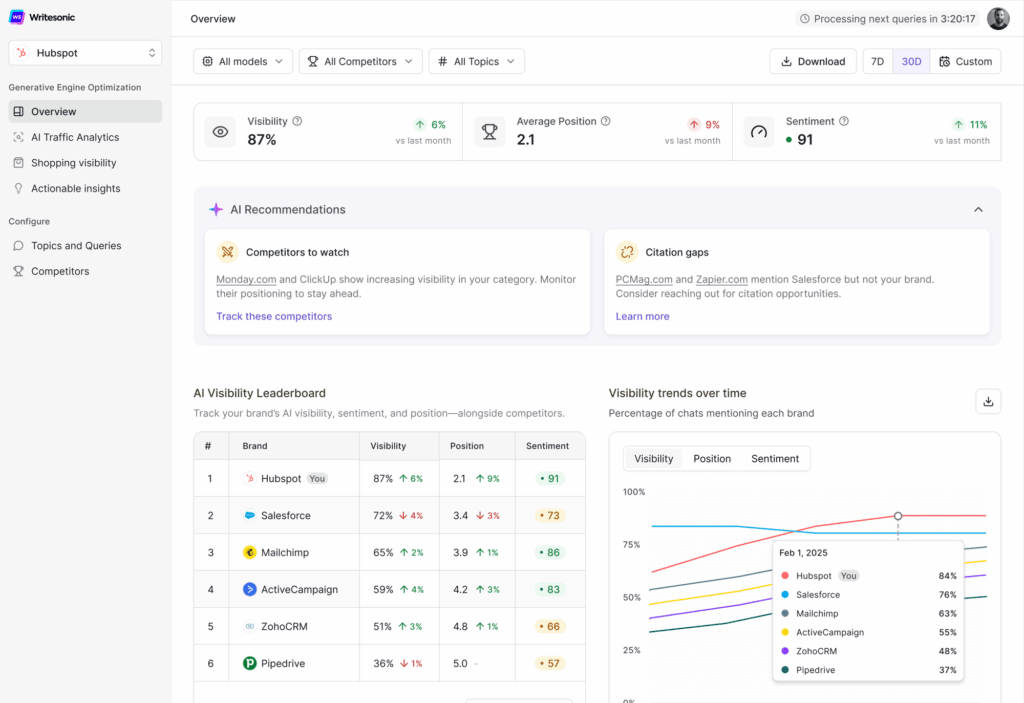

Writesonic’s GEO tool is built specifically to solve this blind spot. It tracks AI crawler activity across your website and gives you detailed performance insights across AI search platforms.

Here’s what you can track with this AI traffic analytics tool:

- AI crawler activity: See exactly which platforms (ChatGPT, Gemini, Claude, Perplexity, etc.) visit your site and how often.

- Visibility and sentiment score: Understand how often your brand appears in AI search results and your brand sentiment across the web.

- Competitor comparison: See how you stack up against others in your space.

You also gain access to actual AI prompts that mention your brand and identify citation gaps—queries where competitors are mentioned but you’re not.
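For a rough, tool-independent look at crawler activity alone, server access logs can be scanned for AI bot user agents. The sketch below is a minimal illustration, not how any particular product works: the log lines and user-agent strings are simplified, hypothetical samples in the common "combined" format, and the bot tokens are GPTBot, ClaudeBot, PerplexityBot, and CCBot. Logs only show visits, so they say nothing about mentions or citations inside AI answers.

```python
import re
from collections import Counter

# User-agent tokens to look for; extend the list as needed.
AI_BOT_TOKENS = ["GPTBot", "ClaudeBot", "PerplexityBot", "CCBot"]

# Hypothetical, simplified access-log lines in the "combined" log format.
LOG_LINES = [
    '203.0.113.5 - - [01/Jan/2025:10:00:00 +0000] "GET /blog/ai-search HTTP/1.1" '
    '200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.5 - - [01/Jan/2025:10:05:00 +0000] "GET /pricing HTTP/1.1" '
    '200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [01/Jan/2025:11:00:00 +0000] "GET /blog/ai-search HTTP/1.1" '
    '200 5120 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '192.0.2.9 - - [01/Jan/2025:12:00:00 +0000] "GET / HTTP/1.1" '
    '200 1024 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]


def count_ai_crawler_hits(lines):
    """Count requests per AI bot token found in the user-agent field."""
    hits = Counter()
    for line in lines:
        # In the combined format, the user agent is the last quoted field.
        quoted_fields = re.findall(r'"([^"]*)"', line)
        if not quoted_fields:
            continue
        user_agent = quoted_fields[-1].lower()
        for token in AI_BOT_TOKENS:
            if token.lower() in user_agent:
                hits[token] += 1
    return hits


crawler_hits = count_ai_crawler_hits(LOG_LINES)
print(dict(crawler_hits))
```

User-agent strings can be spoofed, so treat counts like these as an estimate rather than a verified record.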

How does AI search work?

Artificial intelligence in search engines doesn’t rely on just one system. It combines multiple technologies to understand human language, learn from interactions, retrieve relevant information, and generate accurate responses.

This includes natural language processing, machine learning, LLMs, predictive analytics, and retrieval-augmented generation (RAG).

Here’s a breakdown of these core technologies behind AI search:

1. Data collection

At the foundation of AI search lies robust data collection. Search engines continuously gather vast amounts of information from:

- Web crawling: Scanning and indexing billions of web pages.

- User interactions: Analyzing search queries, clicks, and browsing patterns.

- Structured databases: Incorporating knowledge graphs and curated datasets.

- Real-time feeds: Integrating current news, social media trends, and live data streams.

This diverse data forms the bedrock upon which AI search builds its understanding and capabilities.

2. Natural language processing (NLP)

NLP helps AI understand the way humans naturally speak and write. It goes beyond keyword matching to interpret grammar, context, and user search intent.

Some of the techniques NLP uses:

- Tokenization: Breaking text into individual words or phrases.

- Part-of-speech tagging: Labeling verbs, nouns, and adjectives.

- Lemmatization and stemming: Reducing words to their base form.

- Named entity recognition: Identifying real-world entities like people, dates, and places.

For example, if you ask AI, “Do I need a jacket in Chicago tomorrow?” an NLP-powered system understands this as a weather query, not a clothing recommendation, and pulls relevant forecasts, even if the word “weather” isn’t used.
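To make two of these techniques concrete, here is a minimal sketch of tokenization and stemming in plain Python. The suffix list and the `crude_stem` helper are simplified assumptions for illustration; production systems rely on trained models and proper linguistic resources rather than rules this basic.

```python
import re

# Hypothetical suffix list for a deliberately crude stemmer; real systems
# use established stemmers or dictionary-backed lemmatizers.
SUFFIXES = ("ing", "ed", "es", "s")


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def crude_stem(token: str) -> str:
    """Strip one common suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


query = "Do I need a jacket in Chicago tomorrow?"
tokens = tokenize(query)
stems = [crude_stem(token) for token in tokens]
print(tokens)
print(stems)
```

Tokens like these are only the first step. Recognizing that the query is about weather takes models that have learned how words relate to intent.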

3. Machine learning algorithms (ML)

Machine learning helps AI search engines improve continuously by learning from user behavior. There are three primary types of ML at work:

- Supervised learning: Learns from labeled data, like user clicks or explicit feedback.

- Unsupervised learning: Finds hidden patterns in large datasets without labels.

- Reinforcement learning: Adjusts strategies over time based on what generates better outcomes.

These models help:

- Personalize search results based on user preferences.

- Re-rank content based on real-time engagement signals.

- Handle new, unseen queries by drawing from past search behaviors.

One of the earliest examples is Google’s RankBrain system, which utilizes machine learning (ML) to enhance the understanding of ambiguous or newly introduced queries.

4. Large language models (LLMs)

Large Language Models (LLMs) are advanced AI systems trained on massive datasets to generate natural-sounding responses. They work by predicting the next word in a sentence based on patterns learned during training. In AI search, LLMs are used for:

- Generating full answers instead of just listing links.

- Summarizing complex topics using multiple sources.

- Handling conversational queries while retaining context.

LLMs like GPT-4 (used in ChatGPT), Claude, and Gemini are already integrated into AI search engines, transforming how users consume information.

5. Predictive analytics

Predictive analytics allows AI to anticipate what the user might ask next. Based on past behavior, search history, and query trends, predictive systems can:

- Autocomplete queries as you type.

- Suggest relevant follow-up questions (like Google’s People Also Ask box).

- Surface content that aligns with likely user intent.

For instance, typing “best time to visit” quickly shows suggestions like “Japan,” “New York,” or “Italy in spring”—because the system predicts popular user paths based on real-time data and seasonal patterns.
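The autocomplete idea can be sketched as a frequency ranking over past queries. This is a simplified illustration under stated assumptions: the `QUERY_LOG` data is hypothetical, and real systems also weigh recency, location, and personalization.

```python
from collections import Counter

# Hypothetical query log; a real system would draw on far larger,
# continuously updated data.
QUERY_LOG = [
    "best time to visit japan",
    "best time to visit japan",
    "best time to visit japan",
    "best time to visit new york",
    "best time to visit new york",
    "best time to visit italy in spring",
    "best laptops for students",
]


def suggest(prefix: str, log: list[str], limit: int = 3) -> list[str]:
    """Return the most frequent logged queries that start with the prefix."""
    counts = Counter(q for q in log if q.startswith(prefix.lower()))
    return [query for query, _ in counts.most_common(limit)]


suggestions = suggest("best time to visit", QUERY_LOG)
print(suggestions)
```

The most popular completions come first, which mirrors how suggestions tend to follow common user paths.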

6. Retrieval-augmented generation (RAG)

RAG bridges traditional search with generative AI to produce grounded, up-to-date answers.

First, the system retrieves relevant documents using search algorithms. Then, a language model reads that content and generates a response based on what it finds.

This reduces the risk of AI hallucinations (wrong or made-up facts) by grounding the output in actual source material.

Many AI search engines, such as Perplexity or Bing Copilot, utilize RAG to display citations alongside each response, enhancing transparency and trust.
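The retrieve-then-generate flow can be sketched in a few lines. This is a toy illustration, not any vendor's pipeline: the `DOCUMENTS` corpus is hypothetical, retrieval uses simple word overlap in place of a real search index, and the final call to a language model is left out, so the sketch stops at the grounded prompt.

```python
import re

# Hypothetical mini corpus standing in for an indexed set of web pages.
DOCUMENTS = {
    "doc-1": "Vector search converts content into embeddings that capture meaning.",
    "doc-2": "Zero-click answers resolve a query without a visit to any website.",
    "doc-3": "Schema markup gives pages a structure that machines can parse.",
}


def words(text: str) -> set[str]:
    """Return the set of lowercase words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Step 1: rank documents by word overlap with the query."""
    query_words = words(query)
    ranked = sorted(
        docs,
        key=lambda doc_id: len(query_words & words(docs[doc_id])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, doc_ids: list[str], docs: dict[str, str]) -> str:
    """Step 2: ground the model by placing retrieved text in the prompt."""
    sources = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in doc_ids)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{sources}\n"
        f"Question: {query}"
    )


question = "What are zero-click answers?"
top_ids = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, top_ids, DOCUMENTS)
print(prompt)  # This prompt would then be sent to a language model.
```

Because the source ids travel with the text, the generated answer can cite where each claim came from.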

This combination of NLP, machine learning, LLMs, predictive models, and retrieval pipelines powers modern AI search—and is what fundamentally differentiates it from traditional keyword-based engines.

7. Continuous learning

AI search engines are designed to evolve and improve over time. This continuous learning process involves:

- Feedback loops: Incorporating user interactions and explicit feedback to refine search algorithms.

- A/B testing: Constantly experimenting with new features and ranking methods to optimize performance.

- Adaptive models: Updating AI models to stay current with emerging trends, language shifts, and new information.

- Cross-domain learning: Applying insights from one type of search query to improve results in related areas.

This ongoing refinement ensures that AI search becomes increasingly accurate, relevant, and helpful as it processes more queries and adapts to changing user needs.

💡Learn more about: AI Search Optimization in 2025 and What Actually Works

Types of AI search technologies

1. Semantic search

Semantic search in AI focuses on understanding what you actually mean, not just matching the words you type.

Traditional search looks for exact word matches, whereas semantic search analyzes the relationships between words, your search history, and context to deliver more relevant results.

For example, when you search for “best laptops for graphic design students,” semantic search understands you need devices with powerful graphics cards, sufficient RAM, and color-accurate displays—even though you didn’t mention those specific features.

This intelligence is derived from natural language processing, which identifies patterns and relationships in data, enabling search engines to interpret your intent in a manner similar to how humans would.

2. Vector search

Vector search represents a major shift in how search engines process information—it works with mathematical representations instead of plain text.

This approach converts content (text, images, or other media) into numerical vectors called “embeddings.” These embeddings capture semantic meaning, allowing the system to find conceptually similar items even when exact keywords don’t match.

Vector search excels at matching across:

- Semantically similar content: Finding “canine” when you search for “dog.”

- Multiple languages: Locating “dog” in English when searching for “Hund” in German.

- Different content types: Connecting text mentions of “dog” with actual photographs of dogs.

Because embedding models place similar items close together in vector space, the system can use nearest neighbor algorithms to find relevant content efficiently.
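Here is a minimal sketch of that lookup using cosine similarity. The three-dimensional vectors are hand-written assumptions for illustration only. Real embeddings come from a trained model and have hundreds or thousands of dimensions, and large indexes use approximate nearest neighbor methods instead of the brute-force scan shown here.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-written for illustration.
EMBEDDINGS = {
    "dog": [0.90, 0.10, 0.00],
    "canine": [0.85, 0.15, 0.05],
    "Hund": [0.88, 0.12, 0.02],
    "laptop": [0.05, 0.10, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(term, embeddings, k=2):
    """Brute-force nearest neighbors of a term, excluding the term itself."""
    query_vector = embeddings[term]
    others = [t for t in embeddings if t != term]
    others.sort(
        key=lambda t: cosine_similarity(query_vector, embeddings[t]),
        reverse=True,
    )
    return others[:k]


neighbors = nearest("dog", EMBEDDINGS)
print(neighbors)
```

In this toy data, “canine” and “Hund” sit close to “dog” while “laptop” does not, even though none of the words share spelling.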

3. Generative AI search

Generative AI search goes beyond retrieving existing information—it creates new content in response to your queries.

These systems use large language models to generate direct answers, summaries, or conversational responses based on the information they access. Instead of returning lists of links, generative search provides synthesized responses that combine information from multiple sources.

Google’s AI Overviews exemplify this approach, giving you quick insights without requiring you to scroll through multiple sources.

This technology maintains context through conversations, allowing follow-up questions without repeating previous context. As these systems mature, the line between search and conversation continues to blur.

💡Related to your reading: What Is LLM Optimization and 12 Tips To Improve Your Brand Visibility

How AI search affects SEO and what you can do

AI search is rewriting SEO tactics. Instead of ranking links, AI summarizes content, interprets intent, and delivers zero-click answers. That means your content is judged less by SEO keywords and backlinks—and more by clarity, completeness, and contextual value.

1. Zero-click results are reducing organic traffic, even if your site is cited

In the era of AI-generated answers, visibility ≠ traffic.

Nearly 60% of Google searches in the U.S. now result in zero clicks due to the rise of AI-generated summaries, such as Google’s AI Overviews. And this number is even bigger on AI tools like ChatGPT or Perplexity.

For example, if you search “best SaaS onboarding tools” on Perplexity or Gemini, you’ll get a paragraph listing tools like Userpilot, Appcues, and Intercom. The content is compiled from various sources, but you may never know who wrote what unless you check the citations manually (and users rarely do).

This means that even if your article is powering the answer, you may still receive no traffic, no attribution, and no conversions.

What you can do to adapt to AI search patterns:

- Add brand-inclusive phrasing within your content (e.g., “Based on (YourBrand)’s 2024 survey of 120 SaaS teams…”).

- Use schema markup (especially FAQPage, HowTo, and WebPage) to give your content structure that AI models can parse.

- Monitor AI mentions using Writesonic’s GEO tool to understand if and how your content is used inside AI platforms, so you can optimize it further.
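As one illustration of the schema markup tip, the sketch below builds schema.org FAQPage markup as JSON-LD. The `faq_jsonld` helper and the sample question are hypothetical. The property names (`mainEntity`, `acceptedAnswer`, `text`) follow the schema.org vocabulary.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


markup = faq_jsonld([
    ("What is AI search?",
     "Search that uses AI to interpret the meaning of a query."),
])
# Embed the result in the page inside a JSON-LD script tag.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The questions and answers in the markup should match what is visibly on the page.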

2. Keywords are no longer the main ranking signal—semantic and contextual coverage is

AI-powered search engines use vector search and embeddings to understand what a query “means,” not just what words it contains. That makes semantic understanding far more important than keyword density.

For instance, Perplexity may return the same results for these queries:

- “climate impact on agriculture”

- “how farming is affected by weather changes”

- “global warming crop yield issues”

All three queries may pull from content that doesn’t include those exact phrases.

Here’s what you can do to optimize for semantic coverage:

- Build comprehensive content hubs that cover the broader topic ecosystem (main guide + FAQs + comparisons + explainers).

- Prioritize semantic SEO to fill contextual gaps.

- Think in intent clusters, not keyword clusters, to cover what users actually want to learn across different angles.

3. E-E-A-T is now evaluated through actual language patterns, not backlinks

Large Language Models (LLMs) infer authority from writing style, clarity, content depth, and frequency of mention across reliable sources. They don’t have access to live backlink graphs the way Google’s PageRank does.

Instead, they’re trained on high-quality examples and repeat them in generated answers.

AI platforms prefer citing content that includes:

- First-person experience and use cases

- Verified data with sources

- Named authors with credentials

- Structured formatting (e.g., bullet lists, numbered steps, key takeaways)

To optimize for this, consider adding real author bios with credentials (such as LinkedIn profiles, certifications, and job titles) to your content or creating dedicated “About” pages for your website.

You should also highlight case studies, testimonials, or firsthand experiences wherever possible, as AI tools tend to pick up on this as authoritative language.

Finally, avoid using fluff language. LLMs recognize generic writing and deprioritize it in favor of original phrasing and confident tone.

4. Real-time retrievability and freshness are now visibility factors

Traditional SEO allowed evergreen content to dominate for years. That’s no longer the case.

What’s changed:

- AI systems prioritize content published or updated recently.

- Pages without structured timestamps or version info are less likely to be used.

- AI crawlers prefer content that is parseable and modular—no heavy JavaScript, slow load times, or confusing structure.

What to do:

- Include visible “Last updated” timestamps on all key pages.

- Add modular content blocks: TL;DR, key stats, comparison tables, etc.

- Check for crawl access for AI bots: ensure your robots.txt doesn’t block GPTBot, ClaudeBot, PerplexityBot, or CCBot.
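The robots.txt check can be scripted with Python’s standard `urllib.robotparser` module. In this sketch the robots.txt content and the example.com URL are hypothetical. In practice you would load your own site’s file.

```python
from urllib.robotparser import RobotFileParser

AI_BOTS = ["GPTBot", "ClaudeBot", "PerplexityBot", "CCBot"]

# Hypothetical robots.txt content; in practice, load your site's own file.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""


def blocked_bots(robots_txt: str, url: str, bots: list[str]) -> list[str]:
    """Return the bots that this robots.txt forbids from fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [bot for bot in bots if not parser.can_fetch(bot, url)]


blocked = blocked_bots(ROBOTS_TXT, "https://example.com/blog/ai-search", AI_BOTS)
print(blocked)
```

Here the sample file blocks GPTBot everywhere, while the other bots fall under the wildcard rule and can still reach the blog post.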

5. You can’t track AI visibility with traditional SEO tools, so most brands are flying blind

Google Analytics, Search Console, Ahrefs, and Semrush do not track AI crawler activity. As a result, you can’t see when AI mentions your brand or which queries you appear in.

This creates a massive blind spot in your SEO efforts. To help you get ahead in the AI search race, Writesonic’s GEO tool is purpose-built to track AI search traffic. It shows you:

- Which AI platforms are crawling your site

- How often they visit and which pages they prioritize

- Where your brand is being mentioned in AI-generated answers

- How your AI visibility compares against competitors in prompts and responses

- The exact user queries that trigger AI citations of your brand or content

This data gives you a generative search optimization scorecard, allowing you to adjust your content strategy based on AI-specific performance, not just Google SERP data.

In short, AI is not replacing traditional search or SEO; however, the rules have changed, and marketers need to modernize their strategies to stay competitive.

| Old SEO practices | AI search optimization |
| --- | --- |
| Rank higher to get more clicks | Being cited ≠ being seen. Zero-click answers dominate |
| Focus on keywords | Focus on intent, semantic coverage, and completeness |
| Build backlinks to win trust | Build real authority and first-hand credibility |
| Track Google rankings | Track AI visibility, crawler access, and citations |

If you’re not tracking or optimizing for how AI systems read, reuse, and rank your content, you’re only playing half the game.

💡You might also like: Will AI Replace SEO?

Benefits of AI search

AI search engines transcend traditional keyword-based systems by understanding user intent, context, and preferences. This evolution leads to more accurate, personalized, and efficient search experiences.

1. Contextual understanding enhances relevance

AI search leverages contextual signals—such as user location, device type, and search history—to interpret the true intent behind queries. This approach ensures that users receive results tailored to their specific needs and circumstances.

For example, a search for “jaguar” from a wildlife enthusiast’s profile might prioritize information about the animal, while the same query from a car enthusiast could highlight the automobile brand. This level of contextual awareness is achieved through advanced natural language processing and machine learning algorithms.

2. Personalized experiences drive engagement

By analyzing past interactions, AI search engines can present content that aligns closely with user interests, leading to increased satisfaction and loyalty.

A study by Sitecore highlights that personalized search not only delivers relevant results faster but also improves user engagement and customer retention. Tailored recommendations and adaptable content delivery are key factors in this enhanced user experience.

3. Handles typos and ambiguous queries

AI search systems are equipped with fuzzy matching algorithms that can interpret and correct misspelled or ambiguous queries. This capability ensures that users receive accurate results even when their input isn’t perfect.

For example, a query like “recieve latest updatse” would still yield relevant information about recent updates, thanks to the AI’s ability to recognize and correct common typographical errors.
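A simple form of fuzzy matching can be sketched with Python’s standard `difflib` module. The `VOCABULARY` list and the 0.8 similarity cutoff are assumptions for illustration. A search engine would derive its vocabulary from its index and typically combines string similarity with language models and query logs.

```python
import difflib

# Hypothetical vocabulary; a search engine would derive this from its index.
VOCABULARY = ["receive", "latest", "updates", "news"]


def correct_query(query: str, vocabulary: list[str]) -> str:
    """Replace each word with its closest vocabulary match, if any."""
    corrected = []
    for word in query.lower().split():
        matches = difflib.get_close_matches(word, vocabulary, n=1, cutoff=0.8)
        corrected.append(matches[0] if matches else word)
    return " ".join(corrected)


fixed_query = correct_query("recieve latest updatse", VOCABULARY)
print(fixed_query)
```

Words with no sufficiently close match are left unchanged, which avoids "correcting" brand names or rare terms into something else.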

4. Continuous learning improves accuracy over time

AI search engines continuously learn from user interactions, refining their algorithms to deliver increasingly accurate results. By analyzing patterns in user behavior—such as click-through rates and dwell time—these systems adapt to changing user needs and preferences.

This ongoing learning process ensures that the search experience becomes more intuitive and effective over time, aligning closely with individual user expectations.

5. Multimodal search expands accessibility

Multimodal search capabilities allow users to input queries through various formats, including text, voice, and images. This flexibility enhances accessibility and caters to diverse user preferences.

For instance, a user can upload a photo of a product and ask for similar items, or use voice input to search for information hands-free. This approach not only broadens the ways users can interact with search engines but also improves the precision and relevance of the results.

Risks and downsides of AI search

1. Data privacy and ethical concerns

AI search systems collect far more data than traditional search engines. They actively gather, process, and store your queries, often keeping this information for extended periods to improve their algorithms.

Research shows many AI search tools collect input data to personalize results and refine responses. As a result, platforms may link your AI search behavior with existing user profiles, connecting your search activity to other online habits.

The way these systems use content raises additional ethical questions. When generating answers, many systems scrape and use content without publishers’ permission or proper attribution.

Studies have also found that over 60% of AI-generated responses contain incorrect or misleading information.

2. Dependence on high-quality training data

AI search tools are only as reliable as their training data. This dependence creates vulnerability to data quality issues that can seriously impact performance.

Modern AI applications need enormous quantities of training data. When that data is incomplete, erroneous, or inappropriate, you get unreliable models that make poor decisions.

Data sparsity (missing or insufficient information) presents another challenge. Training models on irrelevant data creates the same problems as using poor-quality data.

3. Bias in training data and results

AI search systems can inadvertently inherit societal biases present in their training data and machine learning algorithms, then amplify and perpetuate them once deployed.

The consequences extend beyond simple inaccuracies.

For example, in employment situations, AI tools might screen job applicants by analyzing resumes based on historical hiring data. If that historical data contains gender or racial biases, the AI system learns and repeats those biases.

AI search systems may also rate photos of women as more suggestive than comparable images of men.

Tracking traffic from AI search with Writesonic

AI search has changed the rules. Your content might be powering ChatGPT or Gemini answers—but you’d never know from Google Analytics.

If you want to measure real visibility in AI search, traditional SEO tools won’t cut it. Writesonic’s GEO tool shows which AI platforms are crawling your site, what they’re reading, and where your brand is (or isn’t) being mentioned.

It’s the missing piece in your content strategy—because in the world of AI search, visibility without tracking is just guesswork.

Want to see how AI really sees your brand?