Author(s): Gowtham Boyina

Originally published in Towards Artificial Intelligence.

Classic software development is deterministic. You code, you test, you deploy, and the same input always produces the same result. The sequence of logic is predictable, as are the failure modes.

AI agents do not operate according to these rules. They reason probabilistically, not procedurally. Give an agent the same prompt twice and its actions may branch in different ways. The same tool calls can return different results. “It works on my machine” no longer applies when the machine itself learns, changes, and reinterprets the context.

The Software Development Life Cycle (SDLC) falls apart in such circumstances because it assumes static behavior and fixed logic. AI agents in the enterprise need a different discipline, one in which behavior and reasoning are treated as measurable, controllable, and changing over time.

This is the Agent Development Life Cycle (ADLC).

Why SDLC doesn't work for AI agents

1. Deterministic vs. probabilistic behavior

SDLC assumes that identical inputs produce identical results, enabling static testing and regression verification.

Agents act probabilistically. The same input can lead to different reasoning paths, tool invocations, and conclusions. This unpredictability invalidates the SDLC's binary pass/fail testing model. ADLC replaces test cases with evaluation frameworks that track performance distributions: measures of hallucination, grounding, bias, and safety rather than correctness alone.
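
One way to picture the shift from pass/fail tests to performance distributions is to run the same prompt many times and gate on the observed pass rate. The sketch below is illustrative only: `flaky_agent`, its assumed 90% success rate, and the 85% threshold are hypothetical stand-ins for a real agent call and a real evaluation policy.

```python
import random


def flaky_agent(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a non-deterministic agent call."""
    # Assumed behavior: a grounded answer about 90% of the time.
    return "Paris" if rng.random() < 0.9 else "Lyon"


def pass_rate(prompt: str, expected: str, runs: int, seed: int = 0) -> float:
    """Run the same prompt repeatedly and return the fraction of correct answers."""
    rng = random.Random(seed)
    passed = sum(flaky_agent(prompt, rng) == expected for _ in range(runs))
    return passed / runs


def meets_threshold(rate: float, threshold: float) -> bool:
    """Gate on a rate rather than on a single pass/fail result."""
    return rate >= threshold


rate = pass_rate("What is the capital of France?", "Paris", runs=200)
print(f"observed pass rate: {rate:.1%}")
print("meets 85% threshold:", meets_threshold(rate, 0.85))
```

A single run of this agent would pass or fail more or less by chance; the rate over many runs is the quantity worth tracking across releases.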

2. Static logic vs. adaptive systems

Traditional software remains static until it is re-deployed. Agents adapt dynamically through reinforcement, retraining, and continuous feedback. The SDLC update cycle does not allow for continuous adaptation.

ADLC embeds runtime optimization loops, enabling live tuning of prompts, models, and tools based on real-world telemetry.

3. Implementation focus vs. evaluation focus

SDLC defines success by implementation fidelity.

ADLC defines success based on behavioral performance – does the agent achieve enterprise KPIs within defined risk limits?

An agent with imperfect code but consistent, verifiable behavior meets ADLC standards; SDLC would incorrectly mark it as defective.

4. Closed testing vs. continuous certification

SDLC treats testing as a phase. ADLC treats it as a continuous discipline.

Agents must pass continuous behavioral certification: periodic red teaming, bias audits, compliance checks, and retraining. Governance catalogs replace static quality-assurance reports; each agent release includes provenance, evaluation metrics, and risk documentation.

5. Security through code vs. security through behavior

SDLC security mitigates code vulnerabilities.

ADLC security mitigates behavioral vulnerabilities: prompt injection, tool misuse, memory poisoning, and goal hijacking.

Agents require sandboxed execution, cryptographic identity issuance, and governance at the MCP gateway level, enforcing runtime policies and limiting untrusted operations.
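
To make gateway-level policy enforcement concrete, here is a minimal sketch of a policy check that sits between an agent and its tools. It is not an MCP API: `AgentPolicy`, `Gateway`, the tool names, and the call budget are all hypothetical, and cryptographic identity verification is assumed to have happened before `authorize` is called.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical per-agent permission boundary."""
    agent_id: str
    allowed_tools: frozenset
    max_calls_per_task: int


@dataclass
class Gateway:
    """Hypothetical gateway that allows or denies tool calls and logs each decision."""
    policies: dict
    call_counts: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def authorize(self, agent_id: str, tool: str) -> bool:
        policy = self.policies.get(agent_id)
        used = self.call_counts.get(agent_id, 0)
        if policy is None:
            decision = "deny:unknown-agent"
        elif tool not in policy.allowed_tools:
            decision = "deny:tool-not-allowed"
        elif used >= policy.max_calls_per_task:
            decision = "deny:call-budget-exceeded"
        else:
            decision = "allow"
            self.call_counts[agent_id] = used + 1
        # Every decision is recorded, including denials.
        self.audit_log.append((agent_id, tool, decision))
        return decision == "allow"


policy = AgentPolicy("billing-agent", frozenset({"lookup_invoice"}), max_calls_per_task=2)
gateway = Gateway(policies={policy.agent_id: policy})
print(gateway.authorize("billing-agent", "lookup_invoice"))
print(gateway.authorize("billing-agent", "delete_customer"))
```

Denying by default and logging every decision keeps the policy outside the agent's own reasoning, so an injected prompt cannot argue its way past it.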

6. Linear model vs. continuous loops

SDLC progresses linearly (Plan → Build → Test → Deploy → Maintain).

ADLC works as a cyclical framework embedding two continuous loops:

- Experimentation loop between Build and Test for evaluation-driven improvement.

- Runtime optimization loop between Operate and Monitor for live adaptation.

Agent systems evolve – so the lifecycle must evolve continuously.

7. Limited governance vs. full accountability

SDLC documentation ends when the deployment is signed off.

ADLC integrates governance, provenance and risk management in every phase.

Each agent has an owner, version, permission boundary, and audit trail. Its decisions can be reconstructed and its results linked to measurable enterprise value.
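
As a rough illustration of what such a record might hold, the sketch below models an agent with an owner, a version, a permission boundary, and an append-only audit trail. The class and field names are assumptions made for this example, not a schema from the source guide.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    timestamp: str
    action: str
    detail: str


@dataclass
class AgentRecord:
    """Hypothetical catalog entry for one governed agent."""
    name: str
    owner: str
    version: str
    permitted_tools: frozenset
    audit_trail: list = field(default_factory=list)

    def record(self, action: str, detail: str) -> None:
        """Append a timestamped event; existing events are never rewritten."""
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_trail.append(AuditEvent(stamp, action, detail))

    def reconstruct(self) -> list:
        """Return the agent's decisions in the order they were made."""
        return [f"{event.action}: {event.detail}" for event in self.audit_trail]


agent = AgentRecord("claims-triage", "claims-ops", "1.4.0", frozenset({"read_claim"}))
agent.record("tool_call", "read_claim(id=123)")
agent.record("decision", "routed claim 123 to manual review")
for line in agent.reconstruct():
    print(line)
```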

ADLC framework

ADLC extends DevSecOps with six interdependent phases, governed by the experimentation and runtime optimization loops.

1. Plan

Define use cases, measurable KPIs, and acceptable risk limits. Write behavioral specifications in natural language and map them to evaluation criteria: accuracy, trust, compliance, latency, security.

Generate or synthesize datasets for testing. Plan governance gates: management approval, ethical standards, and escalation procedures for agent autonomy.

2. Code and build

Develop prompt logic, memory mechanisms, and orchestration frameworks. Integrate with enterprise APIs and MCP tools.

- Use a hybrid model architecture (frontier models + domain models).

- Enforce secure-by-design practices: sandboxing, least privilege, and traceable agent identity.

- Instrument observability hooks for inference traces, tool calls, and output.

Deliverables include prompts, tool schemas, memory policies, and model manifests, all under version control.

3. Test, optimize, release

Verify behavior, not just function. Testing now includes:

- Behavioral assessment (grounding, bias, hallucinations).

- Red teaming to verify resistance to attacks.

- Compliance verification through the LLM-as-a-Judge (LLM-aaJ) framework.

Agents that pass all assessment thresholds are certified in governed catalogs with metadata (version, owner, provenance, and policy compliance).
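
The certification step can be pictured as a gate that compares evaluation metrics against thresholds and emits a catalog entry. The following is a sketch under stated assumptions: the metric names, threshold values, and the `Certification` record are hypothetical and would come from the Plan phase in practice.

```python
from dataclasses import dataclass

# Hypothetical thresholds: "min" means higher is better, "max" means lower is better.
THRESHOLDS = {
    "grounding": ("min", 0.90),
    "hallucination_rate": ("max", 0.05),
    "bias_score": ("max", 0.10),
    "red_team_block_rate": ("min", 0.95),
}


@dataclass(frozen=True)
class Certification:
    agent: str
    version: str
    owner: str
    certified: bool
    failures: tuple


def certify(agent: str, version: str, owner: str, metrics: dict) -> Certification:
    """Certify only if every required metric is present and within its limit."""
    failures = []
    for name, (kind, limit) in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} below {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} above {limit}")
    return Certification(agent, version, owner, not failures, tuple(failures))


result = certify(
    "support-agent", "2.1.0", "cx-platform",
    {"grounding": 0.93, "hallucination_rate": 0.08,
     "bias_score": 0.04, "red_team_block_rate": 0.97},
)
print(result.certified, result.failures)
```

Treating a missing metric as a failure means an agent cannot be certified simply because an evaluation was skipped.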

4. Deploy

Deploy in hybrid or multi-cloud environments under tight security and compliance controls.

- Integrate MCP gateways for policy enforcement and observability.

- Enable multi-agent orchestration for collaborative workflows.

- Implement rollout, rollback, and kill-switch mechanisms.

Deployment is a governance milestone, not a handover.
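
A minimal sketch of how staged rollout, rollback, and a kill switch might fit together is shown below. The `Deployment` class and its percentage-based routing rule are assumptions for illustration; a production system would typically delegate this to its deployment platform.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical traffic router for one agent with a stable and a candidate version."""
    stable_version: str
    candidate_version: Optional[str] = None
    candidate_percent: int = 0  # share of traffic sent to the candidate, 0-100
    killed: bool = False

    def start_rollout(self, version: str, percent: int) -> None:
        self.candidate_version = version
        self.candidate_percent = percent

    def route(self, request_id: int) -> Optional[str]:
        """Return the version that serves this request, or None if the agent is halted."""
        if self.killed:
            return None
        if self.candidate_version and request_id % 100 < self.candidate_percent:
            return self.candidate_version
        return self.stable_version

    def promote(self) -> None:
        """Make the candidate the new stable version."""
        if self.candidate_version:
            self.stable_version = self.candidate_version
        self.candidate_version, self.candidate_percent = None, 0

    def rollback(self) -> None:
        """Drop the candidate and send all traffic back to stable."""
        self.candidate_version, self.candidate_percent = None, 0

    def kill(self) -> None:
        """Stop serving entirely, regardless of version."""
        self.killed = True


deployment = Deployment(stable_version="v1")
deployment.start_rollout("v2", percent=10)
served = [deployment.route(i) for i in range(100)]
print(served.count("v2"), "of 100 requests reached the candidate")
```

Keeping the kill switch separate from rollback reflects two different failures: a bad release, and an agent that should not act at all.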

5. Operate

Run agents as managed digital assets. Maintain catalogs of all active agents along with their tools, permissions, and audit trails.

Conduct continuous compliance audits, integrity analyses, and operational tuning.

Securely retire legacy agents with full data provenance and evaluation history.

6. Monitoring and runtime optimization loop

Establish real-time observability pipelines capturing telemetry on accuracy metrics, cost, latency, and drift.

Detect anomalies such as goal manipulation, memory poisoning, or unauthorized tool calls.

Feed insights back into the optimization loop to refine prompts, retrain models, and dynamically rebalance resource allocation.
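
Drift detection of the kind described here can be approximated with a rolling average compared against a certified baseline. This sketch assumes a per-interaction quality score between 0 and 1 is already available; `DriftMonitor`, the window size, and the tolerance are hypothetical choices.

```python
from collections import deque


class DriftMonitor:
    """Flags drift when the rolling mean falls too far below a baseline."""

    def __init__(self, baseline: float, window: int, tolerance: float) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)

    def observe(self, score: float) -> bool:
        """Record one score; return True when drift is detected."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data to judge yet
        rolling_mean = sum(self.scores) / len(self.scores)
        return (self.baseline - rolling_mean) > self.tolerance


monitor = DriftMonitor(baseline=0.9, window=4, tolerance=0.1)
for score in [0.9, 0.9, 0.9, 0.9, 0.5, 0.5, 0.5]:
    drifted = monitor.observe(score)
print("drift detected:", drifted)
```

A signal like this would feed the optimization loop as a trigger for prompt revision, retraining, or rollback.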

The basic monitoring question changes from “Is it working?” to “Is it accurate, safe, and aligned?”

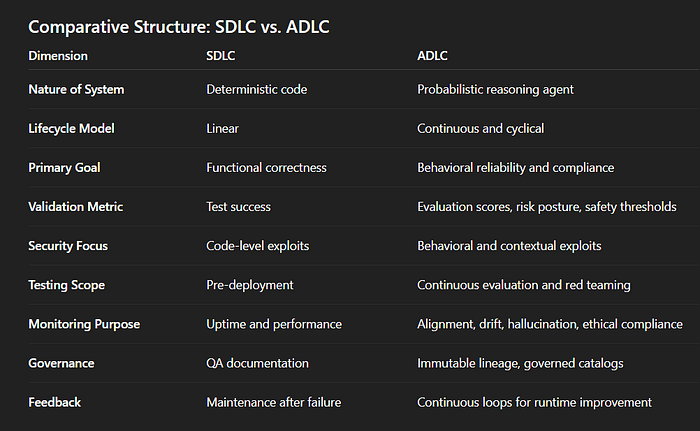

Comparison framework: SDLC vs. ADLC

Structural result

SDLC builds applications that run; ADLC builds agents that reason.

SDLC provides stability. ADLC delivers alignment.

Both share common DevSecOps roots, but ADLC introduces a new operational logic: each phase is observable, reversible, and empirically measured.

Traditional DevOps pipelines cannot manage agents that change behavior mid-execution, use external tools autonomously, or make contextual decisions without clear code paths.

ADLC integrates security, compliance, and assessment into a self-correcting lifecycle – an adaptive feedback system that reflects the adaptive nature of the agents themselves.

New Discipline

AI agents are not code – they are evolving systems that reason, act and adapt. Treating them as software artifacts leads to operational failures, security vulnerabilities, and regulatory risks.

The Agent Development Life Cycle (ADLC) is not a passing trend; it is the operating framework for the next era of enterprise systems.

It recognizes the probabilistic nature of intelligence, embeds assessment at every stage, and extends DevSecOps into the domains of reasoning and autonomy.

Organizations that adopt ADLC won't just create smarter software; they'll sustain governed, evolving intelligence that aligns with business intent.

Attribution:

Adapted from IBM's “Architecting Guide for Secure Enterprise AI Agents with MCP”, reviewed by Anthropic, TechXchange 2025.

Published via Towards AI